Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”. In this newsletter, we will lay out a roadmap for building powerful generative AI applications.

In 2024, we stand at a pivotal moment where the AI solutions we develop are set to reshape the future of work.

The world recognizes the stakes, and we’re witnessing a historic surge in applications powered by generative AI. However, only a select few of these applications will prove indispensable.

That raises the question: what is the process for building applications that can truly create an impact?

Creating impactful AI apps is still a frontier, filled with challenges and requiring pivotal decisions on cost management, technology selection, and more. These decisions critically shape the product’s performance for end-users.

We at Data Science Dojo present a comprehensive guide to help you build generative AI applications that are not just powerful, but also reliable and scalable. It is based on our own experience of making AI work in real-world settings, and it’s here to help you make your AI projects a success.

The Process of Building Generative AI Applications

Building generative AI applications is an iterative process, and often quite a bumpy ride.

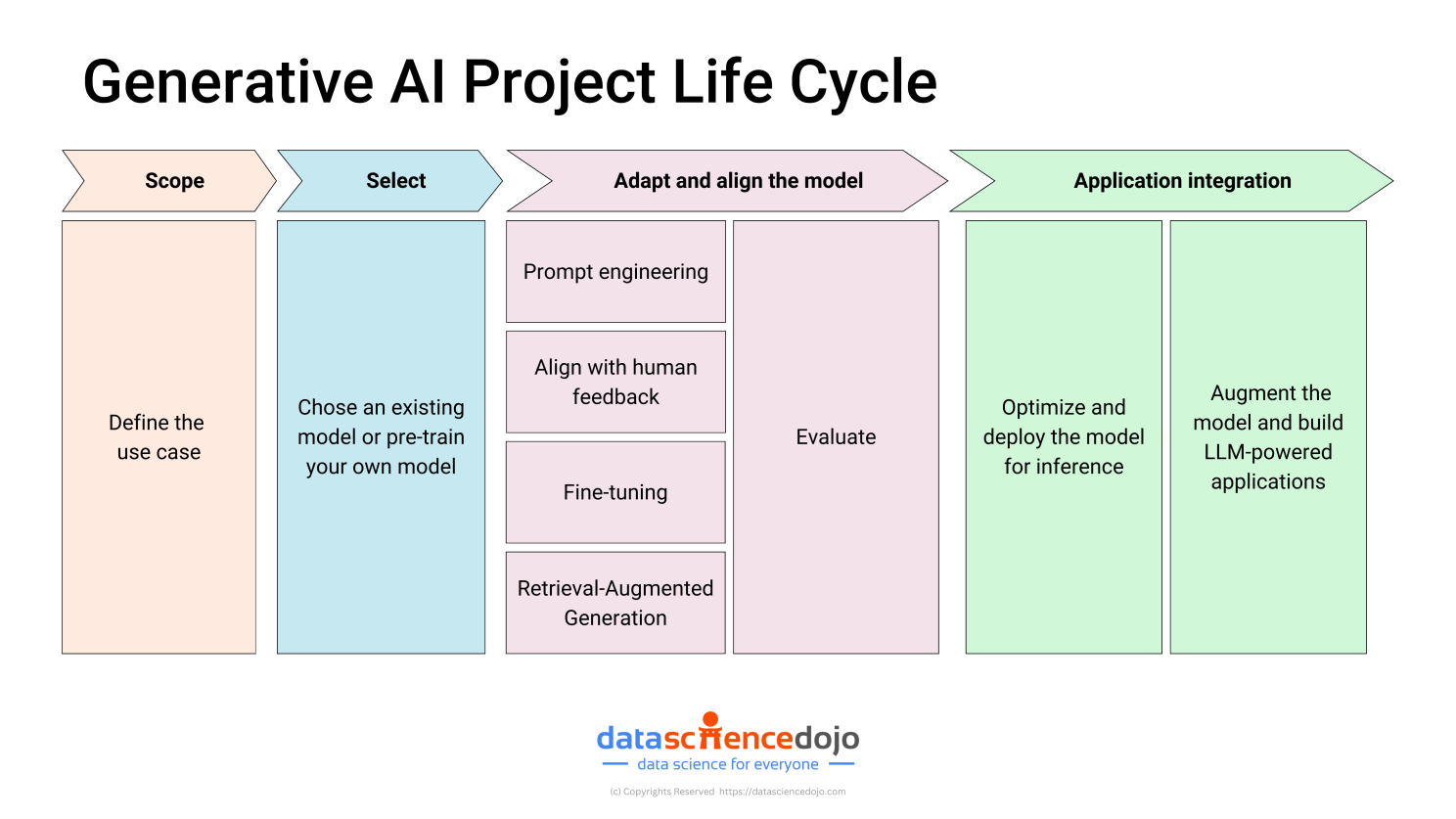

First, let’s understand the different stages you will encounter while building a generative AI application.

Dive Deeper: A Complete Guide for Managing Generative AI Projects

Phase 1 – Defining the Scope of the Generative AI Project

Let’s start at square one: figuring out why you’re undertaking this project. What’s the purpose of your AI endeavor? This step is all about laying the foundation for everything that follows.

Here’s what you need to pin down:

- The Problem: What exactly are you trying to fix or improve with your AI solution?

- The Users: Who’s going to use this? Who benefits directly from your project?

- Expectations: What do these users expect the AI to do? Can it meet those expectations?

For example, suppose you’re aiming to enhance customer service. In this case, you might consider developing a chatbot that can handle complex queries with a human-like understanding.

Phase 2 – Select the Ideal Language Model for Your Application

Now that you’ve nailed down your use case, choosing the right foundation model for your application should be your next step.

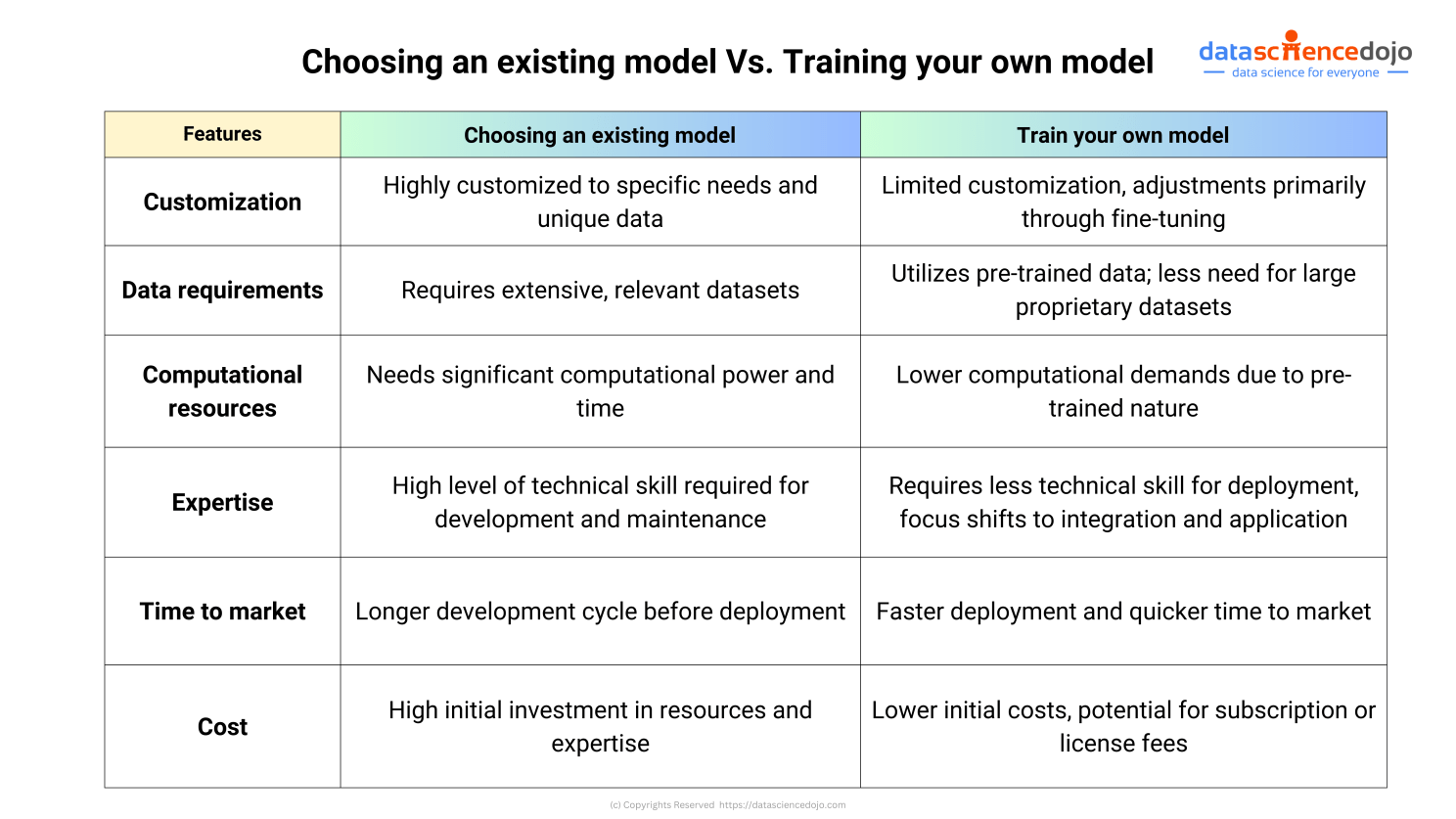

But let’s not sugarcoat it: selecting the right model is still a tough decision. You need to figure out whether to build your own model from scratch or use an existing one.

Here’s a quick breakdown of the pros and cons for both options:

Since building a language model on your own is quite expensive, most companies choose to use an existing one.

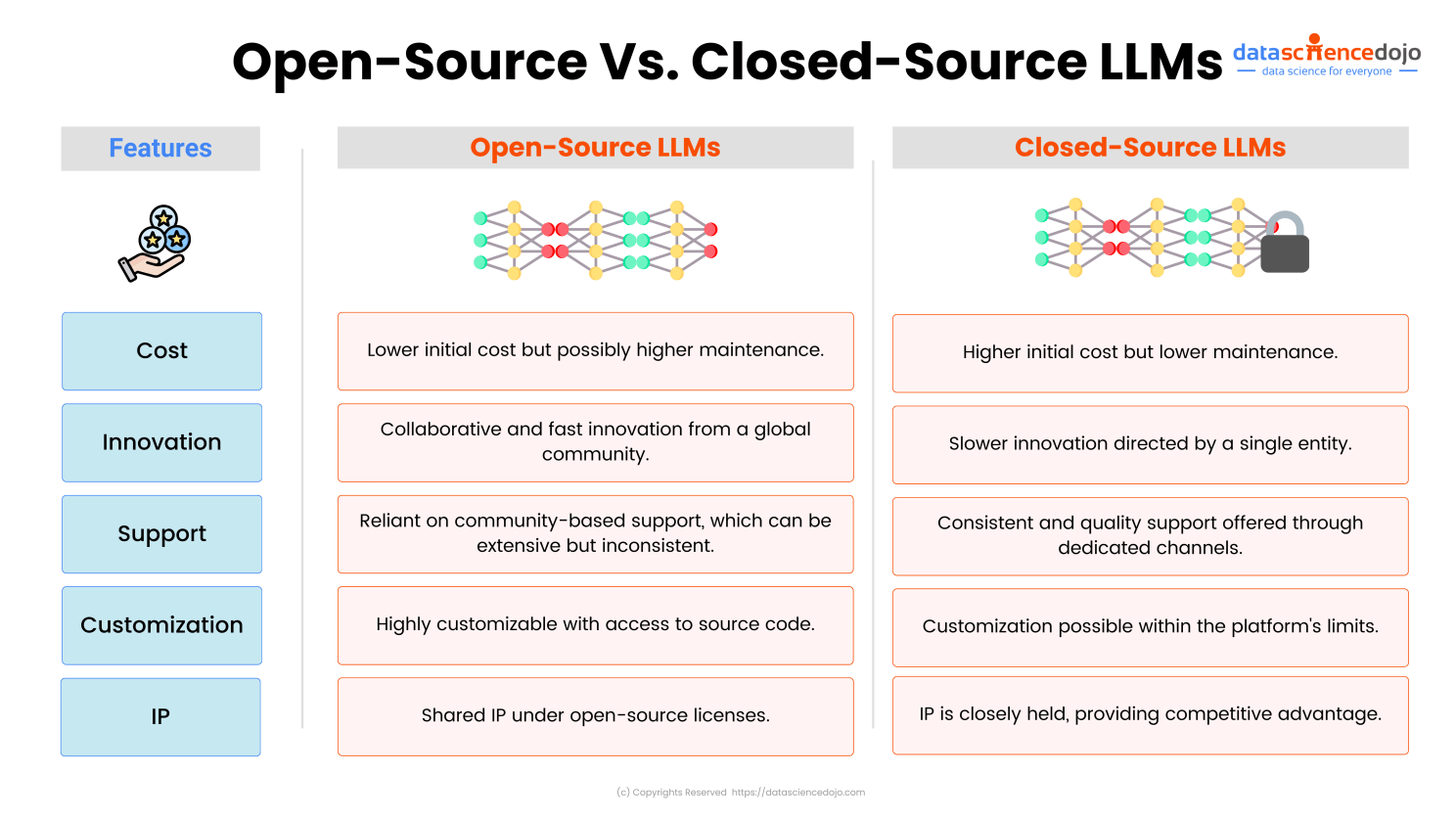

There are two main types of existing models: open-source and closed-source.

Read more: Open-Source Vs. Closed-Source Models for Enterprises

Phase 3 – Adapt and Align the Model

Language models have vast, generalized information within them. But based on your use case, you’d want the model to specialize in a specific domain, use external information, or have a specific quality.

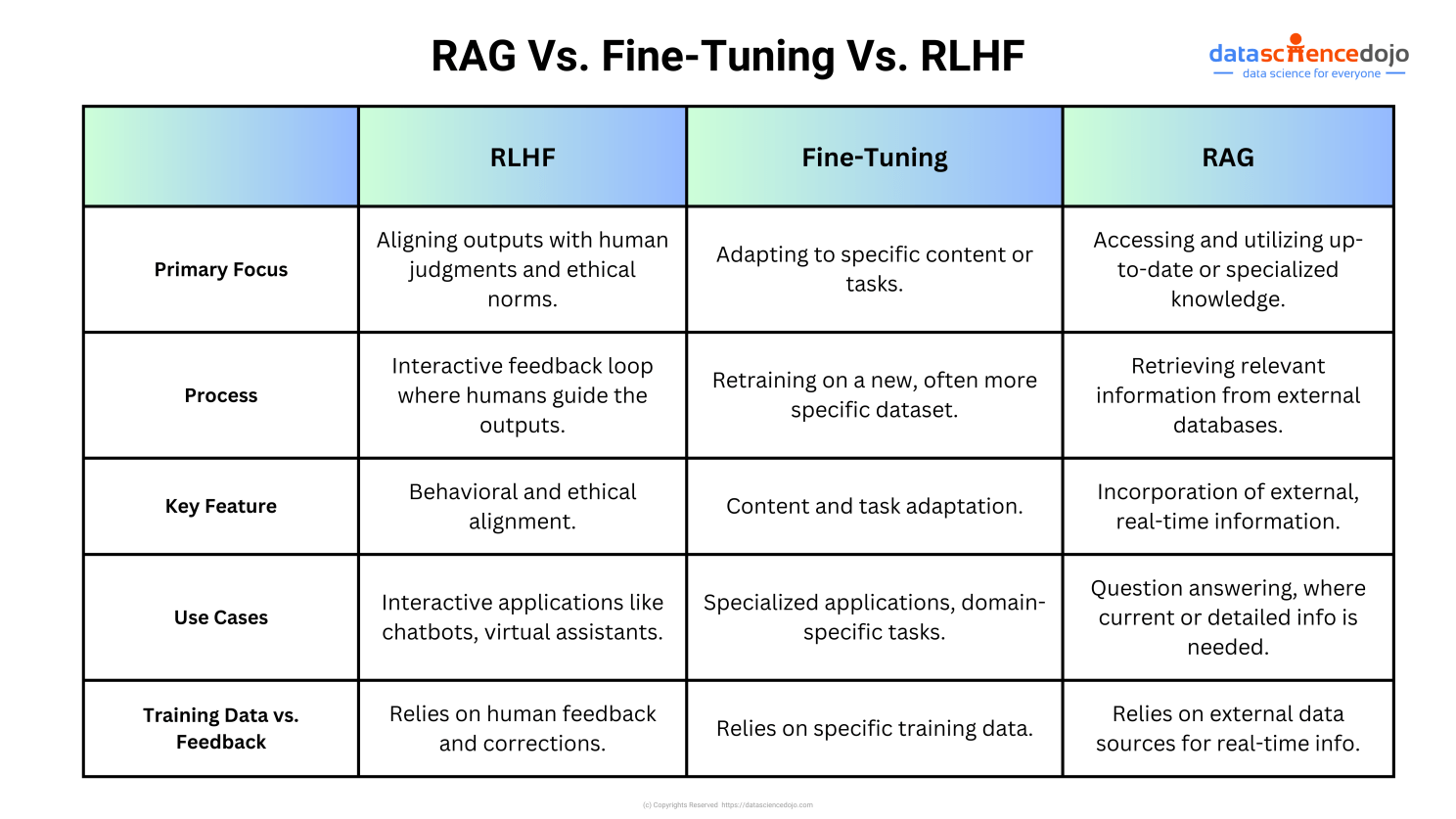

There are four popular methods for aligning a model with your use case, each with its own advantages and challenges.

- Prompt Engineering: This method involves creating prompts that direct the model to produce the results you want. Read more

- Fine-tuning: This approach adjusts the model’s parameters to better meet the specific needs of your project. Read more

- Retrieval Augmented Generation (RAG): RAG combines a traditional language model with a retrieval component. It retrieves information from a knowledge base (like a database of documents) as context to generate more informed and accurate outputs. Read more

- Human Feedback Alignment: This involves using feedback from real-world use to fine-tune the model’s outputs, making sure they stay relevant and precise. Read more
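To make the retrieval step of RAG more concrete, here is a minimal sketch using only the Python standard library. It is not a production design: the knowledge base, the word-overlap scoring, and the function names (`retrieve`, `build_prompt`) are all assumptions made for illustration, and a real system would typically use embeddings and a vector store, then pass the assembled prompt to a language model.

```python
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be your own documents.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "Premium plans include priority support and a dedicated manager.",
    "Passwords can be reset from the account settings page.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, document):
    """Count how many query tokens also appear in the document."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(document))
    return sum(min(query_counts[t], doc_counts[t]) for t in query_counts)


def retrieve(query, documents, k=1):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Assemble a prompt that places the retrieved context before the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


# The resulting prompt would be sent to whichever language model you chose.
prompt = build_prompt("How long do refunds take?", KNOWLEDGE_BASE)
print(prompt)
```

The point of the sketch is the shape of the pipeline: retrieve relevant text first, then hand it to the model as context, so that answers are grounded in your own data rather than only in what the model learned during training.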

Once you have adapted the model using any of these approaches, it is important to evaluate whether its performance has actually improved over that of the base model.
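One simple way to run such a comparison is to score the base and adapted models on the same labeled questions. The sketch below uses exact-match accuracy, which is only one of many possible metrics. The reference answers and model outputs are made-up placeholders for illustration, not real results, and `exact_match_accuracy` is a hypothetical helper name.

```python
def exact_match_accuracy(predictions, references):
    """Fraction of predictions that match the reference, ignoring case and outer whitespace."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("at least one example is required")
    matches = sum(
        p.strip().lower() == r.strip().lower()
        for p, r in zip(predictions, references)
    )
    return matches / len(references)


# Placeholder evaluation set and outputs (illustrative only, not measured results).
references = ["paris", "4", "blue", "mercury"]
base_outputs = ["Paris", "5", "green", "Mercury"]
adapted_outputs = ["Paris", "4", "green", "Mercury"]

base_accuracy = exact_match_accuracy(base_outputs, references)
adapted_accuracy = exact_match_accuracy(adapted_outputs, references)

print(f"Base model accuracy:    {base_accuracy:.2f}")
print(f"Adapted model accuracy: {adapted_accuracy:.2f}")
print(f"Change:                 {adapted_accuracy - base_accuracy:+.2f}")
```

Keeping the evaluation set fixed across both runs is what makes the comparison meaningful. For open-ended generation, exact match is usually too strict, and a task-appropriate benchmark or human review would be a better fit.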

Read: Key Benchmarks to Evaluate the Performance of Language Models

Phase 4 – Application Integration

Now that you’ve gotten a handle on how to tweak language models for your specific needs, it’s time to think about how to roll them out into real-world applications. Here’s a straightforward look at the key considerations for integrating your model:

Performance and Resources: First up, you need to figure out how quickly your model needs to deliver results. What’s your budget for computational resources? Sometimes, you will need to trade off how fast the model responds against how much it costs in compute or storage.
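The speed-versus-cost trade-off can be sketched as a small calculation. Everything in the example below is an assumption for illustration: the model names, latencies, per-token prices, traffic figures, and the helper names `monthly_cost` and `choose_model` are hypothetical, so substitute your own measurements and your provider’s actual pricing.

```python
def monthly_cost(requests_per_day, tokens_per_request, price_per_1k_tokens, days=30):
    """Estimate monthly spend from traffic volume and a per-1,000-token price."""
    total_tokens = requests_per_day * days * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens


def choose_model(options, requests_per_day, tokens_per_request, budget, max_latency_s):
    """Return the cheapest option that meets both the latency and budget limits, or None."""
    candidates = []
    for option in options:
        cost = monthly_cost(
            requests_per_day, tokens_per_request, option["price_per_1k_tokens"]
        )
        if option["latency_s"] <= max_latency_s and cost <= budget:
            candidates.append((cost, option))
    if not candidates:
        return None
    return min(candidates, key=lambda pair: pair[0])[1]


# Hypothetical options; the numbers are placeholders, not real pricing or benchmarks.
options = [
    {"name": "large-model", "latency_s": 2.0, "price_per_1k_tokens": 0.03},
    {"name": "small-model", "latency_s": 0.5, "price_per_1k_tokens": 0.002},
]

selected = choose_model(
    options,
    requests_per_day=1000,
    tokens_per_request=500,
    budget=100.0,
    max_latency_s=1.0,
)
print(selected["name"] if selected else "No option fits the constraints")
```

If no option satisfies both limits, the function returns `None`, which is a useful signal that either the budget, the latency target, or the choice of models needs to be revisited.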

User Interaction: Next, think about how people will use your model. What will the interface look like? Whether it’s through an app or an API, the design of this interface is crucial for a smooth user experience.

Dive Deeper: Best Practices for Deploying Generative AI Applications

Tutorial – Generative AI: From Proof of Concept to Production

Launching a generative AI application into production is no easy task.

In this tutorial, Denys Linkov talks about seamlessly transitioning conversational AI from proof of concept to production-ready generative AI applications.

He covers strategies ranging from using Retrieval-Augmented Generation to evaluating large language models and crafting compelling business cases.

Comment below if you can relate!

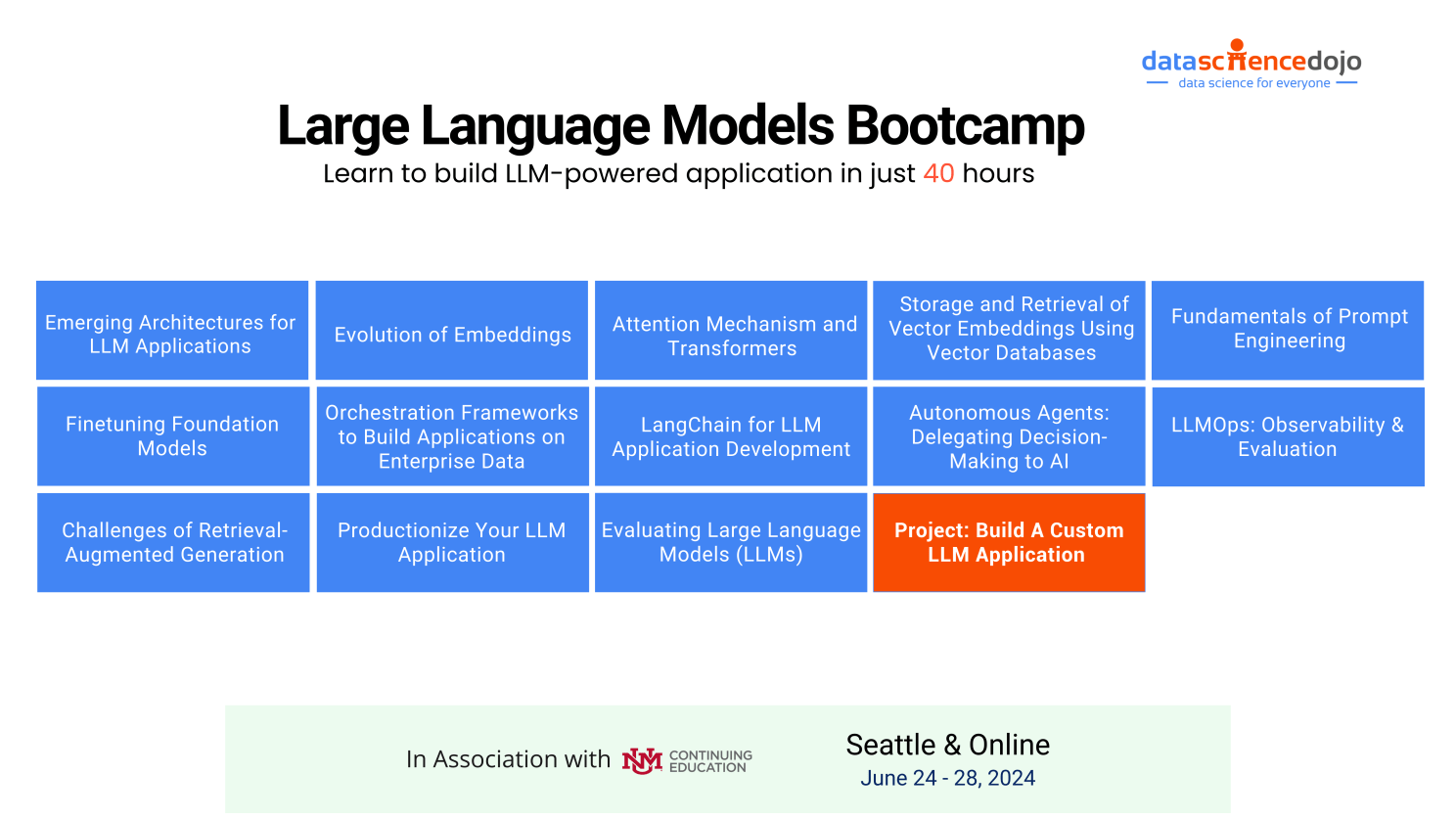

Bootcamp – Learn to Build Generative AI Applications

If you have a great AI idea but are confused about where to start, Data Science Dojo’s LLM bootcamp is where you ought to be.

It’s a comprehensive bootcamp led by experts in the field that empowers you with all the knowledge and expertise required to build LLM applications.

Here’s the curriculum of the bootcamp:

Learn more about the bootcamp by booking a meeting with an advisor now.

Upcoming AI Conferences

We’re excited to be a part of #DataCloudDevDay on June 6 in San Francisco! Get the latest in AI/ML, join a GenAI bootcamp, and connect with thousands of other builders. Register for free

Let’s end this week with some exciting things that happened in the AI world.

- Elon Musk’s newest venture, xAI, raises $6B funding. Read more

- Google won’t comment on a potentially massive leak of its search algorithm documentation. Read more

- OpenAI forms a safety committee as it starts training its latest artificial intelligence model.

- Mistral announces Codestral, a code-generation LLM it says outperforms all others. Read more

- Anthropic’s AI now lets you create bots that work for you. Read more