Large language models (LLMs) are a fascinating aspect of machine learning. Selective prediction in large language models refers to the model’s ability to decide, for each input, whether to return a prediction or to abstain because it is not confident enough that the prediction is correct.

This means that the model does not have to answer everything it is asked. For example, if asked a question, the model answers when it is confident in its answer and otherwise declines or signals its uncertainty, rather than guessing.

LLMs are built with deep learning techniques and trained on vast datasets of text. Here’s a simple breakdown of how they work:

- Architecture: LLMs use a transformer architecture, which is highly effective at handling sequential data like language. Its attention mechanism lets the model weigh each word against the other words in the input, enabling more accurate predictions and more coherent generated text.

- Training: They are trained on enormous amounts of text data. During this process, the model learns the patterns, structures, and nuances of human language. Training involves predicting the next word in a sentence or filling in missing words, which is how the model learns the syntax and semantics of language.

- Capabilities: Once trained, LLMs can perform a variety of tasks such as translation, summarization, question answering, and content generation. They can understand and generate text in a way that is remarkably similar to human language.
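The attention idea in the Architecture bullet can be illustrated with a minimal, dependency-free sketch of scaled dot-product self-attention. It assumes toy query, key, and value vectors supplied directly as lists; a real transformer derives them through learned projections and adds multiple heads, masking, and many stacked layers, all omitted here. The function names are illustrative, not taken from any library.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention (toy version, no learned weights).

    Each output row is a weighted average of all value vectors, where the
    weights reflect how well that position's query matches every key.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "tokens", each a 2-dimensional vector used as query, key, and value.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how strongly one token draws on every token in the input when building its context-aware representation.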

How Selective Prediction Works in LLMs

Selective prediction in the context of large language models (LLMs) is a technique aimed at enhancing the reliability and accuracy of the model’s outputs. Here’s how it works in detail:

Decision to Predict or Abstain

Selective prediction serves as a vital mechanism in LLMs, enabling the model to decide whether to make a prediction or abstain based on its confidence level. This decision-making process is crucial for ensuring that the model only provides answers when it is reasonably certain of their accuracy.

By implementing this approach, LLMs can significantly reduce the risk of delivering incorrect or irrelevant information, which is especially important in sensitive applications such as healthcare, legal advice, and financial analysis.

This careful consideration not only enhances the reliability of the model but also builds user trust by ensuring that the information provided is both relevant and accurate. Through selective prediction, LLMs can maintain a high standard of output quality, making them more dependable tools in critical decision-making scenarios.
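The predict-or-abstain decision can be sketched as a simple confidence threshold. This is a minimal illustration, assuming the model already provides a confidence score between 0 and 1 for its answer; how that score is produced and the 0.8 default threshold are assumptions, and `Prediction` and `selective_predict` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    answer: str
    confidence: float  # assumed: a score in [0, 1] from some upstream method


def selective_predict(pred: Prediction, threshold: float = 0.8) -> Optional[str]:
    """Return the answer if its confidence clears the threshold; otherwise abstain.

    Abstention is represented by None so the caller can decide what to show
    instead (for example, a referral to a human expert).
    """
    if not 0.0 <= pred.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return pred.answer if pred.confidence >= threshold else None


print(selective_predict(Prediction("Paris", 0.97)))  # Paris
print(selective_predict(Prediction("Lyon", 0.41)))   # None
```

In a sensitive domain such as healthcare, the threshold would usually be set higher, trading more abstentions for fewer wrong answers.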

Improving Reliability

The selective prediction mechanism plays a pivotal role in enhancing the reliability of LLMs by allowing them to abstain from making predictions when uncertainty is high. This capability is particularly crucial in fields where the repercussions of incorrect information can be severe.

For instance, in healthcare, an inaccurate diagnosis could lead to inappropriate treatment, potentially endangering patient lives. Similarly, in legal advice, erroneous predictions might result in costly legal missteps, while in financial forecasting, they could lead to significant economic losses.

By choosing to withhold responses in situations where confidence is low, LLMs uphold a higher standard of accuracy and trustworthiness. This not only minimizes the risk of errors but also fosters greater user confidence in the model’s outputs, making it a reliable tool in critical decision-making processes.
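The reliability gain from abstaining is commonly measured with two numbers: coverage (the fraction of inputs the model answers) and selective risk (the error rate among the answered inputs only). A minimal sketch, assuming per-example confidence scores and correctness labels from a labeled evaluation set; the data below are made up for illustration.

```python
def risk_coverage(confidences, correct, threshold):
    """Coverage and selective risk when answering only if confidence >= threshold.

    By convention here, risk is reported as 0.0 when nothing is answered.
    """
    answered = [ok for conf, ok in zip(confidences, correct) if conf >= threshold]
    coverage = len(answered) / len(confidences)
    risk = 1 - sum(answered) / len(answered) if answered else 0.0
    return coverage, risk


# Toy evaluation data (illustrative, not real results).
confidences = [0.95, 0.90, 0.60, 0.30]
correct = [True, True, False, False]

for t in (0.0, 0.5, 0.8):
    cov, risk = risk_coverage(confidences, correct, t)
    print(f"threshold={t:.1f}  coverage={cov:.2f}  risk={risk:.2f}")
```

On this toy data, raising the threshold from 0.0 to 0.8 halves coverage but lowers the error rate on answered questions from 50% to 0%, which is the trade-off selective prediction is built around.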

Self-Evaluation

Incorporating self-evaluation mechanisms into selective prediction allows LLMs to internally assess the likelihood of their predictions being correct. This self-assessment is vital for refining the model’s output and ensuring higher accuracy.

Experiments with models such as PaLM 2 and GPT-3 have shown that self-evaluation scores can significantly improve how well the model’s predictions align with correct answers. The model assesses its own confidence in each candidate answer, which lets it make informed decisions about when to predict.

By continuously evaluating its predictions, the model can adjust its strategies, leading to improved performance and reliability over time.
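One published way to obtain a self-evaluation score is to show the model its own answer, ask whether it is correct, and read off the probability it assigns to "Yes" (often called P(True)). The sketch below assumes you can get the model’s logits for the "Yes" and "No" continuations from whatever inference API you use; the prompt wording and function names are hypothetical, and this is not claimed to be how PaLM 2, GPT-3, or ASPIRE compute their scores.

```python
import math

# Hypothetical verification prompt; the wording is an assumption, not taken
# from PaLM 2, GPT-3, or ASPIRE.
VERIFY_TEMPLATE = (
    "Question: {question}\n"
    "Proposed answer: {answer}\n"
    "Is the proposed answer correct? Answer Yes or No:"
)


def build_verification_prompt(question: str, answer: str) -> str:
    """Fill the hypothetical template with a question and the model's own answer."""
    return VERIFY_TEMPLATE.format(question=question, answer=answer)


def self_eval_score(yes_logit: float, no_logit: float) -> float:
    """Probability of "Yes" after renormalizing over just the two options.

    Equivalent to a sigmoid of (yes_logit - no_logit); subtracting the max
    keeps the exponentials numerically stable.
    """
    m = max(yes_logit, no_logit)
    yes = math.exp(yes_logit - m)
    no = math.exp(no_logit - m)
    return yes / (yes + no)


prompt = build_verification_prompt("What is the capital of France?", "Paris")
# yes_logit / no_logit would come from the model scoring `prompt`;
# the numbers below are placeholders, not real model outputs.
score = self_eval_score(yes_logit=2.0, no_logit=-1.0)
print(prompt)
print(f"self-evaluation score: {score:.3f}")
```

The resulting score can then be compared against a threshold to decide whether the original answer is returned or withheld.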

Advanced Techniques like ASPIRE

Google’s ASPIRE framework represents an advanced approach to selective prediction, enhancing LLMs’ ability to make confident predictions. Rather than relying on a fixed heuristic, ASPIRE trains the model to evaluate its own answers and uses the resulting confidence score to determine when to provide a response and when to abstain.

This ensures that predictions are made only when there is a high probability of correctness. By implementing such advanced techniques, LLMs can improve their decision-making processes, resulting in more accurate and reliable outputs.

Selective Prediction in Applications

Selective prediction proves particularly beneficial in various applications, such as conformal prediction, multi-choice question answering, and filtering out low-quality predictions. In these contexts, the technique ensures that the model only delivers responses when it has a high degree of confidence.

This approach not only improves the quality of the output but also reduces the risk of disseminating incorrect information. By integrating selective prediction, LLMs can achieve a balance between providing valuable insights and maintaining accuracy, ultimately leading to more reliable and trustworthy AI systems.

This balance is crucial for enhancing the overall user experience and building trust in the model’s capabilities.
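For multi-choice question answering, a common pattern is to normalize the model’s scores over the listed options and answer only when one option holds enough of the probability mass. A minimal sketch, assuming the per-option log-probabilities have already been obtained from the model; the 0.6 threshold and the example scores are illustrative.

```python
import math


def answer_multiple_choice(option_logprobs, threshold=0.6):
    """Answer a multi-choice question only if one option is clearly preferred.

    option_logprobs maps option labels to the model's (assumed) log-probability
    scores. Returns (chosen_option_or_None, normalized_probabilities).
    """
    m = max(option_logprobs.values())
    exps = {opt: math.exp(lp - m) for opt, lp in option_logprobs.items()}
    total = sum(exps.values())
    probs = {opt: e / total for opt, e in exps.items()}
    best = max(probs, key=probs.get)
    return (best if probs[best] >= threshold else None), probs


# Illustrative scores, not real model outputs.
confident = {"A": -0.2, "B": -2.5, "C": -3.0, "D": -3.5}
torn = {"A": -1.0, "B": -1.1, "C": -3.0, "D": -3.0}
print(answer_multiple_choice(confident)[0])  # A
print(answer_multiple_choice(torn)[0])       # None
```

When two options are nearly tied, as in `torn`, no single option reaches the threshold, so the question is left unanswered instead of being decided by a coin flip.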

Example

Consider a language model used to answer trivia questions. It is prompted with the question “What is the capital of France?” Without selective prediction, the model would simply generate a response based on its training.

With selective prediction, the model first evaluates how confident it is in its answer. If it is highly confident (it knows that Paris is the capital), it proceeds with the response. If not, it may abstain or express uncertainty rather than provide a potentially incorrect answer.
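The example leaves open how the model evaluates its confidence. One simple proxy, sketched below, is to sample several answers to the same question and treat the fraction that agree as the confidence. This agreement heuristic is a swapped-in technique for illustration, not the only or canonical method; the sampled answers are hard-coded placeholders standing in for model outputs, and the 0.7 threshold is an assumption.

```python
from collections import Counter


def agreement_confidence(samples):
    """Return the most common answer among samples and the share that agree with it."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    keys = [s.strip().lower() for s in samples]  # light normalization
    top_key, count = Counter(keys).most_common(1)[0]
    # Report the answer in the casing of its first occurrence.
    answer = next(s.strip() for s in samples if s.strip().lower() == top_key)
    return answer, count / len(samples)


def answer_or_abstain(samples, threshold=0.7):
    answer, confidence = agreement_confidence(samples)
    return answer if confidence >= threshold else "I'm not sure."


# Placeholder samples standing in for several generations from a model.
print(answer_or_abstain(["Paris", "Paris", "paris", "Paris", "Lyon"]))  # Paris
print(answer_or_abstain(["1912", "1915", "1912", "1920"]))              # I'm not sure.
```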

Improvement in Response Quality

Selective prediction improves the quality of an LLM’s responses. Withholding low-confidence answers keeps likely misinformation out of the output, so the answers that are given are the ones the model is confident in. This increases the reliability of the model and builds trust in its outputs.

- Reduces Misinformation: By abstaining from answering when uncertain, selective prediction minimizes the risk of spreading incorrect information.

- Enhances Reliability: It improves the overall reliability of the model by ensuring that responses are given only when the model has high confidence in their accuracy.

- Better User Trust: Users can trust the model more, knowing that it avoids guessing when unsure, leading to higher quality and more dependable interactions.

Selective prediction, therefore, plays a vital role in enhancing the quality and reliability of responses in real-world applications of LLMs.
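Because response quality depends on where the confidence threshold sits, a practical step is to choose it on held-out validation data for a target error rate. A minimal sketch, assuming confidence scores and correctness labels for a validation set; it returns the lowest threshold (and therefore the highest coverage) whose validation error rate among answered examples stays within the target. A threshold picked this way is an empirical estimate and does not guarantee the same error rate on new inputs.

```python
def pick_threshold(confidences, correct, max_risk=0.05):
    """Lowest confidence threshold whose selective risk on validation data is <= max_risk.

    Returns None if no threshold meets the target.
    """
    pairs = sorted(zip(confidences, correct), key=lambda p: p[0], reverse=True)
    best = None
    errors = 0
    for i, (conf, ok) in enumerate(pairs, start=1):
        errors += 0 if ok else 1
        # Only evaluate at the end of a run of tied scores: a threshold of `conf`
        # answers every example with that score, not just some of them.
        if i < len(pairs) and pairs[i][0] == conf:
            continue
        if errors / i <= max_risk:
            best = conf
    return best


# Illustrative validation data, not real results.
val_conf = [0.95, 0.90, 0.80, 0.60, 0.30]
val_correct = [True, True, True, False, True]
print(pick_threshold(val_conf, val_correct, max_risk=0.0))   # 0.8
print(pick_threshold(val_conf, val_correct, max_risk=0.25))  # 0.3
```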

ASPIRE Framework for Selective Predictions

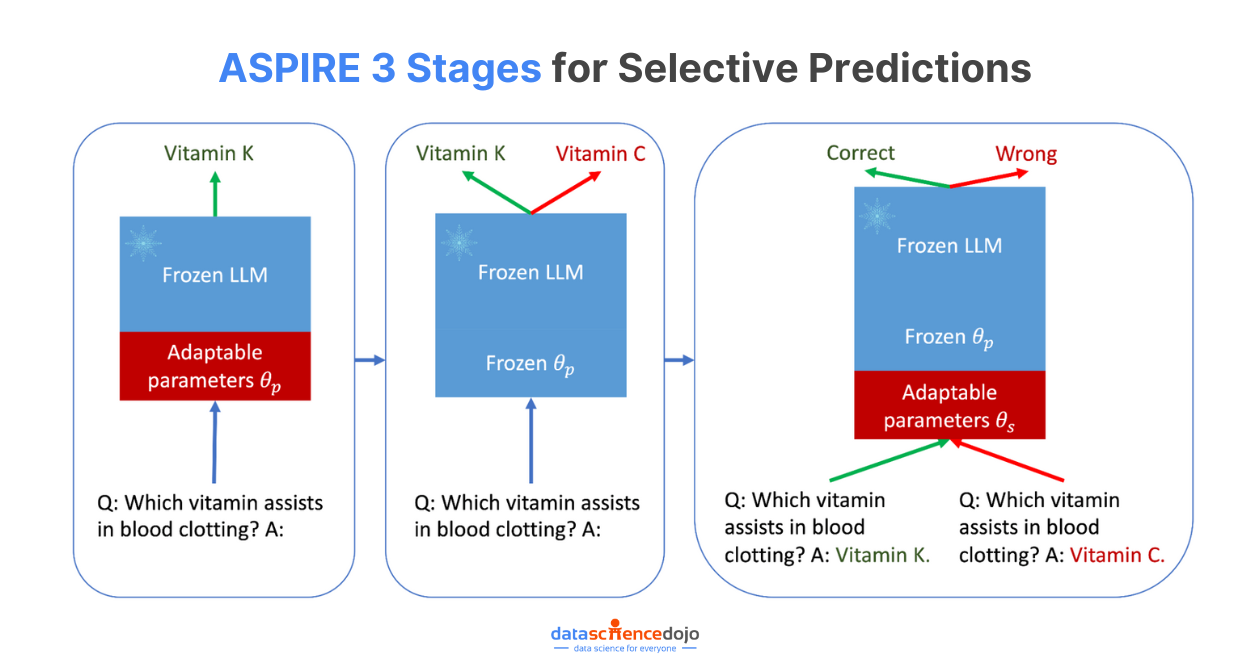

The ASPIRE framework, particularly in the context of selective prediction for Large Language Models (LLMs), is a sophisticated process designed to enhance the model’s prediction capabilities. It comprises three main stages:

Task-Specific Tuning

In this initial stage, the LLM is fine-tuned for specific tasks. This means adjusting the model’s parameters and training it on data relevant to the tasks it will perform. This step ensures that the model is well-prepared and specialized for the type of predictions it will make.

Answer Sampling

After tuning, the LLM engages in answer sampling. Here, the model generates multiple potential answers or responses to a given input. This process allows the model to explore a range of possible predictions rather than settle on the first plausible option.
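Answer sampling can be illustrated with temperature sampling over a toy set of candidate answers. A real LLM samples token by token rather than choosing among whole answers, and the candidates and scores here are hypothetical; the sketch only shows how drawing several samples, instead of keeping the single most likely answer, surfaces a range of possible predictions for the next stage to evaluate.

```python
import math
import random


def sample_answers(candidate_logits, n=5, temperature=1.0, seed=None):
    """Draw n answers from a toy distribution over whole candidate answers.

    candidate_logits maps each hypothetical candidate to an unnormalized score.
    Higher temperature flattens the distribution, so less likely candidates
    are sampled more often.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = random.Random(seed)
    answers = list(candidate_logits)
    scaled = [candidate_logits[a] / temperature for a in answers]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # softmax numerators
    return rng.choices(answers, weights=weights, k=n)


# Hypothetical candidates and scores for "What is the capital of France?"
candidates = {"Paris": 3.0, "Lyon": 0.5, "Marseille": 0.2}
samples = sample_answers(candidates, n=8, temperature=1.5, seed=42)
print(samples)
print("distinct answers to evaluate:", sorted(set(samples)))
```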

Self-Evaluation Learning

The final stage involves self-evaluation learning. The model evaluates the generated answers from the previous stage, assessing their quality and relevance. It learns to identify which answers are most likely to be correct or useful based on its training and the specific context of the question or task.
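Self-evaluation learning needs examples of the model’s own answers labeled as correct or incorrect. The sketch below builds such labels from sampled answers and a reference answer; it assumes a labeled training set and uses normalized exact match as the correctness criterion, a simplification chosen for illustration rather than the criterion specified by ASPIRE. `SelfEvalExample` and `build_self_eval_data` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SelfEvalExample:
    question: str
    answer: str
    label: int  # 1 = matches the reference answer, 0 = does not


def normalize(text: str) -> str:
    """Lowercase, trim, drop a trailing period, and collapse whitespace."""
    return " ".join(text.lower().strip().rstrip(".").split())


def build_self_eval_data(question, sampled_answers, reference):
    """Label each distinct sampled answer against the reference (assumed exact match)."""
    seen = set()
    data = []
    for ans in sampled_answers:
        key = normalize(ans)
        if key in seen:  # skip duplicates after normalization
            continue
        seen.add(key)
        data.append(SelfEvalExample(question, ans, int(key == normalize(reference))))
    return data


data = build_self_eval_data(
    "What is the capital of France?",
    ["Paris", "paris.", "Lyon", "Marseille"],
    reference="Paris",
)
for ex in data:
    print(ex.answer, ex.label)
```

A model trained on pairs like these learns to score its own answers, and that score is what drives the answer-or-abstain decision at inference time.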

Boosting Business Decisions with ASPIRE

Businesses and industries can benefit greatly from adopting selective prediction frameworks to support informed decision-making. Frameworks like ASPIRE help in several ways:

- Enhanced Decision Making: By using selective prediction, businesses can make more informed decisions. The framework’s focus on task-specific tuning and self-evaluation allows for more accurate predictions, which is crucial in strategic planning and market analysis.

- Risk Management: Selective prediction helps in identifying and mitigating risks. By accurately predicting market trends and customer behavior, businesses can proactively address potential challenges.

- Efficiency in Operations: In industries such as manufacturing, selective prediction can optimize supply chain management and production processes. This leads to reduced waste and increased efficiency.

- Improved Customer Experience: In service-oriented sectors, predictive frameworks can enhance customer experience by personalizing services and anticipating customer needs more accurately.

- Innovation and Competitiveness: Selective prediction aids in fostering innovation by identifying new market opportunities and trends. This helps businesses stay competitive in their respective industries.

- Cost Reduction: By making more accurate predictions, businesses can reduce costs associated with trial and error and inefficient processes.

Enhance Trust with LLMs

Selective prediction frameworks like ASPIRE offer businesses and industries a strategic advantage by enhancing decision-making, improving operational efficiency, managing risks, fostering innovation, and ultimately leading to cost savings.

Overall, the ASPIRE framework is designed to refine the predictive capabilities of LLMs, making them more accurate and reliable by focusing on task-specific tuning, exploratory answer generation, and self-assessment of generated responses.

In summary, selective prediction in LLMs is about the model’s ability to judge its own certainty and decide when to provide a response. This enhances the trustworthiness and applicability of LLMs in various domains.