Kaggle Days Dubai is a data science competition to improve your data science skillset. Here’s what you can expect to learn from the grandmasters.

Anyone interested in analytics or machine learning is almost certainly aware of Kaggle. Kaggle is the world’s largest community of data scientists, and it lets companies host prize-money competitions in which data scientists around the world compete. This has made it the largest online competition platform too. More recently, Kaggle has also begun organizing offline meetups around the globe.

One such initiative is Kaggle Days. So far, four Kaggle Days events have been held in cities around the world, the most recent one in Dubai. The format is a 2-day event: presentations, practical workshops, and brainstorming sessions on the first day, followed by an offline data science competition on the second.

As a machine learning enthusiast with intermediate experience in this field, I found participating in a Kaggle-hosted competition and teaming up with a Kaggle Grandmaster to compete against other grandmasters an enjoyable experience in its own right. I couldn’t reach the top ranks in the data science competition, but competing and networking with the dozens of grandmasters and other enthusiasts present during the 2-day event boosted my learning and abilities.

I wanted to make the best use of this opportunity: to learn as much as I could and to ask the grandmasters the right questions, so that I could benefit from their wisdom and learn the best ways to approach any data science problem. It was heartwarming to discover how supportive they were as they shared tricks and advice for reaching the top position in data science competitions and improving the performance of any machine learning project. In this blog post, I’d like to share the insights I gathered during my conversations and the noteworthy points I recorded during their presentations.

Strengthen your basic knowledge of Kaggle

My primary mentor during the offline competition was Yauhen Babakhin. Yauhen is a data scientist at H2O.ai and has worked on a range of domains including e-commerce, gaming, and banking, specializing in NLP-related problems.

He has an inspiring personality and is one of the youngest Kaggle Grandmasters. Fortunately, he is the grandmaster I got to network with the most. His profile dispelled my misconception that only someone with a doctoral degree can achieve the prestige of being a grandmaster.

During our conversations, the most significant advice that came from Yauhen was to strengthen our basic knowledge and have an intuition about various machine learning concepts and algorithms. One does not need to go extensively deep into these concepts or be extra knowledgeable to begin with. As he said, “Start learning a few important learning models, but get to know how they work!”

The ideal approach is to start with the basics and extend your knowledge along the way by building experience through competitions, especially the ones hosted on Kaggle. For most questions, Yauhen suggests, one must know what to search for on Google. This skill alone is an extremely handy tool for getting through most problems, even with limited experience relative to our competitors.

Furthermore, Yauhen emphasized how Kaggle single-handedly played a leading role in sharpening his skills. He stressed how, throughout this period, challenges pushed him to perform better and learn more.

It was such challenges that provoked him to learn beyond his current knowledge and explore areas beyond his specialization, such as computer vision, said the winner of the $100,000 TGS Salt Identification Challenge. It was these challenges that prompted him to dive into various areas of machine learning, and it was this trick that he suggested we use to accelerate career growth.

Through this conversation, I learned the importance of going broad. Although Yauhen insisted on selecting problems that span a wide range and cover various aspects of data science, he also suggested keeping that breadth aligned with our career pursuits, and asking ourselves whether we even need to target something beyond what we are ever going to use.

Lastly, the grandmaster, who is in his late 20s, also wanted us to practice with deep learning models, as doing so lets us target a broad set of problems. He advised discovering the best approaches used by previous winners and combining them in our own projects or competition submissions. These approaches can be found in blogs, kernels, and forum discussions.

Remain persistent

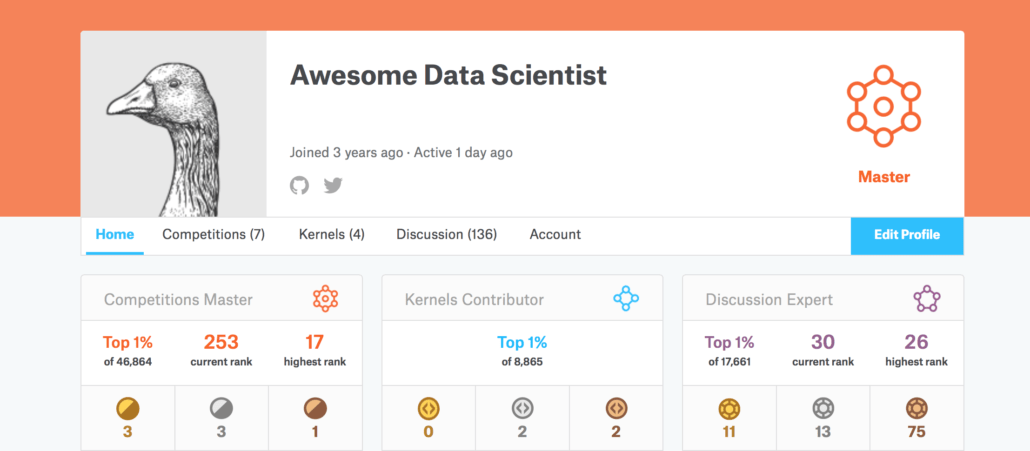

My next detailed interaction was with Abhishek Thakur. The conversation prompted me to ask as many questions as I could, as every suggestion Abhishek gave seemed wise and encouraging. Abhishek is one of the rare people to hold two Kaggle Grandmaster titles, in competitions and in discussions. He is the chief data scientist at boost.ai and once attained the 3rd rank in global competitions at Kaggle.

What made his profile more convincing was Abhishek’s accelerated growth from a novice to a grandmaster within a year and a half. He started his career in machine learning from scratch, and he started it on Kaggle itself. Having begun with the lowest rank in competitions, Abhishek was adamant that Kaggle is the one platform a person can rely on completely to catapult their growth within such a short time.

However, as Abhishek repeatedly said, it all required continuous persistence. Even after being placed in the bottom ranks initially, Abhishek carried on, and his journey demonstrated how persistence was the key to his success. When I asked about the most significant tools that led to the gold medal in his recent competition, Thakur strongly emphasized feature engineering.

He insisted that this step was the most important of all in distinguishing the winner. Similarly, he suggested that a thorough exploratory data analysis can assist one in finding those magical features that can enable one to get the winning results.

Like other grandmasters who have attained massive success in this domain, Abhishek also emphasized improving one’s personal profile through Kaggle. Not only does it offer a distinct and fast-paced learning experience, as it did for all the grandmasters at the event, but its rankings are also recognized and valued across various industries and by major employers. Abhishek described how it brought him numerous lucrative job offers over time.

Start instantly with data science competitions

On the first day, I was able to attend Pavel Pleskov’s workshop on ‘Building The Ultimate Binary Classification Pipeline’. Based in Russia, Pavel currently works for an NLP startup, PointAPI, and was once ranked number 2 among Kagglers globally. The workshop was fantastic, but the conversations during and after the workshop intrigued me the most as they mostly comprised tips for beginners.

Pavel, who quit his profitable business to compete on Kaggle, insisted on the ‘do what you love’ strategy, as it leads to more life satisfaction and profit. He told us how he started with some of the most popular online courses on machine learning but found them lacking in practical skills and homework, a gap he filled using Kaggle.

For beginners, he strongly recommended not to put off Kaggle contests or wait until the completion of courses, but to start instantly. According to him, practical experience on Kaggle is more important than any other course assignment.

Pavel also shared some noteworthy tips on winning such competitions. Many students approach Kaggle as an academic problem, start creating fancy architectures, and ultimately do not score well; Pavel instead approaches a problem with a business mindset. He increased his probability of success by leveraging resources, for example by including people on his team who had hardware such as a GPU, or by merging his team with another to improve the overall score.

Upon an inquiry related to keeping the right balance between taking time to build theoretical knowledge and using that time to generate new ideas, Pavel advised looking at forum threads on Kaggle. They can help you know how much theoretical knowledge you are missing while competing with others.

Pavel is an avid user of LightGBM and CatBoost models, which he credits for his superior rankings in competitions. One of his suggestions is to use the fast.ai library, which, despite receiving many critical reviews, he has found flexible and useful and usually keeps under consideration.

Hunt for ideas and rework them

Because time was limited during the 2-day event, I heard less from another young grandmaster from Russia, Pavel Ostyakov, who coincidentally shares his first name with his fellow Russian grandmaster. Remarkably, Pavel was still an undergraduate student at the time and had been working for Yandex and Samsung AI for the past couple of years.

He brought a distinct set of advice that can prove extremely useful when one is targeting gold in data science competitions. He emphasized writing clean code that can be reused in the future and that allows easy collaboration with teammates, a practice that is usually overlooked and later causes trouble for participants. He also insisted on reading as many Kaggle forums as one can.

This means reading not just the forums for the competition at hand but also those of other data science competitions, since most of them are similar. Apart from searching for workable solutions, Pavel suggested also looking for ideas that failed. As he recommended, one should try using (and reworking) those failed ideas, as there is a chance they may work.

Pavel also pointed out that, to surpass other competitors, reading research papers and implementing their solutions could increase your chances of success. Throughout, he stressed having the mindset that anyone can achieve gold in a competition, even with limited experience relative to others.

Experiment with diverse strategies

Other noteworthy tips and ideas that I collected while mingling with grandmasters and attending their presentations included those from Gilberto Titericz (Giba), the grandmaster from Brazil with 45 gold medals! When I spoke with Giba in person, he repeatedly used the keyword ‘experiment’ and insisted that it is always important to experiment with new strategies, methods, and parameters. This is one simple, although tedious, way to learn quickly and get great results.

Giba also proposed that, to attain top performance, one must build models using different viewpoints of the data. This diversity can come from feature engineering, from using different training algorithms, or from using different transformations. Therefore, one must explore all possibilities.

Furthermore, Giba suggested that fitting a model with default hyperparameters is good enough to start a data science competition and establish a benchmark score to improve on. Regarding teaming up, he repeated that diversity is the key here as well, and that choosing someone who thinks similarly to you is not a good move.

A great piece of advice that came from Giba was to blend models. Combining models can help improve the performance of the final solution, especially if the models’ predictions have a low correlation with one another. A blend can be something as simple as a weighted average of the predictions. For instance, non-linear models like Gradient Boosting Machines blend very well with neural network-based models.
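The weighted-average blend described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not Giba’s actual pipeline: the labels, the two prediction lists (`gbm_pred`, `nn_pred`), the equal weights, and the helper names `weighted_blend`, `mse`, and `pearson` are all made up for the example.

```python
from math import sqrt


def weighted_blend(predictions, weights):
    """Return the weighted average of several models' predictions.

    predictions: one list of predictions per model, all the same length.
    weights: one non-negative weight per model (normalized internally).
    """
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Normalize so the blend stays on the same scale as the inputs.
    norm = [w / total for w in weights]
    return [
        sum(w * p for w, p in zip(norm, row))
        for row in zip(*predictions)  # one row = every model's guess for a sample
    ]


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson(a, b):
    """Pearson correlation between two equally long sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


# Hypothetical data: true labels and the outputs of two different models.
y_true = [1.0, 0.0, 1.0, 1.0, 0.0]
gbm_pred = [0.9, 0.4, 0.6, 0.8, 0.1]  # stand-in for a gradient boosting model
nn_pred = [0.6, 0.1, 0.9, 0.7, 0.4]   # stand-in for a neural network

blended = weighted_blend([gbm_pred, nn_pred], weights=[0.5, 0.5])

# Correlation between the two models' errors; lower means more to gain.
gbm_err = [p - t for p, t in zip(gbm_pred, y_true)]
nn_err = [p - t for p, t in zip(nn_pred, y_true)]

print("GBM MSE:  ", mse(y_true, gbm_pred))
print("NN MSE:   ", mse(y_true, nn_pred))
print("Blend MSE:", mse(y_true, blended))
print("Error correlation:", pearson(gbm_err, nn_err))
```

On this toy data the blend scores a lower error than either model alone, because the two models make their mistakes on different samples. In a real competition, the weights would be tuned on validation data rather than fixed at 0.5 each.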

Conclusion

Considering the key takeaways from the suggestions given by these grandmasters, and having observed how they competed during the offline data science competition, I noted that beginners in data science should put their effort into trying as many different methodologies as they can. Moreover, taken together, the recommendations above stress the significance of taking part in online data science competitions no matter how much knowledge or experience one possesses.

I also noted that most of the experienced data scientists were fond of ensemble techniques, and that one of their most prominent methods was creating new features out of existing ones. This is what the winners of the offline data science competition cited as their strategy for success. In conclusion, meetups of this sort enable one to interact with the top minds in the field and gain the most within a short time, as I fortunately did.