Integrating generative AI into edge devices is a significant challenge on its own.

Advanced models have to run efficiently within the limited computational power and memory of smartphones and computers.

Ensuring these models operate swiftly without draining battery life or overheating devices adds to the complexity.

Additionally, safeguarding user privacy is crucial, requiring AI to process data locally without relying on cloud servers.

Apple has addressed these challenges with the introduction of Apple Intelligence.

This new system brings sophisticated AI directly to devices while maintaining high privacy standards.

Let’s explore the cutting-edge technology that powers Apple Intelligence and makes on-device AI possible.

Core Features of Apple Intelligence

AI-Powered Tools for Enhanced Productivity

Apple devices like iPhones, iPads, and Macs are now equipped with a range of AI-powered tools designed to boost productivity and creativity. These tools fall into two main areas:

- Writing and Communication: Apple’s predictive text features have evolved to understand context better and offer more accurate suggestions. This makes writing emails or messages faster and more intuitive. The AI also integrates with communication apps to suggest replies based on incoming messages, saving time and keeping conversations flowing.

- Image Creation and Editing: The Photos app uses advanced machine learning to organize photos intelligently and suggest edits. For creators, features like Live Text in photos and videos use AI to detect text in images, allowing users to interact with it as if it were typed text. This is particularly useful for quickly extracting information without manual data entry.

Equipping Siri with Advanced AI Capabilities

Apple Intelligence has taken Siri, Apple’s virtual assistant, to the next level, making it smarter and more versatile than ever. These exciting upgrades are designed to help Siri become a more proactive and helpful assistant, seamlessly working across all your Apple devices.

- Richer Language Understanding: Siri’s ability to understand and process natural language has been significantly enhanced. This improvement allows Siri to handle more complex queries and offer more accurate responses, mimicking a more natural conversation flow with the user.

- On-Screen Awareness: Siri can now understand context from what is displayed on the screen. This lets users make requests about the content they are currently viewing without having to spell everything out, making interactions smoother and more intuitive.

- Cross-App Actions: Perhaps one of the most significant updates is Siri’s enhanced capability to perform actions across multiple apps. For example, you can ask Siri to book a ride through a ride-sharing app and then send the ETA to a friend via a messaging app, all through voice commands. This level of integration across different platforms and services simplifies complex tasks, turning Siri into a powerful tool for multitasking.

Technical Innovations Behind Apple Intelligence

Apple’s strategic deployment of AI capabilities across its devices is underpinned by significant technical innovations that ensure both performance and user privacy are optimized.

These advancements are particularly evident in its dual-model architecture, its novel post-training algorithms, and various optimization techniques that improve efficiency and accuracy.

Dual Model Architecture: Balancing On-Device and Server-Based Processing

Apple employs a sophisticated approach known as dual model architecture to maximize the performance and efficiency of AI applications.

This architecture cleverly divides tasks between on-device processing and server-based resources, leveraging the strengths of each environment:

- On-Device Processing: This handles tasks that need an immediate response or involve sensitive data that must remain on the device. The on-device model, a ~3 billion parameter language model, is fine-tuned to execute everyday tasks efficiently. It excels at writing and refining text and summarizing notifications, among other tasks, while image creation is handled by a separate image-generation model, keeping AI interactions fast and responsible.

- Server-Based Processing: More complex requests that exceed the on-device model’s capacity are sent to a larger server-based model, where Apple can use more powerful computing resources. These requests run on Apple silicon servers through Private Cloud Compute, which extends the privacy protections of the device into the cloud.

The synergy between these two processing sites allows Apple to optimize performance and battery life while maintaining strong data privacy protections.
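
To make the division of labor concrete, the Python sketch below shows one way a request router could choose between the two models. It is a toy policy under our own assumptions, not Apple’s routing logic: the names `Target`, `Request`, `route`, and `ON_DEVICE_WORD_BUDGET`, the two request flags, and the 512-word threshold are all hypothetical, and a whitespace word count stands in for real tokenization.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    SERVER = "server"


@dataclass
class Request:
    prompt: str
    # Hypothetical flags a caller could attach to a request.
    must_stay_on_device: bool = False  # sensitive data that must never leave the device
    needs_large_model: bool = False    # task known to exceed the small model's ability


# Hypothetical budget: prompts longer than this many words go to the server model.
ON_DEVICE_WORD_BUDGET = 512


def route(req: Request) -> Target:
    """Toy routing policy: privacy first, then capability, then default to local."""
    if req.must_stay_on_device:
        return Target.ON_DEVICE
    # Whitespace word count is a crude stand-in for a real tokenizer.
    if req.needs_large_model or len(req.prompt.split()) > ON_DEVICE_WORD_BUDGET:
        return Target.SERVER
    return Target.ON_DEVICE


if __name__ == "__main__":
    print(route(Request("Summarize this notification")))  # Target.ON_DEVICE
    print(route(Request("Draft a long report", needs_large_model=True)))  # Target.SERVER
```

Checking the privacy flag first means a sensitive request stays local even when it is long, at the cost of being served by the smaller model; a real system would weigh that trade-off with far richer signals.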

Novel Post-Training Algorithms

Beyond the initial training phase, Apple has implemented post-training algorithms to enhance the instruction-following capabilities of its AI models.

These algorithms refine the model’s ability to understand and execute user commands more accurately, significantly improving user experience:

- Rejection Sampling Fine-Tuning Algorithm with Teacher Committee: One of the post-training algorithms is rejection sampling fine-tuning guided by a committee of expert models (teachers). The model generates candidate responses, the teacher committee judges them, and only the responses the committee accepts are kept for fine-tuning. Because the model is trained only on these vetted outputs, it adopts the most effective behaviors and responses, which reinforces the desired outcomes and improves its ability to follow instructions accurately.

- Reinforcement Learning from Human Feedback Algorithm: Another cornerstone of Apple Intelligence’s post-training is a Reinforcement Learning from Human Feedback (RLHF) algorithm. It brings human judgments into the training loop, using mirror descent policy optimization together with a leave-one-out advantage estimator, which scores each sampled response against the average reward of the other responses to the same prompt. By learning directly from human feedback, the model refines its responses so they are more accurate, contextually relevant, and genuinely useful. The RLHF algorithm is instrumental in aligning the model’s outputs with human preferences, making each interaction more intuitive and effective.

- Error Correction Algorithms: These algorithms are designed to identify mistakes after deployment and learn from them. By analyzing interactions over time, the model is meant to offer increasingly accurate responses to user queries.
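
The first two algorithms share a starting point: sample several candidate responses per prompt and score them. The Python sketch below illustrates that shared step, a rejection-sampling filter that keeps only committee-approved responses, and the standard leave-one-out advantage estimate used as a baseline in policy-gradient RL. It is not Apple’s implementation: the two teachers are toy heuristics standing in for expert models, averaging their scores and the fixed acceptance threshold are our assumptions, all function names are hypothetical, and the actual fine-tuning step and mirror descent policy update are not shown.

```python
from statistics import mean
from typing import Callable, List, Sequence, Tuple

# A "teacher" scores a (prompt, response) pair; higher is better.
Teacher = Callable[[str, str], float]


def committee_score(prompt: str, response: str, teachers: Sequence[Teacher]) -> float:
    """Average score across the committee (averaging is an assumption of this sketch)."""
    return mean(teacher(prompt, response) for teacher in teachers)


def rejection_sample(prompt: str, candidates: Sequence[str],
                     teachers: Sequence[Teacher], threshold: float) -> List[Tuple[str, str]]:
    """Keep only candidates the committee accepts; these pairs would become fine-tuning data."""
    return [(prompt, c) for c in candidates
            if committee_score(prompt, c, teachers) >= threshold]


def leave_one_out_advantages(rewards: Sequence[float]) -> List[float]:
    """A_i = r_i - mean(r_j for j != i): each sample's baseline is its siblings' average."""
    k = len(rewards)
    if k < 2:
        raise ValueError("need at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


# Two toy teachers standing in for real expert models.
def prefers_concise(prompt: str, response: str) -> float:
    return 1.0 if len(response.split()) <= 8 else 0.0


def stays_on_topic(prompt: str, response: str) -> float:
    topic = prompt.split()[-1].lower()
    return 1.0 if topic in response.lower() else 0.0


if __name__ == "__main__":
    prompt = "Summarize the meeting"
    candidates = [
        "The meeting covered the Q3 roadmap.",
        "I cannot help with that.",
        "The meeting was long and covered many things that people discussed at length.",
    ]
    teachers = [prefers_concise, stays_on_topic]
    print(rejection_sample(prompt, candidates, teachers, threshold=0.75))
    rewards = [committee_score(prompt, c, teachers) for c in candidates]
    print(leave_one_out_advantages(rewards))  # [0.5, -0.25, -0.25]
```

Here the committee keeps only the first candidate. The leave-one-out advantages always sum to zero across a prompt’s samples, so responses that beat their siblings are pushed up during the policy update and the rest are pushed down.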

Optimization Techniques for Edge Devices

To ensure that AI models perform well on hardware-limited edge devices, Apple has developed several optimization techniques that enhance both efficiency and accuracy:

- Low-Bit Palettization: This technique compresses a model’s weights by grouping them into a small palette (a lookup table) of representative values and storing each weight as a low-bit index into that palette. Reducing the bit-width of the weights cuts the memory the model needs and the amount of data moved during inference, which speeds up computation while largely preserving accuracy. This is particularly important for devices with limited memory, processing power, or battery life.

- Shared Embedding Tensors: Apple shares its vocabulary embedding table between the model’s input and output. The same tensor that converts input tokens into vectors is reused to turn the model’s final hidden states back into scores over the vocabulary, so the model stores one table instead of two. This reduces the model’s memory footprint and helps speed up processing on edge devices.
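
Palettization is commonly implemented by clustering a tensor’s weights and storing, for each weight, only the index of its cluster. The Python sketch below illustrates that idea with plain 1-D k-means over a single palette. The function names, the evenly spaced initialization, the 16-bit palette entries, and the iteration count are our assumptions; this is not Apple’s compression pipeline, whose details the article does not give.

```python
from typing import List, Tuple


def _nearest(w: float, palette: List[float]) -> int:
    """Index of the palette entry closest to weight w."""
    return min(range(len(palette)), key=lambda c: abs(w - palette[c]))


def palettize(weights: List[float], n_bits: int, iters: int = 20) -> Tuple[List[float], List[int]]:
    """Compress weights into a 2**n_bits-entry palette using 1-D k-means.

    Returns (palette, indices); each index fits in n_bits bits.
    """
    if n_bits < 1:
        raise ValueError("n_bits must be at least 1")
    k = 2 ** n_bits
    lo, hi = min(weights), max(weights)
    # Start with centroids spread evenly across the weight range.
    palette = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iters):
        # Assignment step: map every weight to its nearest centroid.
        indices = [_nearest(w, palette) for w in weights]
        # Update step: move each centroid to the mean of the weights assigned to it.
        for c in range(k):
            members = [w for w, i in zip(weights, indices) if i == c]
            if members:
                palette[c] = sum(members) / len(members)
    # Final assignment against the updated palette.
    indices = [_nearest(w, palette) for w in weights]
    return palette, indices


def depalettize(palette: List[float], indices: List[int]) -> List[float]:
    """Rebuild approximate weights by looking each index up in the palette."""
    return [palette[i] for i in indices]


def storage_bits(n_weights: int, n_bits: int, palette_entry_bits: int = 16) -> int:
    """Bits for the index array plus one palette stored at palette_entry_bits per entry."""
    return n_weights * n_bits + (2 ** n_bits) * palette_entry_bits


if __name__ == "__main__":
    weights = [-1.0, -0.98, -0.3, -0.32, 0.31, 0.29, 1.0, 0.99]
    palette, idx = palettize(weights, n_bits=2)
    approx = depalettize(palette, idx)
    print(max(abs(a - b) for a, b in zip(weights, approx)))  # small, about 0.01
    print(storage_bits(len(weights), 2), "bits vs", len(weights) * 16)  # 80 bits vs 128
```

At the scale of the ~3 billion parameter on-device model described earlier, 16-bit weights would need about 6 GB, whereas 4-bit indices need about 1.5 GB plus palette overhead. Production schemes typically keep many palettes (for example per tensor or per group of weights), and Apple’s Core ML Tools ships palettization utilities, so hand-written k-means like this is only for illustration.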

These technical strategies are part of Apple’s broader commitment to balancing performance, efficiency, and privacy. By continually advancing these areas, Apple ensures that its devices are not only powerful and intelligent but also trusted by users for their data integrity and security.
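
Embedding sharing of this kind is usually implemented as weight tying, and Apple has described using shared input and output vocabulary embedding tables. The Python sketch below shows the mechanics with a tiny random table: one matrix serves both as the input lookup and as the output projection. The class and function names, the table sizes, and the initialization are hypothetical and chosen only for illustration.

```python
import random
from typing import List

Vector = List[float]


class TiedEmbedding:
    """One vocab_size x dim table used both to embed inputs and to score outputs."""

    def __init__(self, vocab_size: int, dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.table: List[Vector] = [
            [rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)
        ]

    def embed(self, token_id: int) -> Vector:
        # Input side: a token's embedding is its row of the table.
        return self.table[token_id]

    def logits(self, hidden: Vector) -> Vector:
        # Output side: score every vocabulary entry as a dot product with the
        # same rows (hidden @ table^T), so no separate output matrix is stored.
        return [sum(h * e for h, e in zip(hidden, row)) for row in self.table]


def embedding_params(vocab_size: int, dim: int, tied: bool) -> int:
    """Parameters in the input and output embedding matrices combined."""
    return vocab_size * dim * (1 if tied else 2)


if __name__ == "__main__":
    emb = TiedEmbedding(vocab_size=10, dim=4)
    scores = emb.logits(emb.embed(3))
    print(len(scores))  # 10: one score per vocabulary entry
    # Illustrative sizes only, not Apple's actual vocabulary or model width.
    print(embedding_params(50_000, 4_096, tied=False))  # 409600000
    print(embedding_params(50_000, 4_096, tied=True))   # 204800000
```

Because the tied table is counted once instead of twice, the saving equals one full vocabulary-by-width matrix, which is a larger share of the total for small models such as those that run on a phone.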

Apple’s Smart Move with On-Device AI

Apple’s recent unveilings reveal a strategic pivot towards more sophisticated on-device AI capabilities, distinctively emphasizing user privacy.

This move is not just about enhancing product offerings; it is a deliberate step to reposition Apple in an AI landscape that has been dominated by rivals like Google and Microsoft.

- Proprietary Technology and User-Centric Innovation: Apple’s approach centers on proprietary technologies that enhance the user experience without compromising privacy. Through its dual-model architecture, Apple keeps sensitive operations like facial recognition and personal data processing on-device, drawing on the power of Apple silicon, including its A-series and M-series chips. This method improves responsiveness by reducing latency and strengthens user trust by minimizing data exposure.

- Strategic Partnerships and Third-Party Integrations: Apple’s strategy includes partnerships and integrations with other AI leaders like OpenAI, allowing users to access advanced AI features such as ChatGPT directly from their devices. This integration points toward a future where Apple devices could serve as hubs for powerful third-party AI applications, enhancing the user experience and expanding Apple’s ecosystem.

This strategy is not just about improving what Apple devices can do; it’s also about making sure you feel safe and confident about how your data is handled.

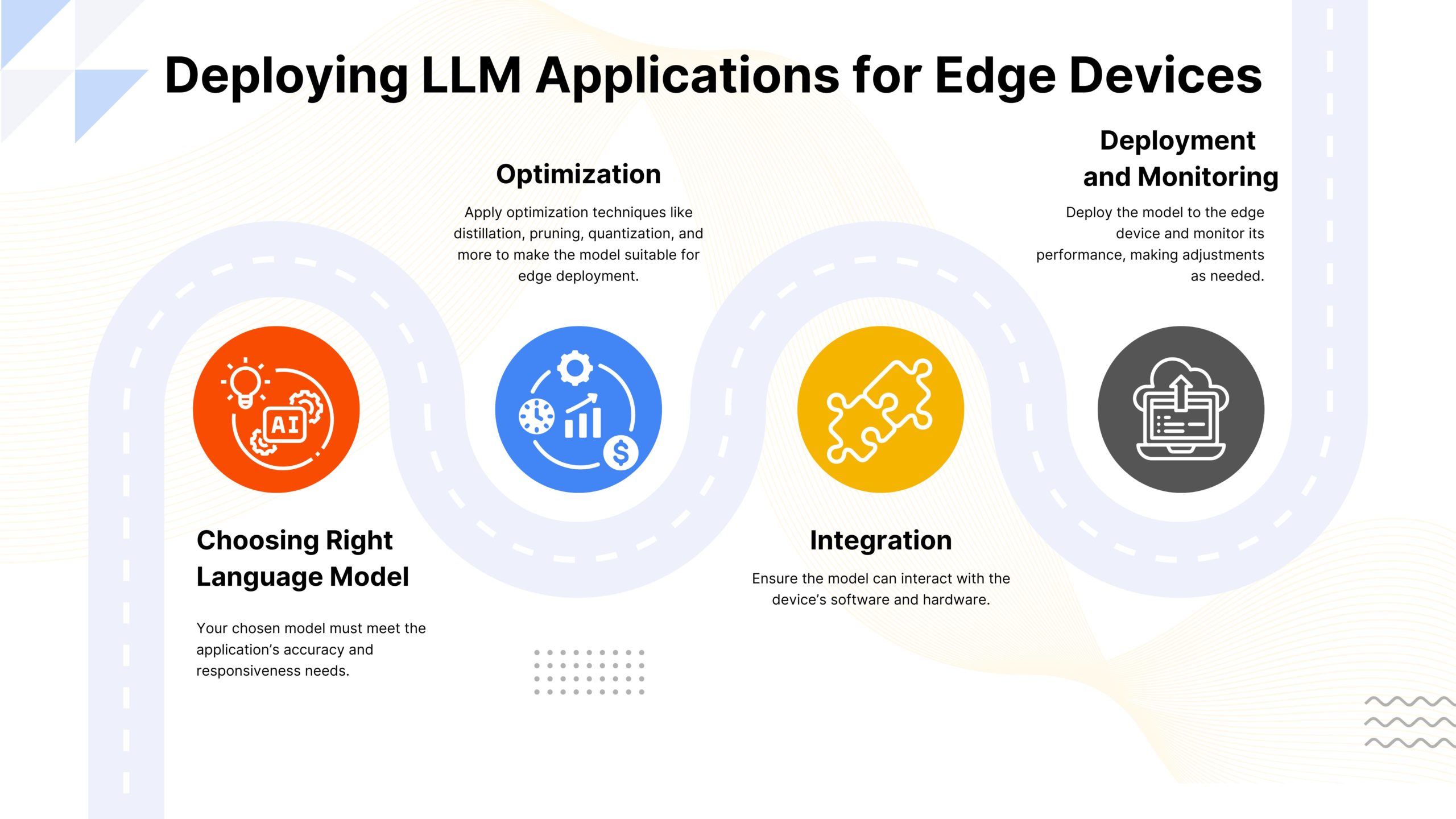

How to Deploy On-Device AI Applications

Interested in developing on-device AI applications?

Here’s a guide to navigating the essential choices you’ll face. This includes picking the most suitable model, applying a range of optimization techniques, and using effective deployment strategies to enhance performance.

Read: Roadmap to Deploy On-Device AI Applications

Where Are We Headed with Apple Intelligence?

With Apple Intelligence, we’re headed towards a future where AI is more integrated into our daily lives, enhancing functionality while prioritizing user privacy.

Apple’s approach ensures that sensitive data remains on our devices, enhancing trust and performance.

By collaborating with leading AI companies such as OpenAI, Apple is poised to redefine how we interact with our devices, making them smarter and more responsive without compromising on security.