Prompt engineering is the process of designing and refining the prompts given to large language models (LLMs) so that they generate the desired output.

The beginning of prompt engineering

Prompt engineering as a named practice is recent, but its roots can be traced back to the early days of artificial intelligence, when researchers were experimenting with ways to get computers to understand and respond to natural language.

One of the earliest precursors was the work of Terry Winograd, who developed a system called SHRDLU at MIT around 1970. SHRDLU could answer questions and carry out commands about a simple blocks world. It relied on hand-written parsing and reasoning rules rather than prompts in the modern sense, but it was an early demonstration of a computer acting on typed natural-language instructions and using context to interpret them.

In the 1980s, neural network research advanced as backpropagation was popularized as a training technique. Backpropagation lets a network adjust its weights based on the errors in its output, in effect learning from its mistakes. LLMs did not exist yet, but this technique later made it possible to train models on much larger datasets, leading to significant performance improvements.

In the 2010s, the development of deep learning led to a new wave of progress. Deep learning models can learn much more complex relationships between words than earlier models could, and the Transformer architecture introduced in 2017 paved the way for today's LLMs. This made it possible to write prompts that are much more effective at controlling a model's output, and prompt engineering emerged as a practice in its own right.

Today, prompt engineering is a critical tool for researchers and developers who are working with LLMs. It is used in a wide variety of applications, including machine translation, text summarization, and creative writing.

Have you tried any of these fun prompts?

- In the field of machine translation, one researcher tried to get an LLM to translate the phrase “I am a large language model” into French. The LLM responded with “Je suis un grand modèle linguistique”, which is a grammatically correct translation, although “grand modèle de langage” is the more common French term for a large language model.

- In the field of text summarization, one researcher tried to get an LLM to summarize the plot of the movie “The Shawshank Redemption”. The LLM responded with a summary that was surprisingly accurate, but it also included a number of jokes and puns.

- In the field of creative writing, one researcher tried to get an LLM to write a poem about a cat. The LLM responded with a poem that was both funny and touching.

These are just a few examples of the many funny prompts that people have tried with LLMs. As LLMs become more powerful, it is likely that we will see even more creative and entertaining uses of prompt engineering.

Some lesser-known facts about prompt engineering

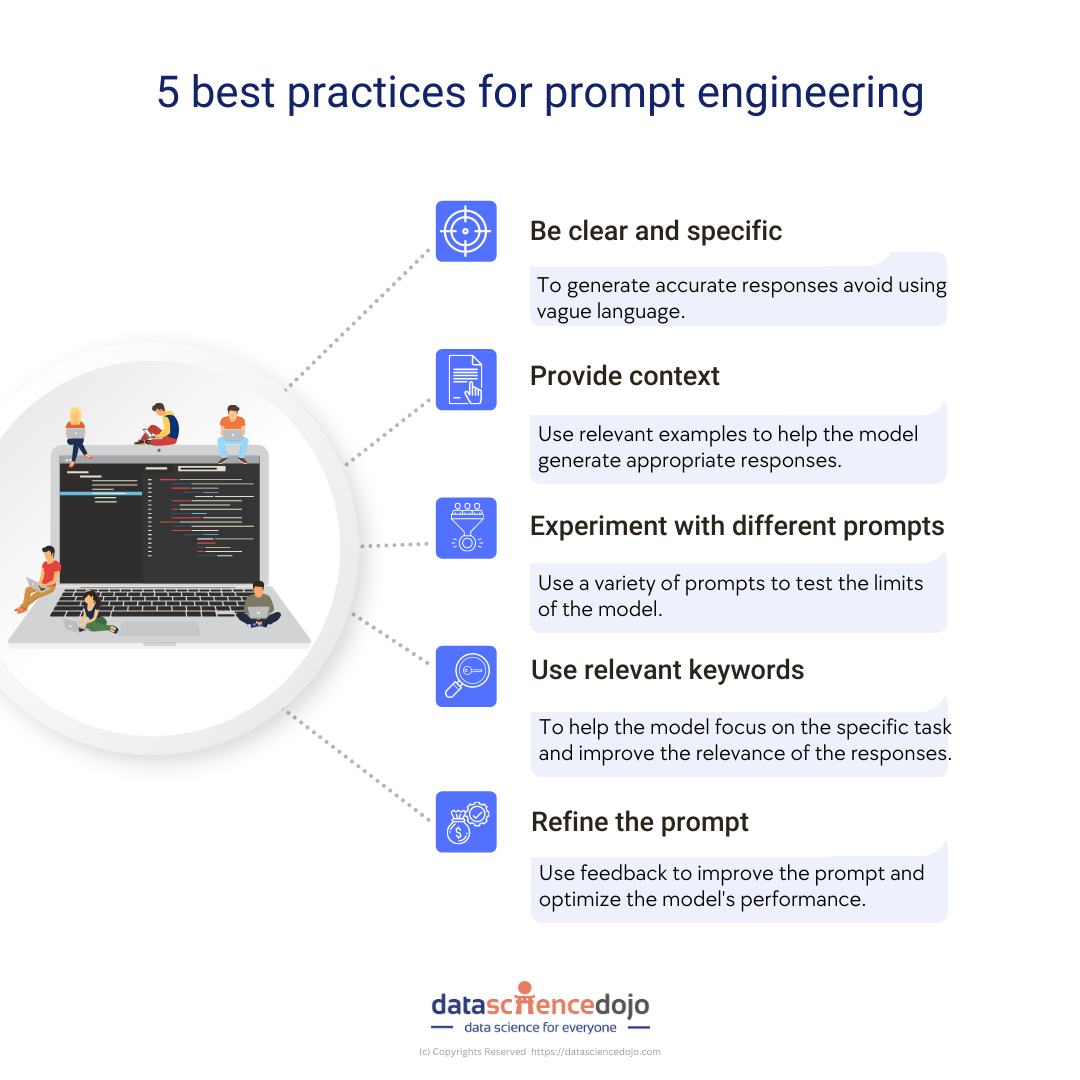

- It is a relatively new field, and there is still much that we do not know about it. However, it is a rapidly growing field, and there are many exciting new developments happening all the time.

- The effectiveness of a prompt can depend on a number of factors, including the specific LLM being used, the data that the LLM was trained on, and the context in which the prompt is used.

- There are a number of different techniques that can be used for prompt engineering, and the best technique to use will depend on the specific application.

- It can be used to control a wide variety of aspects of the output of an LLM, including the length, style, and content of the output.

- It can be used to generate creative and interesting text, as well as to solve complex problems.

- It is a powerful tool that can be used to unlock the full potential of LLMs.
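
As a rough illustration of how a prompt can steer length, style, and content at once, here is a minimal Python sketch. The `PromptSpec` class and `build_prompt` function are hypothetical names invented for this example, not part of any library, and the exact wording of the constraints is an assumption that you would tune for your own model.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the aspects of the output a prompt can steer."""
    task: str            # what the model should produce (content)
    style: str = ""      # e.g. "plain English"; empty means no style constraint
    max_words: int = 0   # 0 means no length limit is requested


def build_prompt(spec: PromptSpec) -> str:
    """Join the task and any optional constraints into a single prompt string."""
    parts = [spec.task.strip()]
    if spec.style:
        parts.append(f"Write in the following style: {spec.style}.")
    if spec.max_words > 0:
        parts.append(f"Use at most {spec.max_words} words.")
    return "\n".join(parts)


spec = PromptSpec(
    task="Summarize the plot of the movie The Shawshank Redemption.",
    style="plain English",
    max_words=80,
)
print(build_prompt(spec))
```

Keeping each constraint as a separate field makes it easy to change one aspect, such as the length limit, without rewriting the rest of the prompt.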

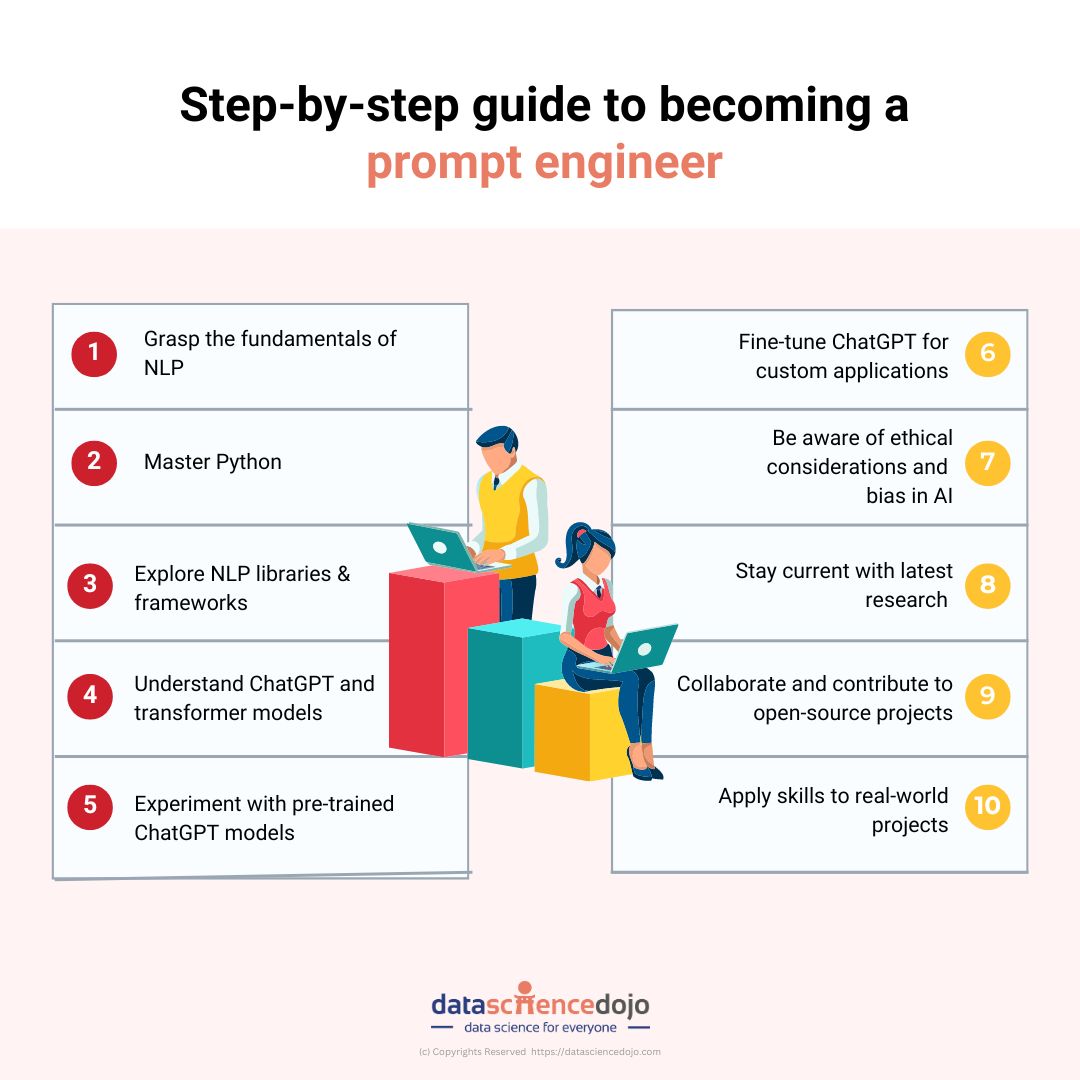

Here are some more specific, lesser-known facts about prompting:

- It is possible to use prompts to influence how creative an LLM's output is. For example, adding a phrase such as “in a creative way” to a prompt can push the model toward more inventive outputs.

- Prompts can be used to generate text that is consistent with a particular style. For example, adding the phrase “in the style of Shakespeare” to a prompt tends to produce outputs that are more Shakespearean in style.

- Prompts can be used to tackle complex problems. For example, starting a prompt with “prove that” can lead the LLM to attempt a mathematical proof, although the result still needs to be checked for correctness.

- It is a complex and challenging task. There is no one-size-fits-all approach to prompt engineering, and the best way to create effective prompts will vary depending on the specific application.

- It is a rapidly evolving field. There are new developments happening all the time, and the field is constantly growing and changing.
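
The phrase-based examples above can be tried side by side with a small helper. The following is a minimal Python sketch; `MODIFIERS`, `add_modifier`, and `make_variants` are hypothetical names made up for illustration. It only builds the prompt strings and does not call any model, so comparing the outputs is left to whichever LLM you use.

```python
# Phrases taken from the examples in the text; extend as needed.
MODIFIERS = {
    "creative": "in a creative way",
    "shakespeare": "in the style of Shakespeare",
}


def add_modifier(base_prompt: str, key: str) -> str:
    """Append one modifier phrase to the end of a base prompt."""
    stem = base_prompt.strip().rstrip(".")
    return f"{stem} {MODIFIERS[key]}."


def make_variants(base_prompt: str) -> dict[str, str]:
    """Return the unmodified prompt plus one variant per modifier."""
    variants = {"baseline": base_prompt.strip()}
    for key in MODIFIERS:
        variants[key] = add_modifier(base_prompt, key)
    return variants


for name, prompt in make_variants("Write a poem about a cat.").items():
    print(f"{name}: {prompt}")
```

Sending each variant to the same model and reading the outputs next to the baseline is a simple way to see how much a single phrase changes the result.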

Most popular myths and facts of prompt engineering

In this ever-evolving realm, it’s crucial to discern fact from fiction to stay ahead of the curve. Our team of experts has meticulously sifted through the noise to present you with the most accurate insights, dispelling myths that might have clouded your understanding. Let’s delve into the heart of prompting and uncover the truths that can drive your success.

Myth: Prompt engineering is just about keywords

Fact: Prompt engineering is a symphony of elements

Gone are the days when prompt engineering was solely about sprinkling keywords like confetti. Today, it’s a meticulous symphony of various components working harmoniously. While keywords remain pivotal, they’re just one part of the grand orchestra. Structured data, user intent analysis, and contextual relevance are the unsung heroes that make your prompt engineering soar. Balancing these elements crafts a narrative that resonates with both users and search engines.

Myth: More prompts, better results

Fact: Quality over quantity

Quantity might impress at first glance, but it’s quality that truly wields power in the world of prompt engineering. Crafting a handful of compelling, highly relevant prompts that align seamlessly with your content yields far superior results than flooding your page with irrelevant ones. Remember, it’s the value you provide that keeps users engaged, not the sheer number of prompts you throw their way.

Myth: Prompt engineering is a one-time task

Fact: Ongoing optimization is the key

Imagine your website as a garden that requires constant tending. Similarly, prompt engineering demands continuous attention. Regularly analyzing the performance of your prompts and adapting to shifting trends is paramount. This ensures that your content remains evergreen and resonates with the dynamic preferences of your audience.

Myth: Creativity has no place in prompt engineering

Fact: Creativity elevates engagement

While prompt engineering involves a systematic approach, creativity is the secret ingredient that adds flavor to the mix. Crafting prompts that spark curiosity, evoke emotion, or present a unique perspective can exponentially boost user engagement. Metaphors, analogies, and storytelling are potent tools that, when woven into your prompts, make your content unforgettable.

Myth: Only text prompts matter

Fact: Diversify with various formats

Text prompts are undeniably significant, but limiting yourself to them is a missed opportunity. Embrace a diverse range of prompt formats to cater to different learning styles and preferences.

Visual prompts, such as infographics and videos, engage visual learners, while audio prompts cater to those who prefer auditory learning. The more versatile your prompt formats, the broader the audience you reach.

Myth: Prompt engineering and SEO are unrelated

Fact: Symbiotic relationship

Prompt engineering and SEO are not isolated islands; they’re interconnected domains that thrive on collaboration. Solid prompt engineering bolsters SEO by providing search engines with the context they crave. Conversely, a well-optimized website enhances prompt engineering, as it ensures your content is easily discoverable by your target audience.

Myth: Complex language boosts credibility

Fact: Clarity trumps complexity

Using complex jargon might seem like a credibility booster, but it often does more harm than good. Clear, concise prompts that resonate with a broader audience hold more weight. Remember, the goal is not to showcase your vocabulary prowess but to communicate effectively and establish a genuine connection with your readers.

Myth: Prompt engineering is set-and-forget

Fact: Continuous monitoring is vital

Once you’ve orchestrated your prompts, it’s not time to sit back and relax. The digital landscape is in perpetual motion, and your approach to prompt engineering should be too. Monitor the performance of your prompts regularly, employing data analytics to identify patterns and make informed adjustments that keep your content relevant and engaging.
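
One way to make this kind of monitoring concrete is to score several prompt variants against the same test inputs and compare the averages. The sketch below is only an illustration under stated assumptions: `fake_model` is a stand-in that returns canned text so the example runs offline, `brevity_score` is a toy metric, and `evaluate_prompts` is a hypothetical helper. In practice you would replace the first two with a real LLM call and a metric that matches your goal.

```python
from statistics import mean
from typing import Callable


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption so the sketch runs offline)."""
    if "one sentence" in prompt:
        return "A short summary."
    return ("A much longer summary that rambles on well past the point "
            "of being useful to anyone.")


def brevity_score(output: str) -> float:
    """Toy metric: 1.0 if the output has at most 10 words, otherwise 0.0."""
    return 1.0 if len(output.split()) <= 10 else 0.0


def evaluate_prompts(
    templates: list[str],
    test_inputs: list[str],
    model: Callable[[str], str],
    score: Callable[[str], float],
) -> dict[str, float]:
    """Return the average score of each prompt template over all test inputs."""
    results = {}
    for template in templates:
        scores = [score(model(template.format(text=t))) for t in test_inputs]
        results[template] = mean(scores)
    return results


templates = ["Summarize: {text}", "Summarize in one sentence: {text}"]
inputs = ["First sample article.", "Second sample article."]

results = evaluate_prompts(templates, inputs, fake_model, brevity_score)
best = max(results, key=results.get)
print(results)
print("Best template:", best)
```

Re-running the same comparison whenever the model, the inputs, or the goal changes gives you a record of which prompt wording performs best over time.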

Myth: Only experts can master prompt engineering

Fact: Learning and iteration lead to mastery

While prompt engineering might appear daunting, it’s a skill that can be honed with dedication and a willingness to learn. Don’t shy away from experimentation and iteration. Embrace the insights gained from your data, be open to refining your approach, and gradually you’ll find yourself mastering the art of prompt engineering.

Begin your prompt engineering journey

Prompt engineering is a dynamic discipline that demands both strategy and creativity. Dispelling these myths and embracing the facts will propel your content to new heights, setting you apart from the competition. Remember, prompt engineering is not a one-size-fits-all solution; it’s an evolving journey of discovery that, when approached with dedication and insight, can yield remarkable results.