Generative AI is a type of artificial intelligence that can create new data, such as text, images, and music. This technology has the potential to revolutionize healthcare by providing new ways to diagnose diseases, develop new treatments, and improve patient care.

A recent report by McKinsey & Company suggests that generative AI in healthcare has the potential to generate up to $1 trillion in value for the healthcare industry by 2030. This represents a significant opportunity for the healthcare sector, which is constantly seeking new ways to improve patient outcomes, reduce costs, and enhance efficiency.

Generative AI in Healthcare

- Improved diagnosis: Generative AI can be used to create virtual patients that mimic real-world patients. These virtual patients can be used to train doctors and nurses on how to diagnose diseases.

- New drug discovery: Generative AI can be used to design new drugs that target specific diseases. This technology can help to reduce the time and cost of drug discovery.

- Personalized medicine: Generative AI can be used to create personalized treatment plans for patients. This technology can help to ensure that patients receive the best possible care.

- Better medical imaging: Generative AI can be used to improve the quality of medical images. This technology can help doctors to see more detail in images, which can lead to earlier diagnosis and treatment.

- More efficient surgery: Generative AI can be used to create virtual models of patients’ bodies. These models can be used to plan surgeries and to train surgeons.

- Enhanced rehabilitation: Generative AI can be used to create virtual environments that can help patients to recover from injuries or diseases. These environments can be tailored to the individual patient’s needs.

- Improved mental health care: Generative AI can be used to create chatbots that can provide therapy to patients. These chatbots can be available 24/7, which can help patients to get the help they need when they need it.

Limitations of Generative AI in Healthcare

Despite its promise, generative AI in healthcare has several limitations:

Data requirements: Generative AI models require large amounts of data to train. This data can be difficult and expensive to obtain, especially in healthcare.

Bias: Generative AI models can be biased, which means that they may not be accurate for all populations. This is a particular concern in healthcare, where bias can lead to disparities in care.

Interpretability: Generative AI models are often hard to interpret, so it is not always clear how they arrive at a given output. That opacity makes it harder to trust these models and to rely on them for clinical decision-making.

False results: Despite how sophisticated generative AI is, it is fallible. Inaccuracies and false results may emerge, especially when AI-generated guidance is relied upon without rigorous validation or human oversight, leading to misguided diagnoses, treatments, and medical decisions.

Patient privacy: Generative AI typically depends on processing large volumes of sensitive patient data. Without robust protection, that data is exposed to breaches and unauthorized access, putting patient privacy and confidentiality at risk.

Ethical considerations: Generative AI raises questions about responsible use, algorithmic transparency, and accountability for AI-generated outcomes. Answering them requires ethical frameworks and guidelines for implementation.

Regulatory and legal challenges: The regulatory landscape for generative AI in healthcare is intricate. Complying with data protection regulations, assigning liability for AI-generated errors, and ensuring transparency in algorithms all pose significant legal challenges.

Generative AI in Healthcare: 6 Use Cases

Generative AI is revolutionizing healthcare by leveraging deep learning, transformer models, and reinforcement learning to improve diagnostics, personalize treatments, optimize drug discovery, and automate administrative workflows. Below, we explore the technical advancements, real-world applications, and AI-driven improvements in key areas of healthcare.

Medical Imaging and Diagnostics

Generative AI in healthcare enhances medical imaging by employing convolutional neural networks (CNNs), generative adversarial networks (GANs), and diffusion models to reconstruct, denoise, and interpret medical scans. These models improve image quality, segmentation, and diagnostic accuracy, and in CT they can lower the radiation dose needed for a diagnostic-quality image. (MRI does not use ionizing radiation.)

Key AI Models Used:

U-Net & FCNs: These models enable precise segmentation of tumors and lesions in MRIs and CT scans, making it easier for doctors to pinpoint problem areas with higher accuracy.

CycleGAN: This model translates CT scans into synthetic MRI-like images without requiring paired CT/MRI datasets, which are time-consuming and resource-intensive to assemble. This increases diagnostic versatility.

Diffusion Models: Though still largely experimental in this setting, these models show promise for denoising noisy or low-resolution MRI and CT scans.

Real-World Applications:

Head and Neck Cancer Segmentation: In collaboration with University College London Hospitals, DeepMind developed CNN-based models to segment organs at risk in head and neck CT scans, with the aim of speeding up radiotherapy planning.

Diabetic Retinopathy Detection: Google’s AI team has created a model that can detect diabetic retinopathy from retinal images with 97.4% sensitivity, matching the performance of expert ophthalmologists.

Low-Dose CT Enhancement: GANs like GAN-CIRCLE can generate high-quality CT images from low-dose inputs, reducing radiation exposure while maintaining diagnostic quality.
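The sensitivity figure quoted above for diabetic retinopathy detection is easier to interpret next to its definition. The sketch below shows how sensitivity and specificity are derived from a confusion matrix. The class name, function names, and counts are illustrative assumptions and are not taken from any published study.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing model output with a reference grading."""
    true_pos: int
    false_neg: int
    true_neg: int
    false_pos: int


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of diseased cases the model flags: TP / (TP + FN)."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of healthy cases the model clears: TN / (TN + FP)."""
    return c.true_neg / (c.true_neg + c.false_pos)


# Made-up counts for illustration only.
counts = ConfusionCounts(true_pos=90, false_neg=10, true_neg=850, false_pos=50)
print(f"sensitivity = {sensitivity(counts):.3f}")
print(f"specificity = {specificity(counts):.3f}")
```

A screening model is usually judged on both numbers together, since high sensitivity alone can be achieved by flagging almost every image.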

Personalized Treatment and Drug Discovery

Generative AI accelerates drug discovery and precision medicine through reinforcement learning (RL), transformer-based models, and generative chemistry algorithms. These models predict drug-target interactions, optimize molecular structures, and identify novel treatments.

Key AI Models Used:

AlphaFold (DeepMind): AlphaFold predicts protein 3D structures with remarkable accuracy, enabling faster identification of potential drug targets and advancing personalized medicine.

Variational Autoencoders (VAEs): These models explore chemical space and generate novel drug molecules, with companies like Insilico Medicine leveraging VAEs to discover new compounds for various diseases.

Transformer Models (BioGPT, ChemBERTa): These models analyze large biomedical datasets to predict drug toxicity, efficacy, and interactions, helping scientists streamline the drug development process.

Real-World Applications:

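AI-Generated Drug Candidates: Insilico Medicine used generative AI to discover a preclinical candidate for fibrosis in just 18 months, far quicker than the traditional 3 to 5 years.

Halicin Antibiotic Discovery: MIT researchers trained a deep learning model to predict antibacterial activity and used it to screen a drug-repurposing library of several thousand compounds, identifying halicin, a novel antibiotic active against drug-resistant bacteria. The same model was then run over a database of more than 100 million molecules to surface further candidates.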

Precision Oncology: Tools like Tempus analyze multi-omics data (genomics, transcriptomics) to recommend personalized cancer therapies, offering tailored treatments based on an individual’s unique genetic makeup.

Virtual Health Assistants and Chatbots

AI-powered chatbots use transformer-based NLP models and reinforcement learning from human feedback (RLHF) to understand patient queries, provide triage, and deliver mental health support.

Key AI Models Used:

Med-PaLM 2 (Google): This medically tuned large language model (LLM) answers complex clinical questions with impressive accuracy, performing well on U.S. Medical Licensing Examination (USMLE)-style questions.

ClinicalBERT: A specialized version of BERT, ClinicalBERT is pretrained on clinical notes from electronic health records (EHRs) and can be fine-tuned for prediction tasks such as hospital readmission, giving healthcare professionals decision support drawn from free-text notes.

Real-World Applications:

Mental Health Support: Woebot uses sentiment analysis and cognitive-behavioral therapy (CBT) techniques to support users dealing with anxiety and depression, offering them coping strategies and a listening ear.

AI Symptom Checkers: Babylon Health offers an AI-powered chatbot that analyzes symptoms and helps direct patients to the appropriate level of care, improving access to healthcare.

Medical Research and Data Analysis

AI accelerates research by analyzing complex datasets with self-supervised learning (SSL), graph neural networks (GNNs), and federated learning while preserving privacy.

Key AI Models Used:

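Graph Neural Networks (GNNs): GNNs model biological networks such as protein-protein and drug-protein interactions. Stanford’s Decagon model, for example, uses such a graph to predict side effects of drug combinations, and similar graph-based methods support drug repurposing.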

Federated Learning: This technique trains AI models on datasets distributed across different institutions (like Google’s mammography research). Only model updates are shared with a central server, so raw patient records never leave the institution that holds them.
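To make the federated learning idea concrete, here is a minimal sketch using federated averaging, one common aggregation rule. The source does not say which rule any particular project uses, so treat that choice as an assumption. The one-parameter model, the function names, and the toy "hospital" datasets are all hypothetical.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (feature, label) pairs held by one site


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps for the model y = w * x on one site's data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w: float, sites: List[Dataset]) -> float:
    """One round: each site trains locally, then the server averages the results.

    Only the updated weight and the sample count leave each site.
    """
    updates = [(local_update(global_w, data), len(data)) for data in sites]
    total = sum(n for _, n in updates)
    # Weight each site's result by how many samples it trained on.
    return sum(w * n for w, n in updates) / total


# Hypothetical toy data: three sites whose records all follow y = 3x.
sites = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
    [(2.5, 7.5)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, sites)
print(f"learned weight: {w:.3f}")
```

With this toy data the shared weight should approach 3 even though no site ever sees another site's records. Real systems typically add protections such as secure aggregation, since model updates themselves can leak information.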

Real-World Applications:

The Cancer Genome Atlas (TCGA): AI models are used to analyze genomic data to identify mutations driving cancer progression, helping researchers understand cancer biology at a deeper level.

Synthetic EHRs: Companies like Syntegra are generating privacy-compliant synthetic patient data for research, enabling large-scale studies without risking patient privacy.

Robotic Surgery and AI-Assisted Procedures

AI-assisted robotic surgery integrates computer vision and predictive modeling to enhance precision, though human oversight remains critical.

Key AI Models Used:

Mask R-CNN: This model identifies anatomical structures in real-time during surgery, providing surgeons with a better view of critical areas and improving precision.

Reinforcement Learning (RL): RL is used to train robotic systems to adapt to tissue variability, allowing them to make more precise adjustments during procedures.

Real-World Applications:

Da Vinci Surgical System: Surgeons use AI-assisted tools to smooth motion and reduce tremors during minimally invasive procedures, improving outcomes and reducing recovery times.

Neurosurgical Guidance: AI is used in neurosurgery to map functional brain regions during tumor resections, reducing the risk of damaging critical brain areas during surgery.

AI in Administrative Healthcare

AI automates workflows using NLP, OCR, and anomaly detection, though human validation is often required for regulatory compliance.

Key AI Models Used:

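Tesseract OCR: This open-source optical character recognition (OCR) engine helps digitize scanned and printed clinical documents, converting them into machine-readable text for downstream structuring and analysis. It is designed for printed text, so handwritten notes generally need a dedicated handwriting-recognition model.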

Anomaly Detection: AI models can analyze claims data to flag potential fraud, reducing administrative overhead and improving security.
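As a rough illustration of flagging unusual claims, the sketch below scores each claim amount with a modified z-score based on the median absolute deviation (MAD). This is a simple statistical stand-in rather than the method any particular vendor uses. The function name, the claim IDs, the amounts, and the 3.5 cutoff are assumptions made for the example.

```python
from statistics import median
from typing import Dict, List


def flag_outlier_claims(amounts: Dict[str, float], threshold: float = 3.5) -> List[str]:
    """Return claim IDs whose amount is far from the median.

    Uses the modified z-score, 0.6745 * |x - median| / MAD, which is less
    distorted by extreme values than a mean/standard-deviation score.
    """
    values = list(amounts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # at least half the amounts equal the median; score undefined
    return [
        claim_id
        for claim_id, amount in amounts.items()
        if 0.6745 * abs(amount - med) / mad > threshold
    ]


# Hypothetical claim amounts in dollars.
claims = {
    "C001": 120.0, "C002": 135.0, "C003": 110.0, "C004": 128.0,
    "C005": 4200.0, "C006": 131.0, "C007": 117.0,
}
print(flag_outlier_claims(claims))
```

Here only `C005` is flagged. A flag of this kind is a prompt for human review, not evidence of fraud on its own.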

Real-World Applications:

AI-Assisted Medical Coding: Tools like Nuance CDI assist in coding clinical documentation, improving accuracy and reducing errors in the medical billing process by over 30% in some pilot studies.

Hospital Resource Optimization: AI can predict patient admission rates and help hospitals optimize staff scheduling and resource allocation, ensuring smoother operations and more effective care delivery.

Simple Strategies for Mitigating the Risks of AI in Healthcare

The limitations discussed above make it critical to address these risks and ensure that AI is implemented responsibly. This demands a collaborative effort from healthcare organizations, regulatory bodies, and AI developers to mitigate biases, safeguard patient privacy, and uphold ethical principles.

Mitigating Biases and Ensuring Unbiased Outcomes

One of the primary concerns surrounding generative AI in healthcare is the potential for biased outputs. Generative AI models, if trained on biased datasets, can perpetuate and amplify existing disparities in healthcare, leading to discriminatory outcomes. To address this challenge, healthcare organizations must adopt a multi-pronged approach:

1. Diversity in Data Sources: Diversify the datasets used to train AI models to ensure they represent the broader patient population, encompassing diverse demographics, ethnicities, and socioeconomic backgrounds.

2. Continuous Monitoring and Bias Detection: Continuously monitor AI models for potential biases, employing techniques such as fairness testing and bias detection algorithms.

3. Human Oversight and Intervention: Implement robust human oversight mechanisms to review AI-generated outputs, ensuring they align with clinical expertise and ethical considerations.
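The fairness testing mentioned above can take many forms. One simple check, sketched here, compares the true positive rate across demographic groups (sometimes called an equal-opportunity check). The record layout, the group names, the data, and the 0.2 tolerance are all hypothetical and would need to be set for the clinical context.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record: (demographic group, true label, model prediction); labels are 0/1.
Record = Tuple[str, int, int]


def true_positive_rate_by_group(records: List[Record]) -> Dict[str, float]:
    """Per-group sensitivity: of the truly positive cases, how many were caught."""
    hits: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, label, pred in records:
        if label == 1:
            positives[group] += 1
            hits[group] += int(pred == 1)
    return {g: hits[g] / positives[g] for g in positives}


def tpr_gap(rates: Dict[str, float]) -> float:
    """Largest difference in true positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation records; group names are placeholders.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_b", 0, 1),
]
rates = true_positive_rate_by_group(records)
gap = tpr_gap(rates)
print(rates, gap)
if gap > 0.2:  # tolerance is an assumption, not a recommended value
    print("TPR gap exceeds tolerance; route the model for human review")
```

In this toy data the model catches 75% of positive cases in one group and 25% in the other, which is the kind of disparity continuous monitoring is meant to surface before it affects care.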

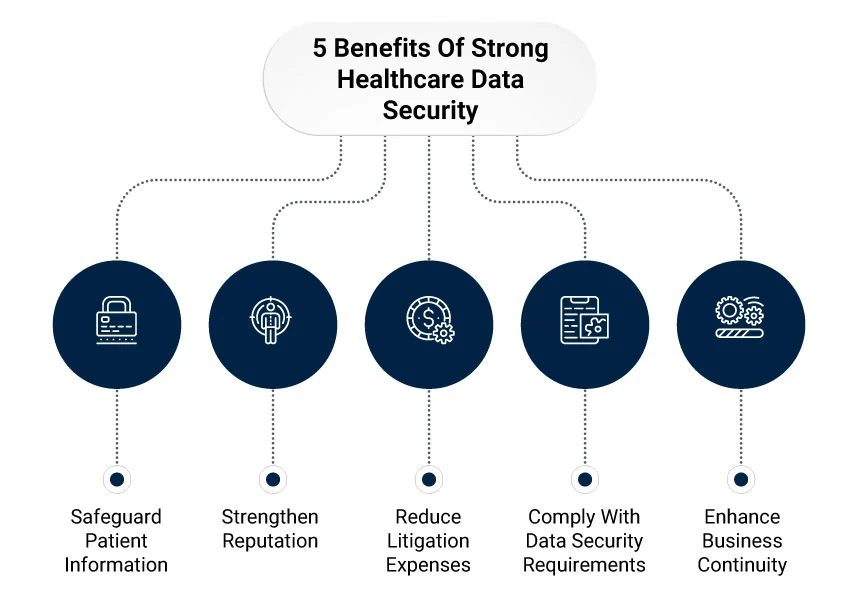

Safeguarding Patient Privacy and Data Security

The use of generative AI in healthcare involves the processing of vast amounts of sensitive patient data, including medical records, genetic information, and personal identifiers. Protecting this data from unauthorized access, breaches, and misuse is paramount. Healthcare organizations must prioritize data security by implementing the following measures.

Secure Data Storage and Access Controls

To ensure the protection of sensitive patient data, it’s crucial to implement strong security measures like data encryption and multi-factor authentication. Encryption ensures that patient data is stored in a secure, unreadable format, accessible only to authorized individuals. Multi-factor authentication adds an extra layer of security, requiring users to provide multiple forms of verification before gaining access.

Additionally, strict access controls should be in place to limit who can view or modify patient data, ensuring that only those with a legitimate need can access sensitive information. These measures help mitigate the risk of data breaches and unauthorized access.
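The access-control idea above can be sketched as a role-based check that also requires a completed multi-factor login. Everything named here (the roles, the permission strings, the `User` class, and the `mfa_verified` flag) is a hypothetical illustration rather than any specific product's API.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical role-to-permission map; real deployments define their own.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "physician": frozenset({"read_record", "write_record"}),
    "billing_clerk": frozenset({"read_billing"}),
    "researcher": frozenset({"read_deidentified"}),
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool  # assumed to be set by the authentication layer


def is_allowed(user: User, action: str) -> bool:
    """Allow an action only for MFA-verified users whose role grants it."""
    granted = ROLE_PERMISSIONS.get(user.role, frozenset())
    return user.mfa_verified and action in granted


dr_lee = User("dr_lee", "physician", mfa_verified=True)
clerk = User("clerk_01", "billing_clerk", mfa_verified=True)
no_mfa = User("dr_kim", "physician", mfa_verified=False)

print(is_allowed(dr_lee, "read_record"))  # physician with MFA
print(is_allowed(clerk, "read_record"))   # role lacks the permission
print(is_allowed(no_mfa, "read_record"))  # right role, but MFA not completed
```

Denying by default (an unknown role gets an empty permission set) keeps mistakes in the role map from silently granting access.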

Data Minimization and Privacy by Design

AI systems in healthcare should follow the principle of data minimization, collecting only the data necessary to achieve their specific purpose. This reduces the risk of over-collection and ensures that sensitive information is only used when absolutely necessary.

Privacy by design is also essential: privacy considerations should be embedded into the AI system’s development from the very beginning. Techniques like anonymization and pseudonymization, in which personal identifiers are removed or replaced, make it more difficult to link data back to specific individuals. These steps help safeguard patient privacy while ensuring the AI system remains effective.
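Data minimization and pseudonymization can be combined in a small preprocessing step, sketched below under stated assumptions. The field names, the allow-list, and the demo key are hypothetical. The keyed hash uses HMAC-SHA256 from the Python standard library as one possible pseudonymization technique, not the only one.

```python
import hashlib
import hmac
from typing import Any, Dict

# Fields the hypothetical downstream model actually needs.
ALLOWED_FIELDS = {"age", "diagnosis_code", "lab_result"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same token, so records can still be
    linked, but the token cannot feasibly be recomputed without the key.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: Dict[str, Any], secret_key: bytes) -> Dict[str, Any]:
    """Keep only allow-listed fields and swap the patient ID for a token."""
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    slim["patient_token"] = pseudonymize(record["patient_id"], secret_key)
    return slim


# Placeholder key for the demo; a real key belongs in a secrets manager.
key = b"demo-key-not-for-production"
raw = {
    "patient_id": "MRN-0042", "name": "Jane Doe", "address": "1 Example St",
    "age": 57, "diagnosis_code": "E11.9", "lab_result": 7.2,
}
print(minimize(raw, key))
```

Pseudonymized records can still be re-identified through combinations of remaining fields, so this step reduces risk rather than removing it.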

Transparent Data Handling Practices

Clear communication with patients about how their data will be used, stored, and protected is essential to maintaining trust. Healthcare providers should obtain informed consent from patients before using their data in AI models, ensuring they understand the purpose and scope of data usage.

This transparency helps patients feel more secure in sharing their data and allows them to make informed decisions about their participation. Regular audits and updates to data handling practices are also important to ensure ongoing compliance with privacy regulations and best practices in data security.

Upholding Ethical Principles and Ensuring Accountability

The integration of generative AI in healthcare decision-making raises ethical concerns regarding transparency, accountability, and the ethical use of AI algorithms. To address these concerns, healthcare organizations must:

- Provide transparency and explainability of AI algorithms, enabling healthcare professionals to understand the rationale behind AI-generated decisions.

- Implement accountability mechanisms for generative AI to ensure error resolution, risk mitigation, and harm prevention, with providers, developers, and regulators defining clear roles and responsibilities for overseeing AI-generated outcomes.

- Develop and adhere to ethical frameworks and guidelines that govern the responsible use of generative AI in healthcare, addressing issues such as fairness, non-discrimination, and respect for patient autonomy.

Ensuring Safe Passage: A Continuous Commitment

The responsible implementation of generative AI in healthcare requires a proactive and multifaceted approach that addresses potential risks, upholds ethical principles, and safeguards patient privacy.

By adopting these measures, healthcare organizations can use generative AI to transform care delivery while ensuring its benefits are safe, equitable, and ethical.