Welcome to Data Science Dojo’s Weekly Newsletter, “The Data-Driven Dispatch”.

Do you know how much it costs to run ChatGPT every day? Well, hold on to your hats, because the numbers are jaw-dropping! Running the GPT-3.5 version reportedly costs OpenAI about $700,000 per day. And the newer GPT-4? It demands an even steeper financial commitment.

But why such a hefty price tag? These language models are like the muscle cars of the AI world – they need a ton of computational horsepower to flex their linguistic proficiency. It’s no small feat to process millions of words and produce coherent, intelligent responses.

So, why is everyone in the tech race trying to build the biggest AI on the block, despite the skyrocketing costs?

Initially, the industry focused on enhancing model capabilities through increasing parameters.

However, this approach overlooked the escalating operational costs.

As these models have become a necessity globally, there’s a dire need to find sustainable ways of running language models.

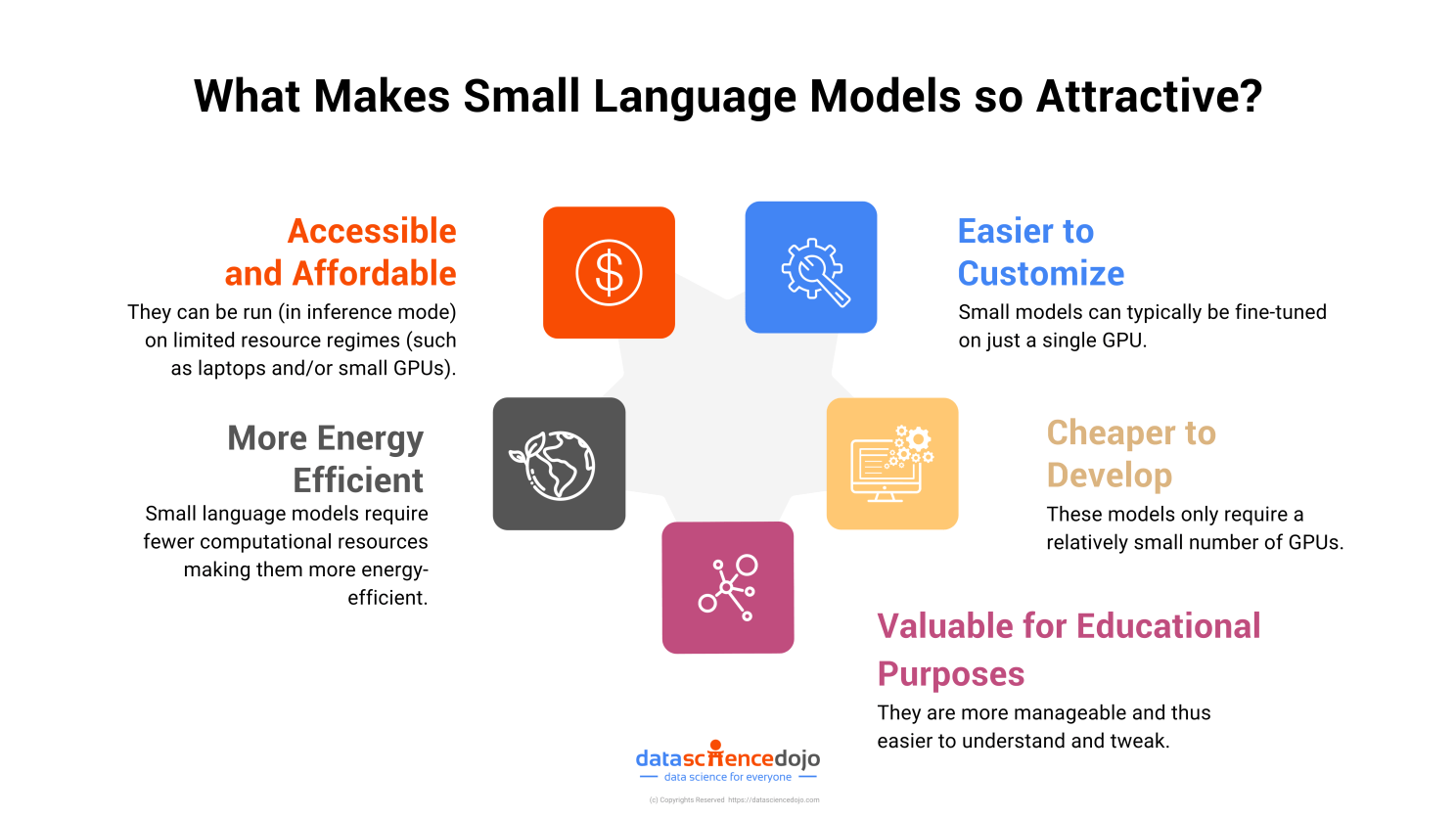

This is exactly why small language models have entered the generative AI landscape and gained so much popularity.

Not only are they cost-effective, but they offer interesting implications in our personal lives. Let’s explore them together.

What are Small Language Models?

Small language models are scaled-down versions of larger AI models like GPT-3 or GPT-4. They are designed to perform similar tasks, namely understanding and generating human-like text, but with fewer parameters. Consequently, they are cheaper to run and more environmentally responsible.

Read: Small Language Models Simplified

This is why big tech companies like Microsoft, Google, and Meta are deploying huge teams to develop techniques for training SLMs. Emerging startups such as the French unicorn Mistral AI are also working on building efficient SLMs.

Here’s a comparison of the performance of some leading small language models like Phi 3, Llama 3, Gemma, and more.

Read: The 5 leading small language models of 2024: Phi 3, Llama 3, and more

How Can Small Language Models Function Well with Fewer Parameters?

Well, there are two reasons for this: their domain-specific nature, and training methods that differ from those used for LLMs.

- Domain-Specific Nature of SLMs: SLMs are often domain-specific, which allows them to handle data and problems that are unique to an organization’s industry such as finance, healthcare, or supply chain management. This gives them a significant advantage in performance within their respective domains.

- Training of SLMs: SLMs are more efficient to train and deploy as they require less data. Currently, popular techniques include transfer learning and knowledge distillation. Transfer learning allows SLMs to leverage pre-trained models and build upon their knowledge, reducing the need for large training datasets. Knowledge distillation, on the other hand, involves training SLMs using a larger model’s outputs, effectively condensing the larger model’s knowledge into the smaller one.
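To make knowledge distillation a little more concrete, here is a minimal sketch in plain Python. It assumes a common formulation in which the student is trained to match the teacher's temperature-softened output probabilities, with the mismatch measured by KL divergence. The function names, the temperature value, and the toy logits are all illustrative assumptions, not taken from any particular model or library, and real training pipelines typically combine this term with a standard loss on the true labels.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    A value near zero means the student closely mimics the teacher.
    """
    p = softmax(teacher_logits, temperature)  # teacher "soft targets"
    q = softmax(student_logits, temperature)  # student predictions
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores over three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token

print("close student loss:", distillation_loss(teacher, close_student))
print("far student loss:", distillation_loss(teacher, far_student))
```

The student that agrees with the teacher gets the smaller loss, so minimizing this quantity during training nudges the smaller model toward the larger model's behavior.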

How Will SLMs Change Our Daily Lives in the Future?

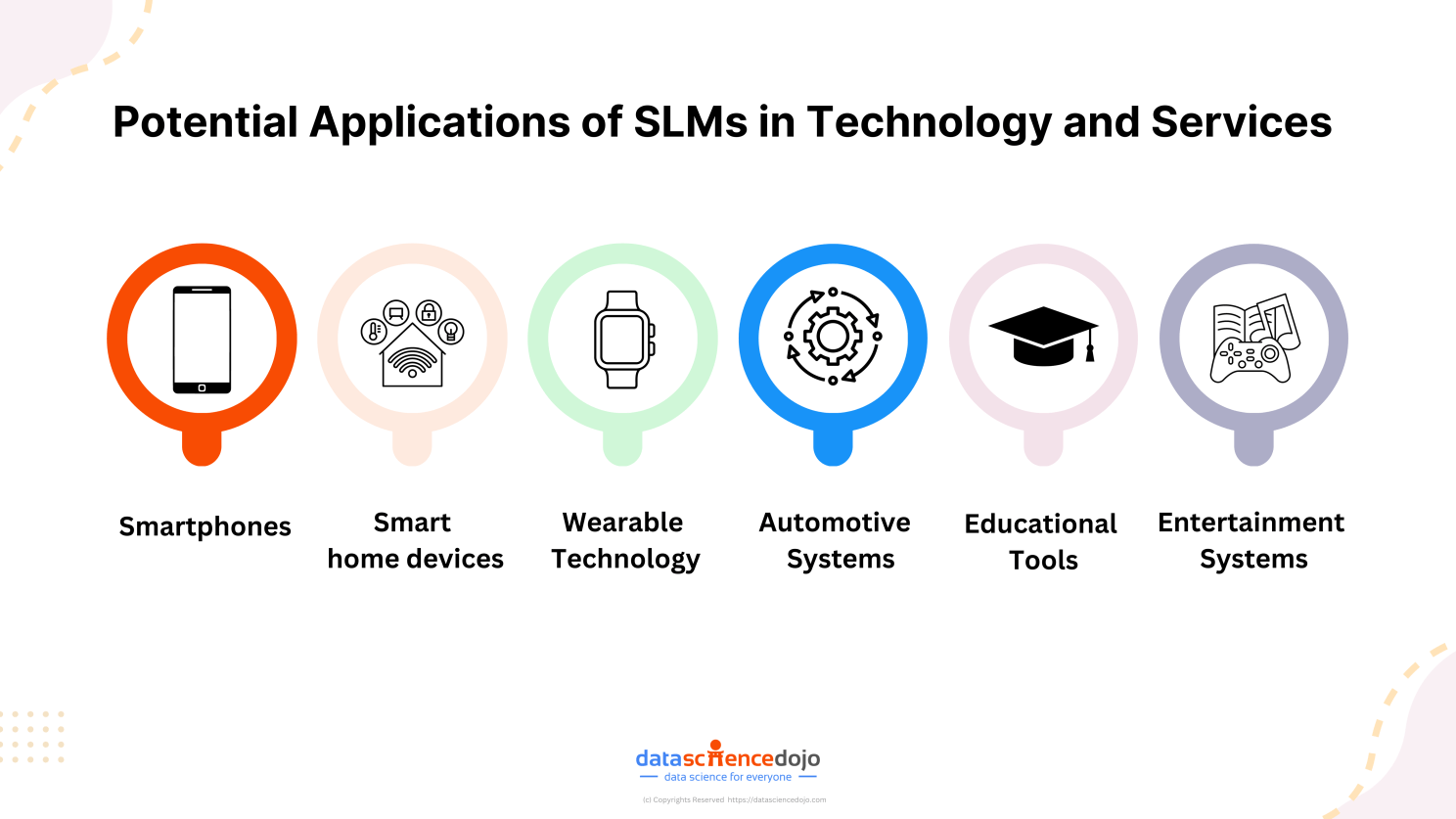

Considering the efficiency of small language models and their ability to run on minimal computational resources, they are set to be embedded in a wide range of everyday tools.

Take smartphones, for instance. Manufacturers are developing these compact language models for integration directly into mobile devices. This will enable users to perform various searches without having to access the internet, offering enhanced privacy in today’s digital era.

Eventually, SLMs will have vast implications in our everyday lives due to their accessibility and ease of use.

Risks of Large Language Models

Small language models do solve various challenges that are posed by LLMs, but we cannot ignore the underlying issues of bias and discrimination that these models have.

Listen to this Microsoft podcast featuring Hanna Wallach, whose research into fairness, accountability, transparency, and ethics in AI and machine learning has helped inform the use of AI in Microsoft products and services for years.

AI Frontiers: Measuring and mitigating harms with Hanna Wallach

Finding the information overload too daunting? Time to sit back and relax!

Redefine the Boundaries of Your Potential With AI

This week’s dispatch has made one thing clear: language models, whether small or large, have vast implications for the future. They are going to be everywhere in our daily lives and businesses. Read more

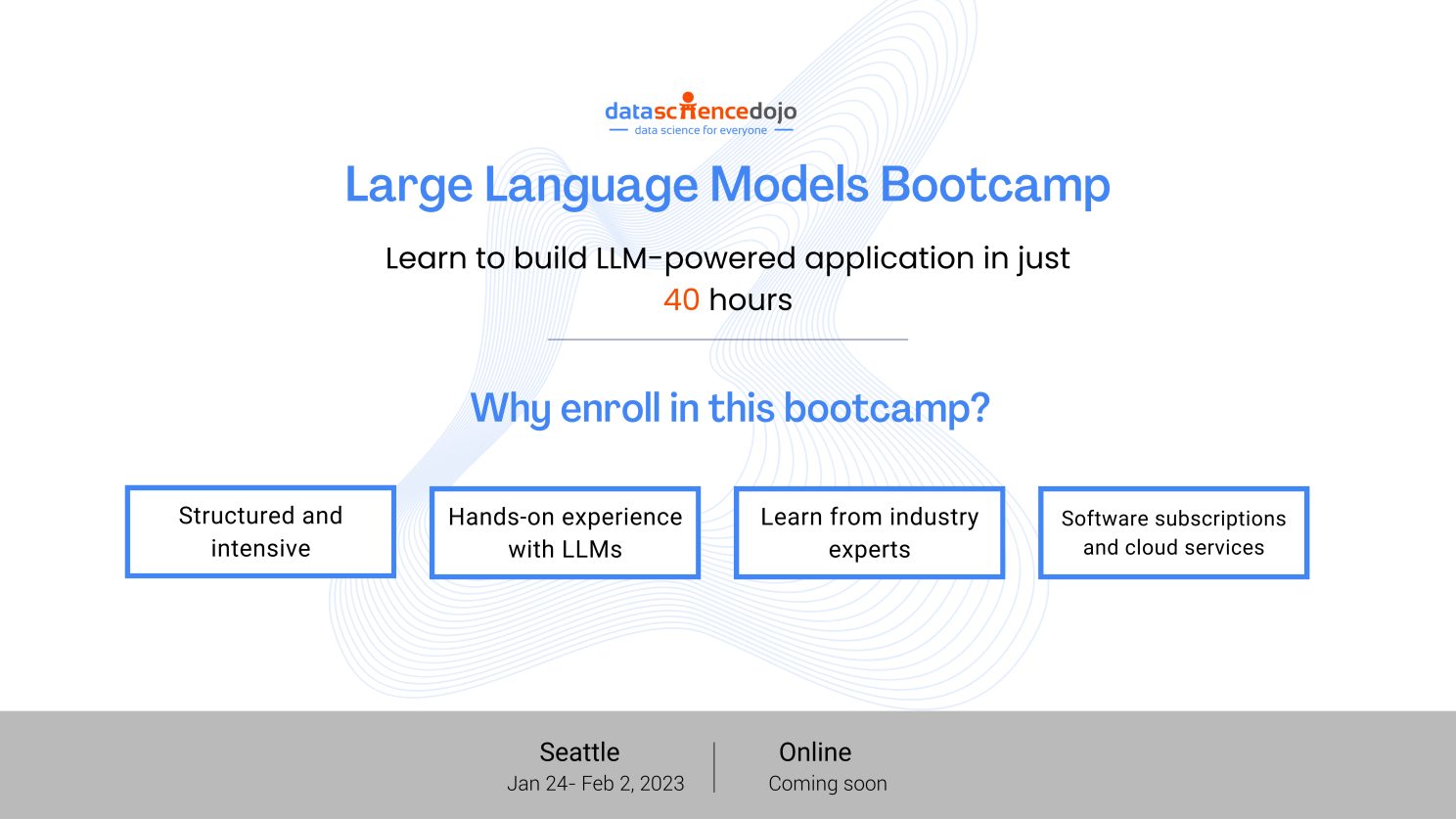

Hence, we must learn how to build language-model-powered applications, because they are the gateway to moving past the limits we humans have faced so far. Consider enrolling in Data Science Dojo’s Large Language Models Bootcamp, a comprehensive 40-hour training where you can learn it all.

Learn more about the comprehensive curriculum of the bootcamp here.

Finally, let’s end the day with some AI headlines that kept the show alive.

- OpenAI debuts ChatGPT subscription aimed at small teams. Read more

- OpenAI launches a store for custom AI-powered chatbots. Read more

- Google unveils VideoPoet, a simple modeling method that can convert any large language model into a high-quality video generator. Read more

- Microsoft’s Copilot app is now available on iOS. The app allows users to ask questions, draft text, and generate images. Read more

- France is emerging as a prominent AI hub, attracting tech giants’ research labs and seeing a surge of strong competition among VC firms. Read more