Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”.

We have entered an interesting era of AI development. On one hand, we see progress in artificial intelligence happening every day. On the other hand, we worry that either (A) this burst of innovation will expose us to dangerous consequences of artificial intelligence, or (B) we will regulate the technology so heavily that innovation shrinks and stays confined to big tech.

Hence, more than ever before, effective AI policies are needed! They should mitigate the potential risks of LLM-powered applications while keeping the fire of innovation alive.

Let’s examine how different governments are regulating AI and how these rules could affect innovation at the cutting edge!

What Is Regulatory Capture, and How Does It Shape Innovation?

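Regulatory capture occurs when regulation ends up serving the large companies it is meant to oversee. For example, rules can become so complex that only big players can afford to comply, which lets them dominate the market.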

Think of regulatory capture as a game where the big players have the upper hand. The rules are so complicated and costly to follow that only the big companies can keep up, as in the pharmaceutical industry. Smaller businesses? They simply can’t afford to play. It’s like a forest where the big trees hog all the sunlight and the little plants are left in the dark.

So, what happens? Innovation, especially from small and medium enterprises, just doesn’t stand a chance.

Is the Global Rise in AI Regulation Leading Us Toward Regulatory Capture?

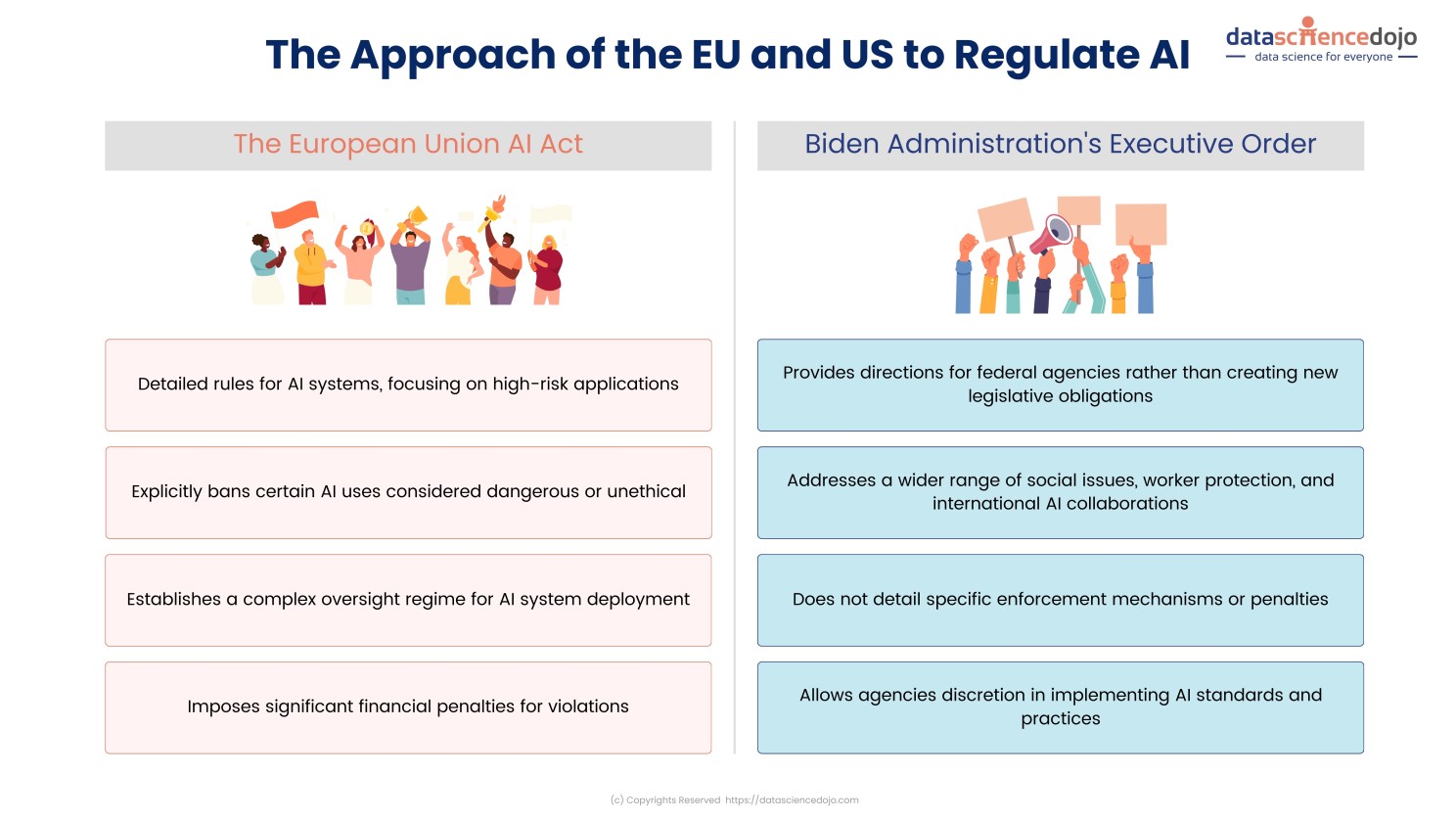

We are seeing increasing pressure to regulate artificial intelligence amid rising fears. Recently, the European Union agreed on a draft of the AI Act, which aims to impose detailed rules on AI systems. It focuses on high-risk AI, with obligations for data governance, testing, and post-market monitoring, and it prohibits certain uses of AI altogether.

Although it places limits on foundation models, the legislation exempts open-source models. This move was influenced by lobbying from European AI firms such as Mistral and Aleph Alpha, and it also benefits Meta’s open-source Llama model.

Read more: Comprehensive AI Act – Tech Revolution by European Union

Meanwhile, in the US, the Biden administration issued an executive order on AI. In contrast to the EU Act, it does not create new legislative obligations; instead, it gives directions to federal agencies on issues such as social impact, worker protection, and international AI frameworks.

Both measures address high-risk artificial intelligence systems, transparency, and standards, but they differ in approach and enforcement. The EU Act sets up a complex oversight regime with significant penalties, while the US executive order lacks specific enforcement provisions.

Nor is the EU united over the AI Act. The Macron government believes the Act is likely to push Europe behind the US and China.

Some prominent thought leaders in the field also reject the idea of restricting research and development of the technology in the first place. Andrew Ng recently said, “Regulating large language models makes as much sense as regulating high horsepower motors”.

The alternative they propose is to regulate harmful AI applications rather than the underlying technology itself.

Want to learn more about AI? Our blog is the go-to source for the latest tech news.

2,851 Miles: What’s Lurking Between Tech and Policy?

Continuing our exploration of regulatory capture and its profound impact on the tech industry, we bring to your attention a pivotal talk by Bill Gurley, a notable venture capitalist.

He highlights how regulations, though intended to be beneficial, can inadvertently give an edge to large firms, putting innovation in artificial intelligence at risk. Gurley’s insights are particularly relevant as the EU develops new laws, offering a valuable perspective on their potential impact on both big tech companies and startups.

To connect with LLM and data science professionals, join our Discord server.

Time for a chill pill! Can you relate to the programming team’s obsession with automation? 😂

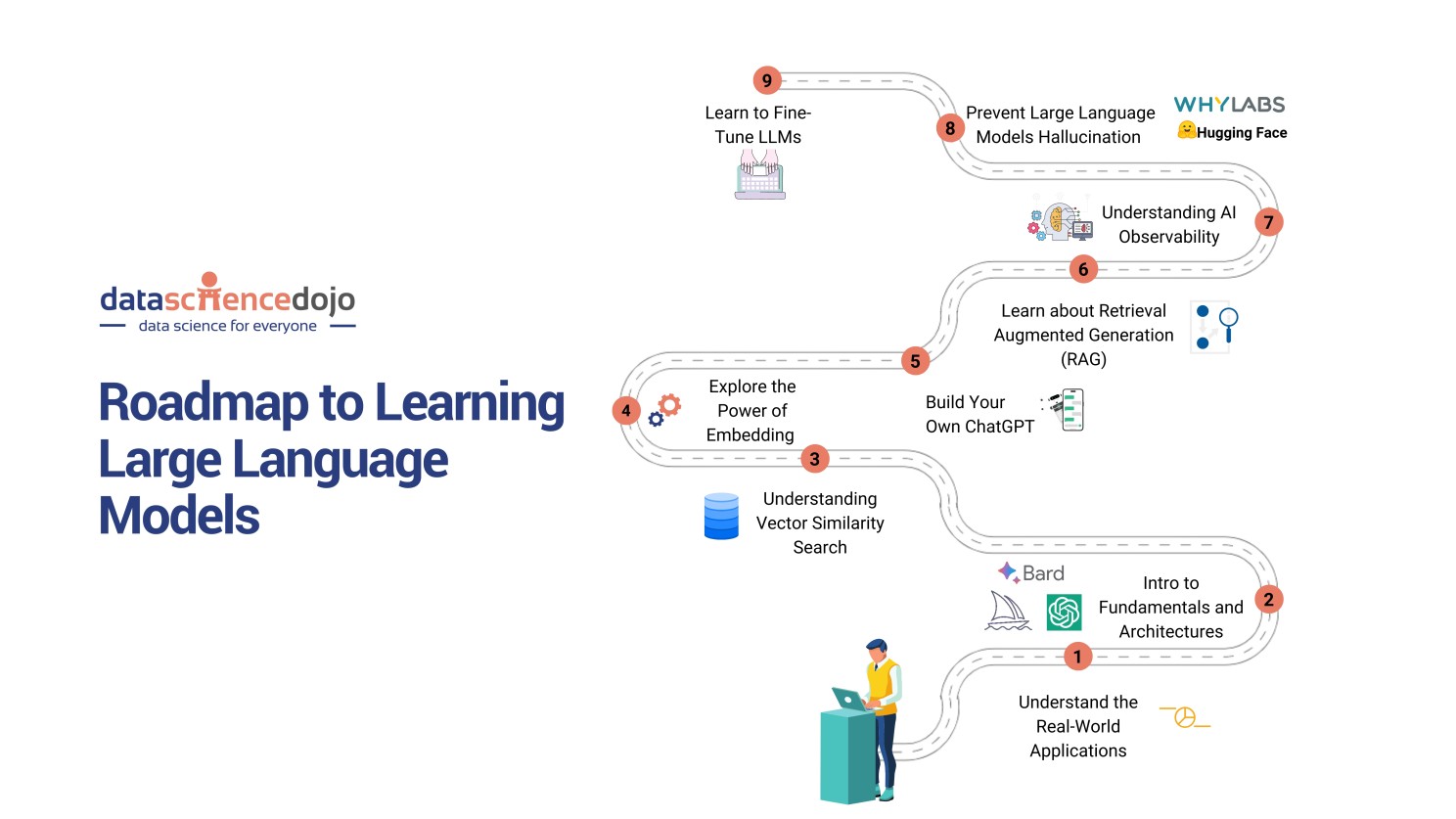

Lead the Chase: Master Large Language Models (LLMs)

Understanding LLMs thoroughly, including how they work, is crucial. This knowledge enables more meaningful conversations about the role and influence of these technologies across sectors, leading to a society that is both technologically literate and well informed.

Here’s your brief roadmap for mastering LLMs.

Read: Roadmap to Learning Large Language Models

Finally, let’s wrap up with some of this week’s latest headlines!

- Meta introduces Purple Llama, an umbrella project featuring open trust and safety tools and evaluations to help developers build responsibly with large language models. Read more

- With Nvidia’s best chips sold out until 2024, Amazon unveils new chips for training and running models. Read more

- A new study reveals a racial disparity in autonomous vehicle detection: darker skin is linked to higher non-detection rates, raising safety concerns. Read more

- Stability AI introduces SDXL Turbo, a real-time text-to-image generation model that reduces the number of generation steps from 50 to 1. Read more

- Google Cloud and HashiCorp extend partnership to advance product offerings with generative artificial intelligence. Read more