Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”.

Generative AI has quickly become a necessity. However, the large language models that power it demand enormous computational resources.

Running these powerful applications locally on everyday devices like phones and laptops is a daunting prospect.

The challenge involves running massive models on limited computational power, working within tight memory constraints, and optimizing those models efficiently.

Interestingly, Apple has taken a big step toward making this a reality with its recent announcement of Apple Intelligence.

Let’s dig into Apple’s long-awaited move into the AI sphere and why it matters, even though Apple is entering the race quite late.

The Rise of On-Device AI with Apple Intelligence

While cloud-based AI applications like ChatGPT are highly effective, concerns about data leakage and the potential use of personal data for model training make many users uneasy.

On-device AI addresses these concerns and offers several advantages:

- Privacy: Your data stays on your device, reducing the risk of breaches and keeping your information secure.
- Speed: With AI processing happening locally, you get faster responses. No more waiting for data to travel back and forth from the cloud.
- Reliability: On-device AI works even without an internet connection. This means your smart assistant or AI-powered app is always ready to help, no matter where you are.

What is Apple Intelligence

Apple Intelligence integrates advanced AI technologies into iOS, iPadOS, and macOS.

This means you get powerful AI features such as summarizing your calls, finding docs with semantic search, creating unique emojis, asking Siri to execute your tasks across different apps, and a lot more.

The Dual Model Architecture

Apple has introduced a dual-model architecture: on-device models handle lighter tasks such as question answering, while server models running on Apple’s Private Cloud Compute handle tasks that need more computational power, such as data analysis.

- On-Device Models: These handle tasks that need quick responses and can work offline. They are designed to be fast and efficient, making sure your device performs well without draining too many resources.
- Server Models: For more complex tasks, server models take over. They use the cloud’s computing power to deliver advanced capabilities without putting a heavy load on your device.

By blending these two approaches, Apple Intelligence aims to give you the best of both worlds: speedy, reliable performance and advanced AI capabilities.
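To make this division of labor concrete, here is a toy routing sketch in Python. It is not Apple’s implementation or API: the `Task` type, its `complexity` score, the `ON_DEVICE_LIMIT` threshold, and the `route` function are all hypothetical, chosen only to illustrate how a dispatcher might keep light or offline requests on the device and send heavier ones to a server model.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    ON_DEVICE = "on-device model"
    SERVER = "server model"


@dataclass
class Task:
    # Hypothetical request description: a name and a rough 0-1 complexity score.
    name: str
    complexity: float


# Hypothetical threshold: tasks at or below this score stay on the device.
ON_DEVICE_LIMIT = 0.5


def route(task: Task, online: bool) -> Backend:
    """Pick a backend for a task (illustrative policy, not Apple's)."""
    # Without a connection, the on-device model is the only option.
    if not online:
        return Backend.ON_DEVICE
    # Light tasks run locally for speed; heavy ones go to the server.
    if task.complexity <= ON_DEVICE_LIMIT:
        return Backend.ON_DEVICE
    return Backend.SERVER


if __name__ == "__main__":
    tasks = [Task("answer a quick question", 0.2), Task("analyze a large dataset", 0.9)]
    for t in tasks:
        print(f"{t.name}: {route(t, online=True).value}")
```

In this sketch, a heavy task requested while offline still falls back to the on-device model; a real system might instead decline the request or wait until a connection is available.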

Read more: Inside Apple Intelligence: Implementing On-Device AI Smartly

Apple’s Strategic Move

This strategy reflects Apple’s cautious approach to embracing generative AI. Although Apple prioritizes strong privacy and tight integration, its late entry into the field can make it look like it is playing catch-up with Google and Microsoft.

However, Apple’s control over its ecosystem gives it a strong platform to distribute and refine AI innovations, positioning it as a potential leader in the generative AI landscape.

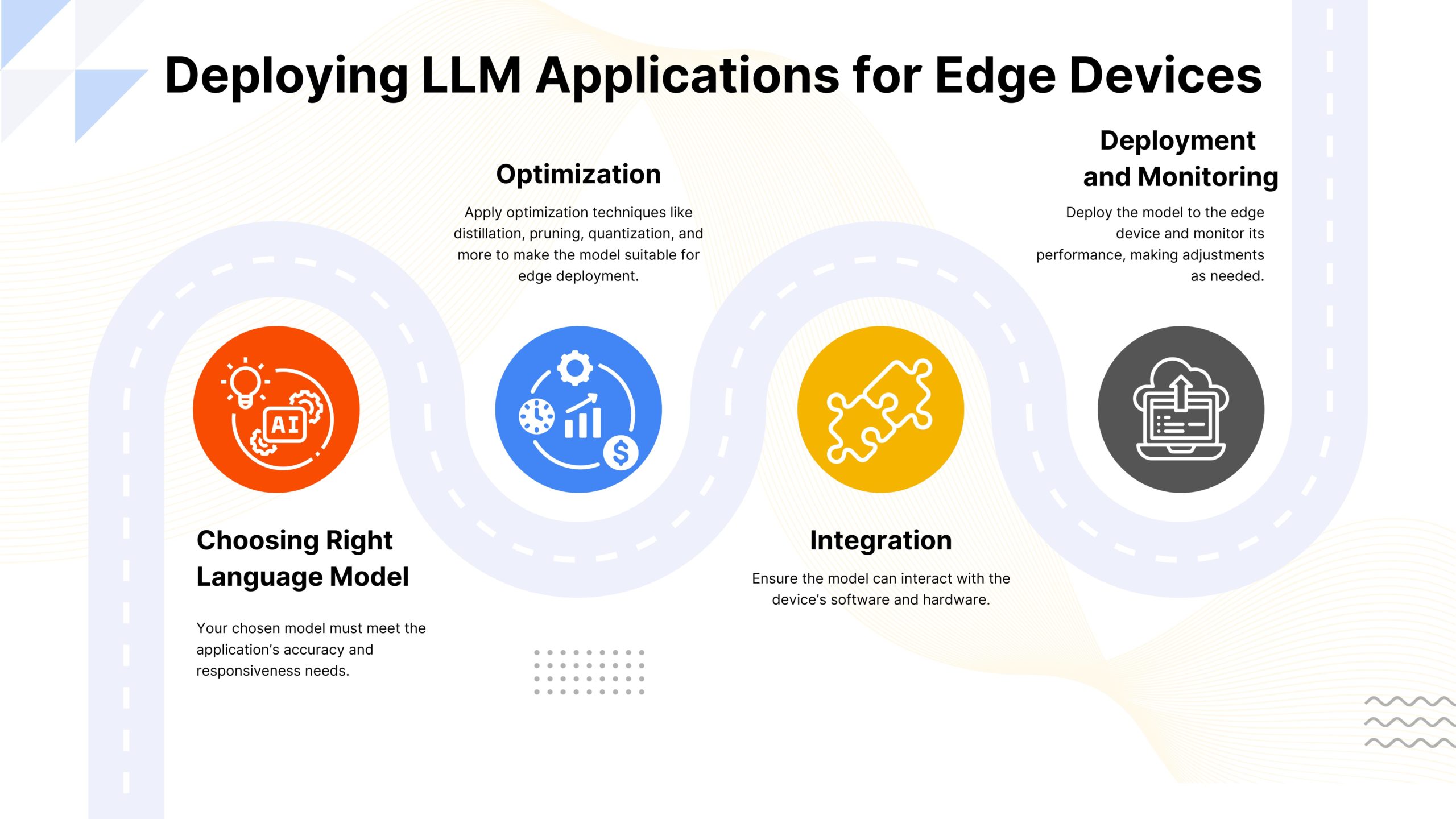

Deploying LLM Applications on Edge Devices

Want to build generative AI applications optimized for edge devices?

Here’s a roadmap of the key decisions you need to make along the way, including selecting the right model, applying optimization techniques, implementing effective deployment strategies, and more.

Read: Roadmap to Deploy LLM Applications on Edge Devices
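One reason model optimization matters so much on edge devices comes down to simple arithmetic: the memory needed just to hold a model’s weights is roughly the number of parameters times the bytes per parameter. The Python sketch below works this out for a hypothetical 3-billion-parameter model at a few common precisions. It is a back-of-the-envelope estimate only; it ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 3_000_000_000  # hypothetical 3B-parameter model
    for bits in (32, 16, 8, 4):
        # Prints 12.0, 6.0, 3.0, and 1.5 GB respectively.
        print(f"{bits:>2}-bit weights: {weight_memory_gb(params, bits):.1f} GB")
```

Quantizing from 16-bit to 4-bit weights shrinks the estimated footprint from about 6 GB to about 1.5 GB, which shows why quantization features so prominently when targeting phones and laptops.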

Tutorial – Generative AI: From Proof of Concept to Production

In this tutorial, Denys Linkov demonstrates how to take AI applications from proof of concept to production.

He covers key strategies including leveraging Retrieval-Augmented Generation (RAG), evaluating large language models, and developing compelling business cases for AI implementation.

To connect with LLM and data science professionals, join our Discord server now!

Podcast – Building a Multi-million Dollar AI Business

Launching a startup is tough, especially when you need to stand out in a crowded market. In this episode, Raja Iqbal, Founder and CEO of Data Science Dojo, talks with Bob van Luijt, Co-founder and CEO of Weaviate.

Bob shares his journey from a childhood fascinated by technology to starting his own company. They discuss the highs and lows of entrepreneurship, building a culture of growth, making critical decisions, and impressing investors to secure funding.

Tune in for valuable insights on building a successful startup.

Subscribe to our podcast on Spotify and stay in the loop with conversations from the front lines of LLM innovation!

Beyond Apple’s big launch, here’s what else happened in the AI sphere.

- NVIDIA releases powerful open-source LLMs for research and commercial use. Read more
- Alibaba releases Qwen2, its new advanced model, with stronger multilingual understanding and better performance than its predecessors. Read more
- Stability AI releases Stable Diffusion 3 Medium, a powerful and accessible text-to-image model. Read more
- NVIDIA tops Microsoft as the most valuable public company. Read more
- Apple Developer Academy introduces AI training for all students and alumni. Read more