AI security is the primary bottleneck preventing the widespread adoption of AI in enterprises, particularly due to the need for robust data and intellectual property governance.

According to a report from IBM, 75% of global business leaders see AI security and ethics as crucial, and 82% believe trust and transparency in AI can set them apart.

Let’s inspect the AI situation today. The landscape is filled with challenges related to data leakage, generating harmful information from AI models, biased or incorrect outputs, and more.

While the risks of generative AI are massive, they can be overcome!

In this week’s dispatch, we’ll dive into the challenges and explore key practices for creating safe and reliable AI applications.

Data Security Risks of Large Language Model Applications

Enterprise LLM applications are easy to imagine and build a demo out of, but challenging to turn into a reliable business application.

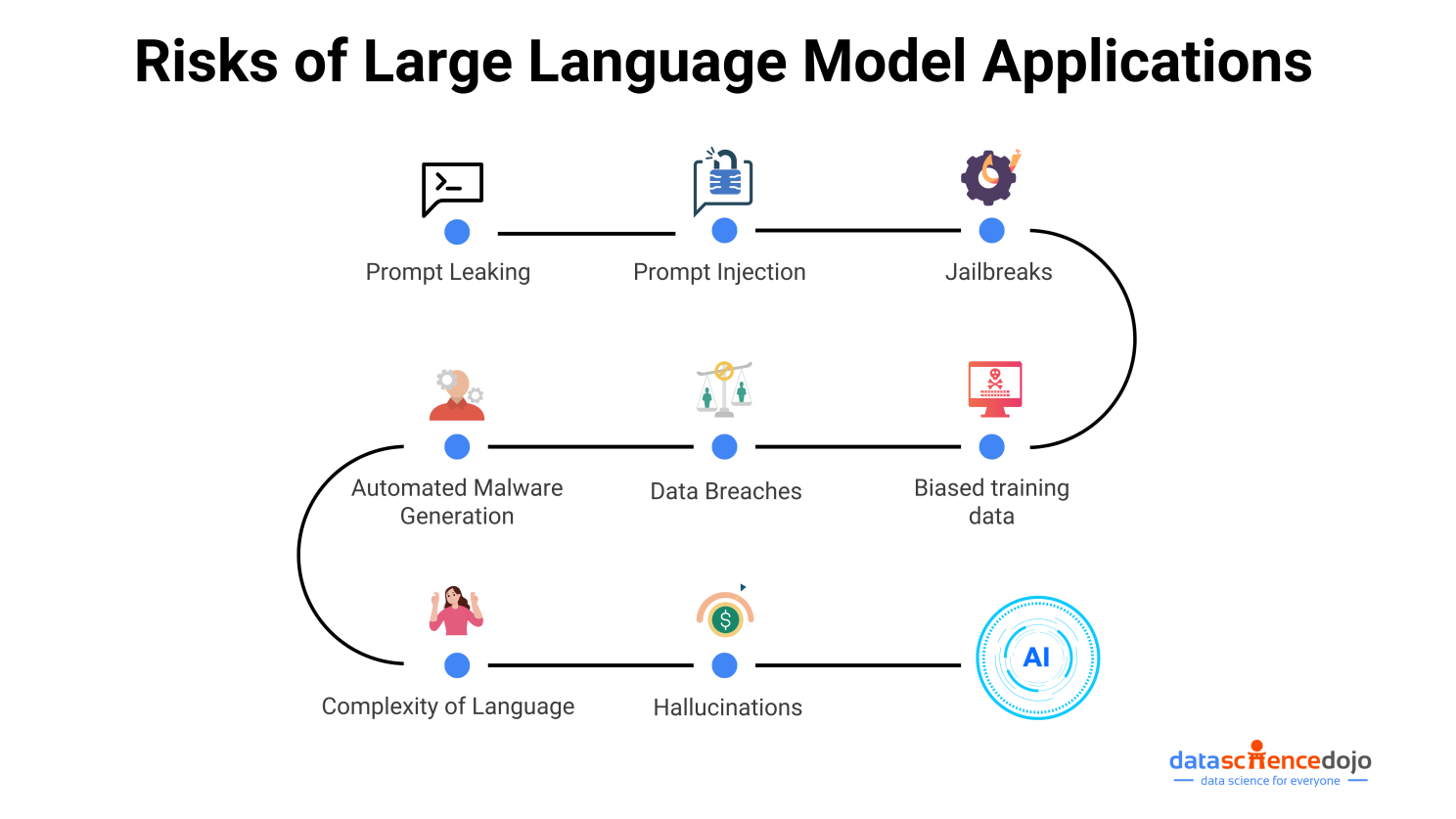

The fragility of prompts, jailbreaking attempts, leakage of personally identifiable information, inaccurate or biased outputs, and repeatability are some of the problems faced during product development.

Hence, if LLM applications aren’t built with the principles of AI governance, they can screw up. Much like how they’ve done so in the past. Cue Tay chatbot blunder by Microsoft.

Here are some major risks of large language models today.

Dive Deeper: 6 Major Challenges of Large Language Models (LLMs)

Integrating AI Governance into LLM Development

AI governance refers to the frameworks, rules, and guardrails that ensure artificial intelligence tools and systems are developed and used safely and ethically.

Integrating AI governance principles with security measures creates a cohesive development strategy by ensuring that ethical standards and security protections work together. This approach ensures that AI systems are not only technically secure but also ethically sound, transparent, and trustworthy.

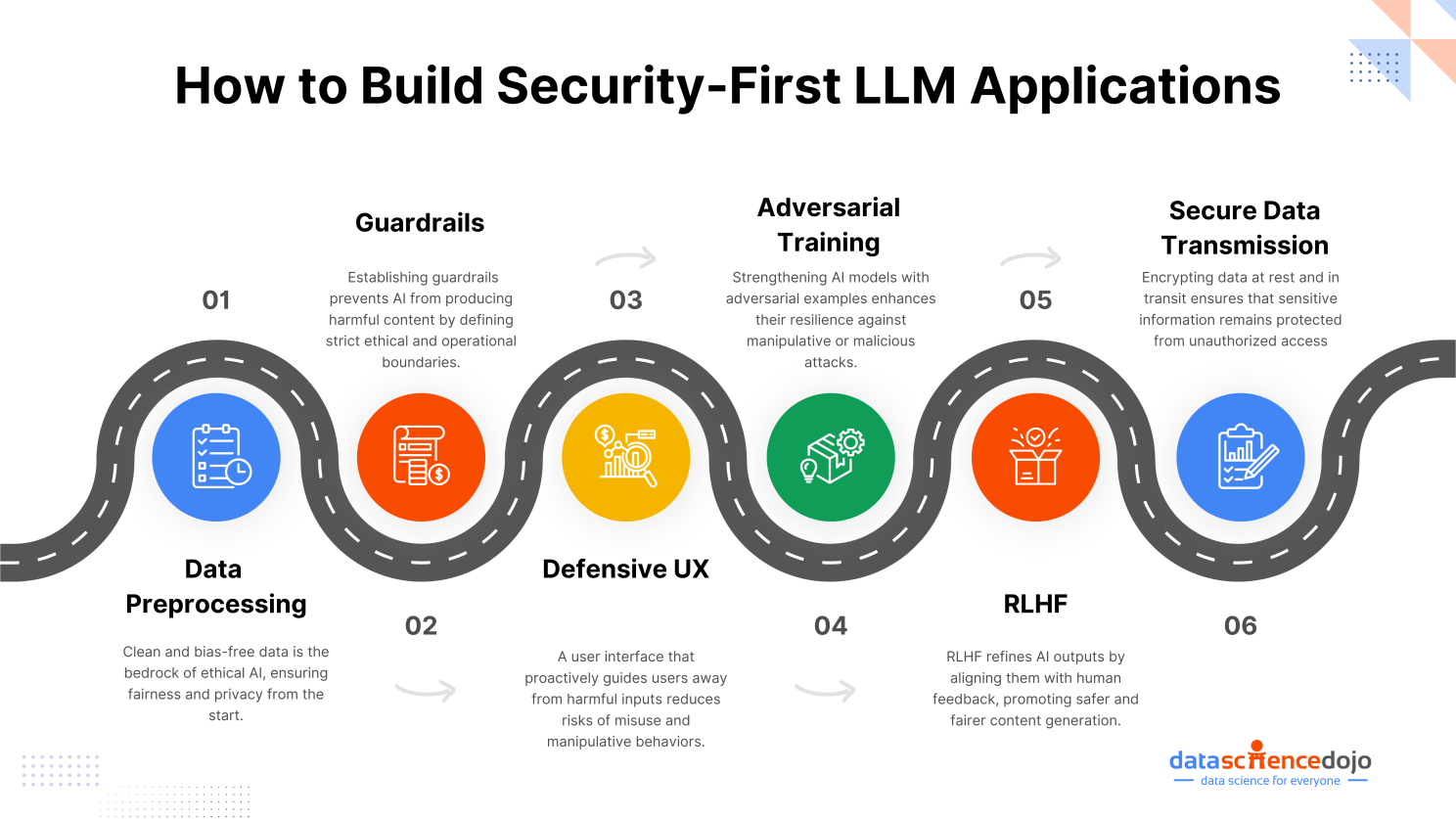

Roadmap to Build LLM Applications that Prioritize AI Security

To build secure and ethical LLM applications, developers must implement a multifaceted approach. This involves careful data preparation, training models to be resilient against adversarial attacks, and ensuring the alignment of AI outputs with human values. By prioritizing these steps, developers can create AI systems that are both powerful and responsible.

Here’s a roadmap discussing the best practices to ensure your application is ready for a wider audience.

Dive Deeper: How to Build Secure LLM Apps with AI Governance at Their Core

Practitioner’s Insights: Major Challenges in Building AI Applications

While tools like ChatGPT seem to effortlessly generate and summarize content, creating custom models for specific business needs is proving to be more complex than initially anticipated.

Raja Iqbal, Chief Data Scientist at Data Science Dojo has led his team of talented data scientists and software engineers in creating several important enterprise applications using large language models.

In this tutorial, he demonstrates the obstacles they encountered during development and how they successfully overcame them.

To connect with LLM and Data Science Professionals, join our discord server now!

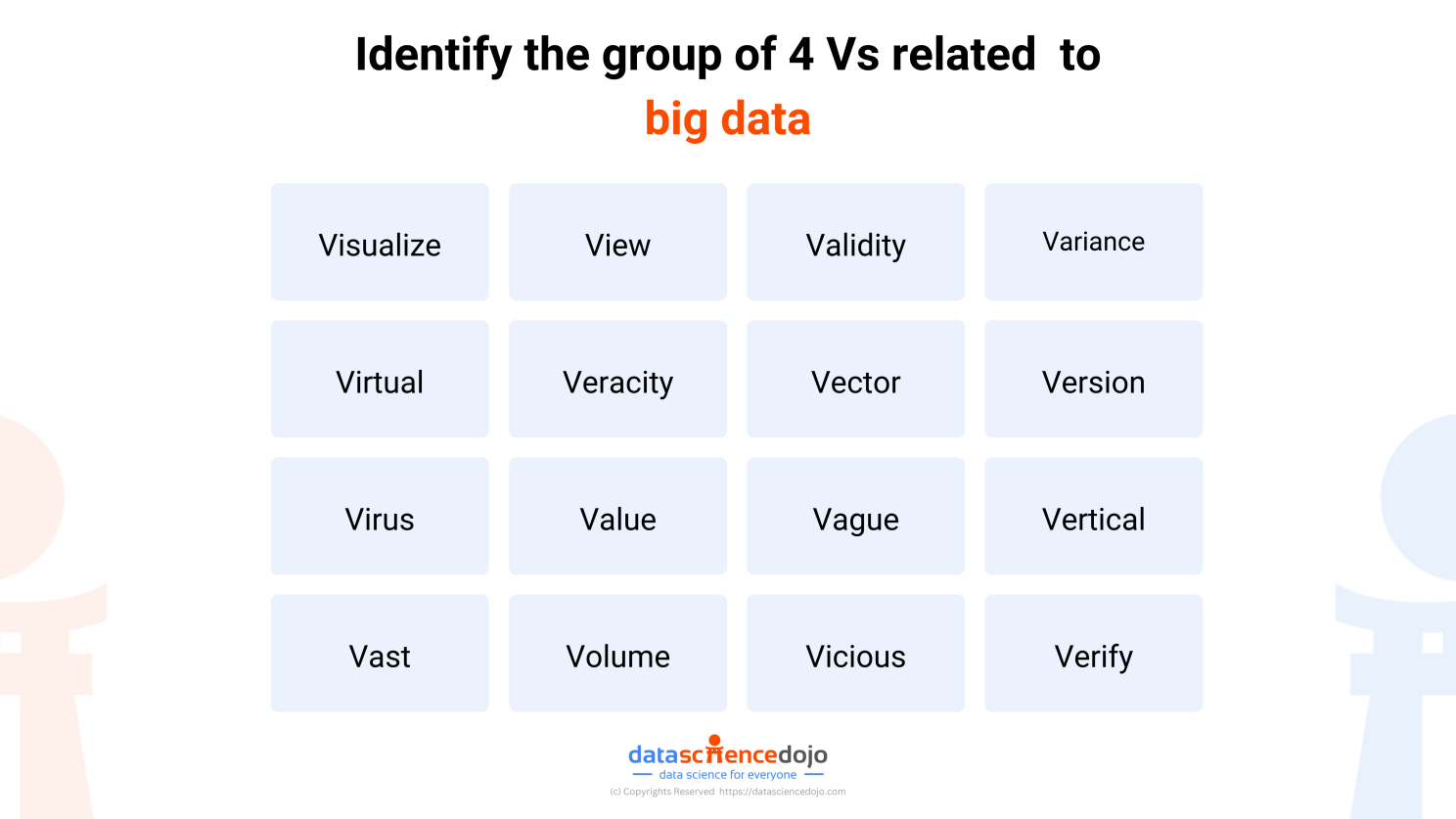

Pop Quiz! Answer the question in the comments at the end of the newsletter.

How to Start Your Journey to Master Large Language Models

The landscape of large language models is complex and the architecture of building LLM applications is still emerging.

If you’re new to LLMs, navigating the landscape can be overwhelming. To help you get started on your journey, we’ve curated two newsletter features designed to guide you through the complexities of LLM architecture and application development.

Part 1: Journey to LLM Expertise – Dominating 9 Essential Domains

The first part of the series goes over a comprehensive roadmap that covers all the domains that you need to learn to take complete hold of LLMs.

Part 2: Journey to LLM Expertise – Leading Large Language Models Courses

The second part of the series goes over industry-leading courses in each domain that you need to master. It also features Data Science Dojo’s LLM Bootcamp, which helps you learn how to build LLM applications in just 40 hours.

Journey to LLM Expertise: Leading Large Language Models Courses

Finally, let’s end this week with the most interesting AI news for you!

- Federal prosecutors are seeking a divestment of Google’s multibillion-dollar online advertising business, saying its monopoly power harms advertisers and publishers. Read more

- AI21 Labs released Jamba 1.5, a model that generates tokens faster than current transformers, especially when processing long inputs. Read more

- OpenAI co-founder Ilya Sutskever’s new safety-focused AI startup SSI raises $1 billion. Explore more

- Google DeepMind’s AlphaProteo generates novel proteins for biology and health research. Dive deeper

- Argentina’s plan to fight crime with AI draws concerns from rights groups. Explore now