Welcome to Data Science Dojo’s weekly AI newsletter, “The Data-Driven Dispatch”.

The generative capabilities of AI shocked the world in 2023 as tools like ChatGPT went mainstream. However, the world quickly got used to the idea that AI can now write, code, and solve problems the way humans do.

But when it comes to AI-generated music, the concept is harder to digest, isn’t it?

Music has more than a single dimension. You require different instruments, tones, and vocals to create a good piece.

Moreover, humans tend to be more sensitive toward auditory information and catch the slightest of mistakes.

How, then, can AI ace such a challenging domain? More importantly, how will it fit into the bigger picture, where music creation is considered an art form that is deeply personal to humans?

Let’s answer these questions for you!

Music’s Midjourney Moment Is Here

In April 2023, the music generation scene got a major jolt when Ghostwriter’s AI-generated track, “Heart on My Sleeve,” went viral, showcasing AI’s potential to produce convincing, high-quality music.

This event was just the beginning of a rapid evolution in AI-generated music, with new models and tools being rolled out at an astonishing rate.

The market for generative AI in music is projected to explode, jumping from a modest USD 294 million in 2023 to a massive USD 2,660 million by 2032.

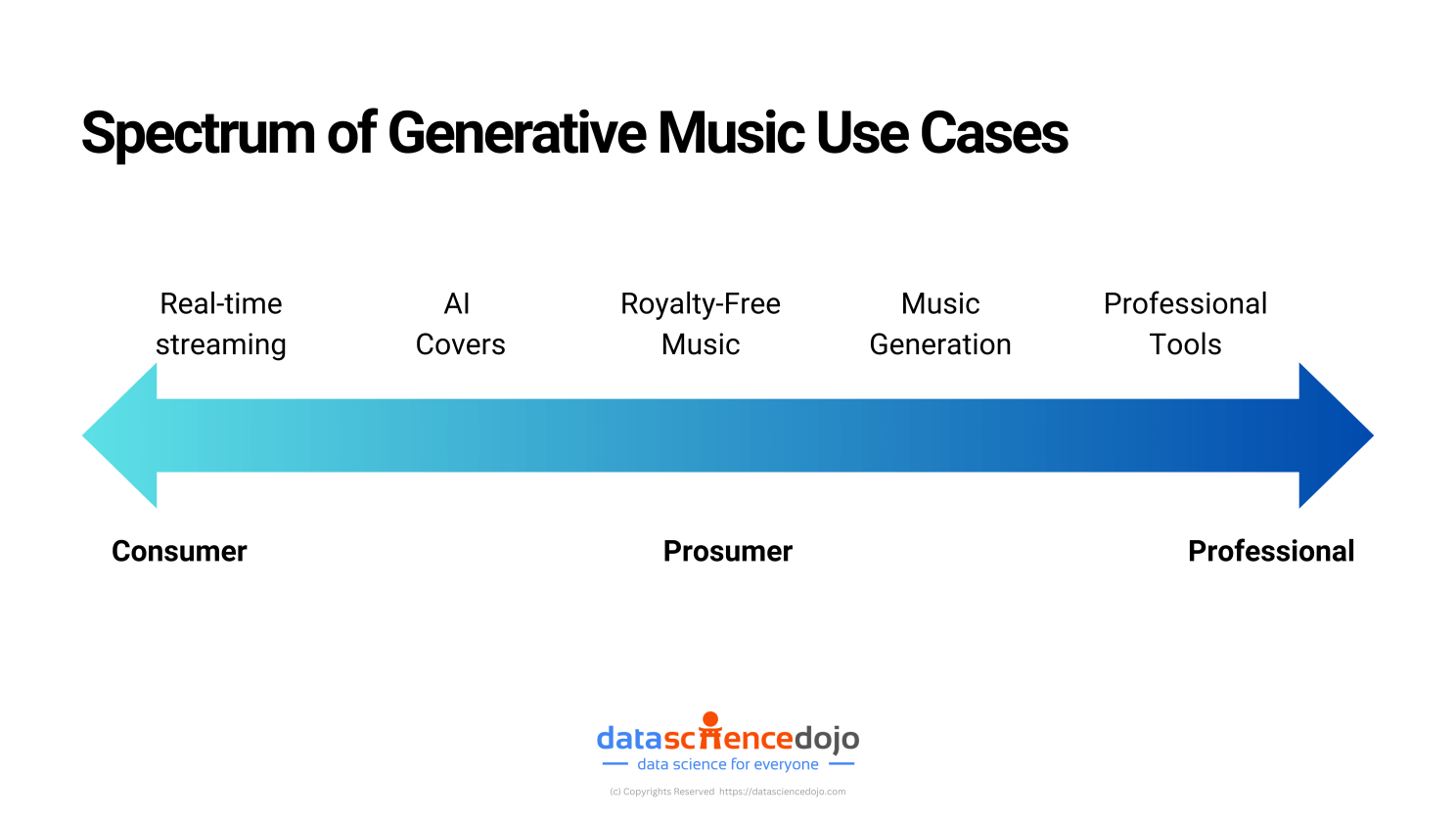
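To put the forecast above in perspective, here is a minimal sketch that converts the two quoted figures into a growth multiple and an implied compound annual growth rate (CAGR). The CAGR framing and the nine-year compounding window (2023 to 2032) are assumptions made for illustration; the source forecast only gives the start and end values.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1


# USD millions, taken from the forecast quoted in the text.
start_musd, end_musd = 294.0, 2660.0

# Assumption: nine full compounding periods between 2023 and 2032.
years = 2032 - 2023

growth_multiple = end_musd / start_musd
cagr = implied_cagr(start_musd, end_musd, years)

print(f"Growth multiple: {growth_multiple:.1f}x")
print(f"Implied CAGR: {cagr:.1%}")
```

Under these assumptions, the market grows roughly ninefold, which works out to a little under 28% growth per year.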

Hence, we will see widespread adoption of AI-generated music tools, from enterprises that use them to create royalty-free music for advertisements, podcasts, and more, to individuals who want to bring their creative music ideas to life.

It is a Midjourney moment for music because these tools make it easy for anyone to create all sorts of music.

Tools to Produce AI-Generated Music

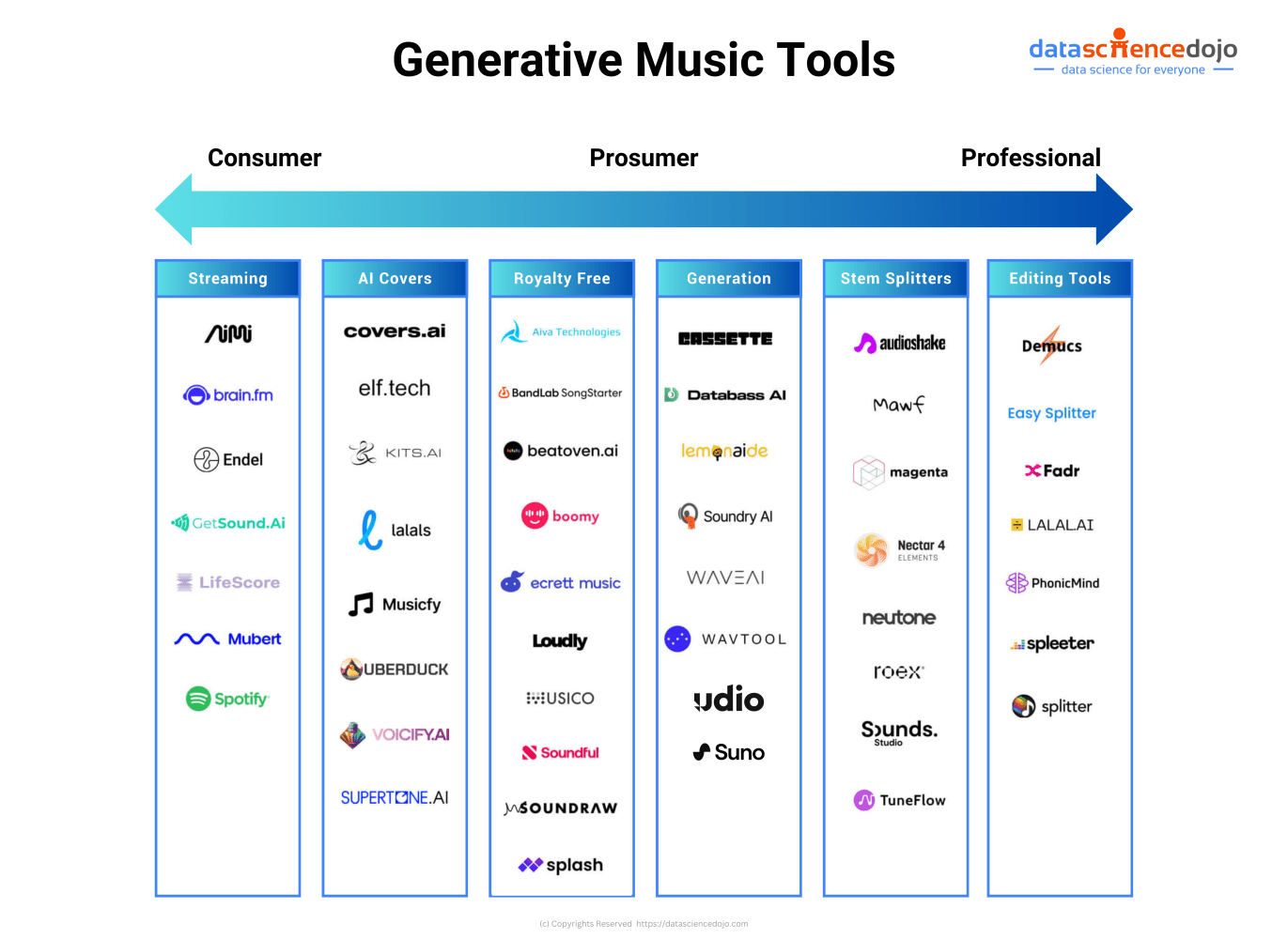

We are already seeing a rise in generative tools that can assist with everything from creating background music to producing full-fledged songs based on prompts.

AI music generators such as Suno AI and Udio have recently gained immense popularity because of features like letting users extend their own songs, based on prompts, to as long as 15 minutes.

Read more: Top 7 AI Music Generator Tools of 2024

Copyright Issues with AI-Generated Music

People view AI with skepticism and apprehension when it comes to creative work such as images, music, poetry, and more.

Several lawsuits are currently making their way through the courts, such as Sony’s lawsuit against Suno and Udio, which will likely impact the future of AI-generated music. These lawsuits include cases alleging that artists’ works were used to train AI systems without their knowledge or consent.

Fair Use and Licensing Issues:

There is an ongoing debate about whether training AI models on copyrighted works constitutes “fair use.”

Proponents of allowing AI-generated music in creative fields argue that AI learns much as humans do. We are exposed to vast amounts of information and use that knowledge to create new and original works. Shouldn’t AI be afforded the same ability?

The counter-argument centers around the concept of AI as a tool. Imagine building a house: you wouldn’t be denied a hammer or saw because they can be misused. However, if that hammer is used to damage property, the blame falls on the person wielding it. Similarly, copyright infringement shouldn’t be blamed on the AI, but on the way it’s prompted or used.

The debate hinges on how we view AI. Is it a tool to be used and directed by humans, or a separate entity with its own rights and limitations? The answer will likely determine how copyright law is applied to AI-generated creations in the future.

Read more: Future of AI Innovation at the Mercy of Regulation

How Does AI-Powered Music Generation Work?

Companies such as Meta, Google, Stability AI, and Suno AI are building powerful foundation models for music generation.

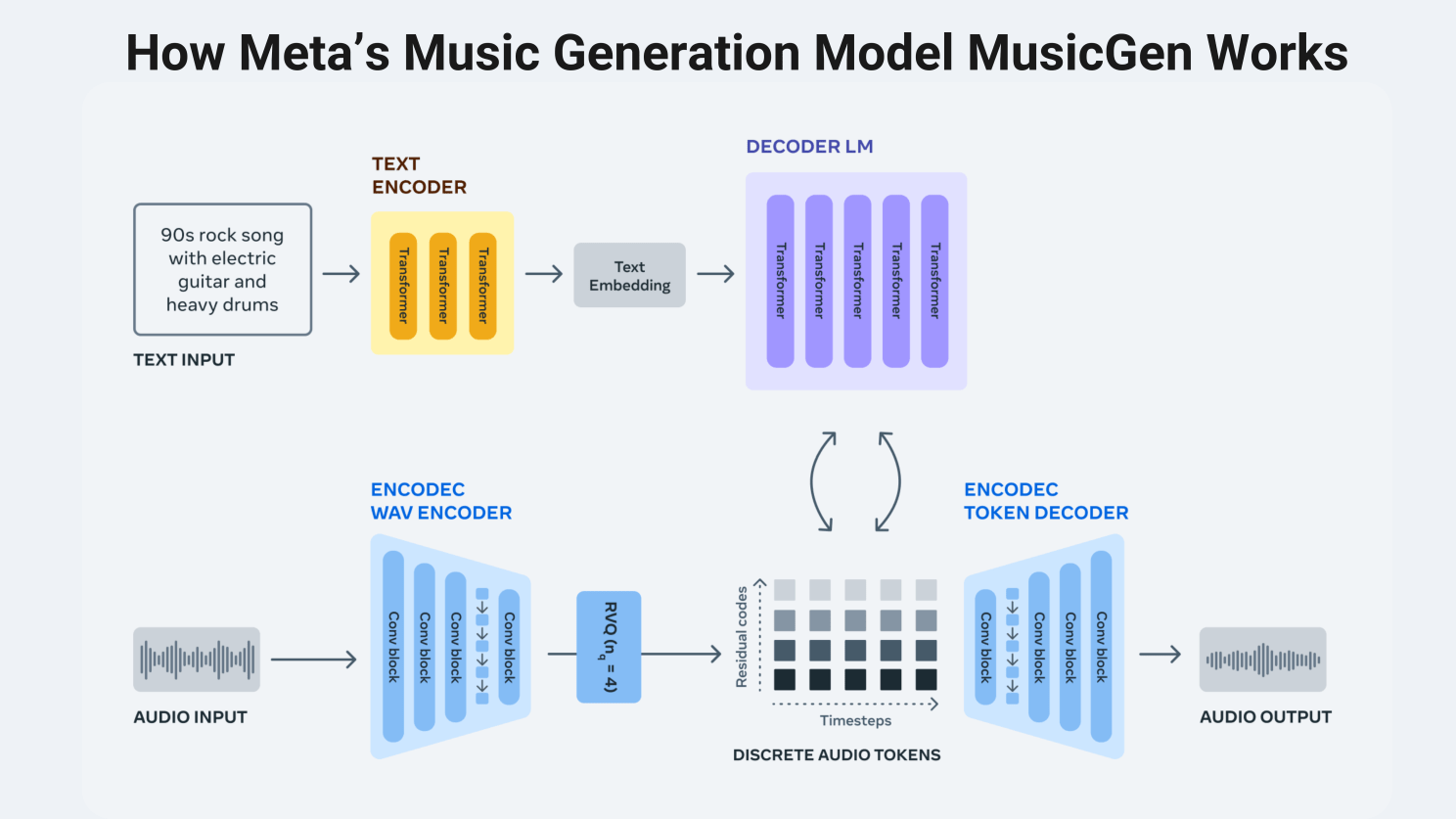

These models leverage deep learning techniques to generate music based on textual descriptions. The architecture of these models generally involves several components designed to interpret text and convert it into musical parameters such as melody, harmony, rhythm, and instrumentation.

Here’s how Meta’s text-to-music model, MusicGen, generates music.

Dive Deeper: Understand the Architecture of 5 Best Music-Generation Foundation Models
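For readers who want to try text-to-music generation hands-on, here is a minimal sketch using the MusicGen integration in the Hugging Face `transformers` library. Treat it as an illustration under stated assumptions rather than Meta’s reference pipeline: the `facebook/musicgen-small` checkpoint, the helper names `tokens_for_seconds` and `generate_clip`, and the frame rate of about 50 audio tokens per second are choices made for this example.

```python
import math

# Assumption: MusicGen emits roughly 50 audio-token frames per second of audio.
MUSICGEN_FRAME_RATE_HZ = 50


def tokens_for_seconds(seconds: float, frame_rate_hz: int = MUSICGEN_FRAME_RATE_HZ) -> int:
    """Estimate how many new tokens to request for a clip of the given length."""
    return math.ceil(seconds * frame_rate_hz)


def generate_clip(prompt: str, seconds: float = 5.0,
                  checkpoint: str = "facebook/musicgen-small"):
    """Generate a short clip from a text prompt (hypothetical helper).

    Returns a 1-D NumPy waveform and its sampling rate.
    """
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import AutoProcessor, MusicgenForConditionalGeneration

    processor = AutoProcessor.from_pretrained(checkpoint)
    model = MusicgenForConditionalGeneration.from_pretrained(checkpoint)

    # The processor tokenizes the text description for the model's text encoder.
    inputs = processor(text=[prompt], padding=True, return_tensors="pt")

    # The decoder samples audio tokens, which the audio codec turns into a waveform.
    audio = model.generate(
        **inputs,
        do_sample=True,
        max_new_tokens=tokens_for_seconds(seconds),
    )

    sampling_rate = model.config.audio_encoder.sampling_rate
    # Output shape is (batch, channels, samples); keep the first clip and channel.
    return audio[0, 0].cpu().numpy(), sampling_rate
```

Calling `generate_clip("lo-fi hip hop beat with warm piano chords")` downloads the checkpoint on first use and returns a waveform you can save or play back. The prompt is only an example; longer clips need proportionally more tokens and more compute.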

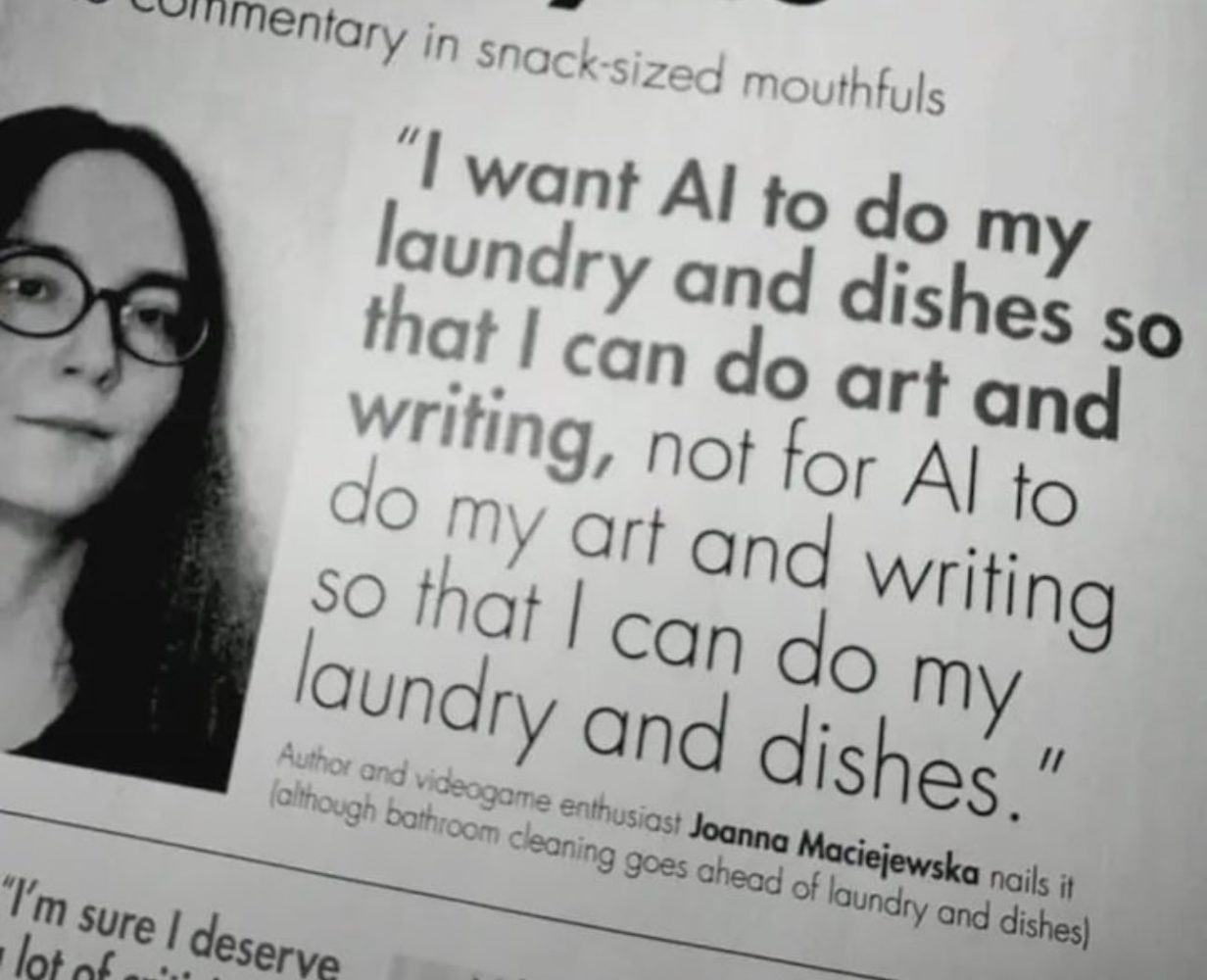

Is AI going in the direction we all wished for?

Sora AI: Explore Ethics, AI, and Personalization

How will AI-driven video and audio generation change contemporary society? Is it ethical? How will it impact jobs?

If similar questions are on your mind, join this informative live session led by Leonard Rodman. In it, you will:

- Dive into the exciting advancements in AI-powered video

- Explore how it is revolutionizing both content creation and consumption

- Understand the complexities surrounding deepfakes and other AI-generated content

- Witness live demonstrations of user-friendly tools like Sora

Excited? Book your seat for the most-awaited talk now.

Get Started with Language Models: Comprehensive YouTube Playlist

If you’re eager to learn about the emerging architecture around language model applications that produce text, audio, and video, this playlist can help.

It comprises lectures that start from the very basics, like embeddings, the transformer architecture, and fine-tuning, and go all the way to practical tutorials on how to deploy LLM applications and make them scalable and robust.

To connect with LLM and data science professionals, join our Discord server now!

Finally, let’s end the dispatch with everything that happened in the AI sphere this week.

- Sony Music, Universal Music Group, and Warner Records are suing AI start-ups Suno and Udio for copyright infringement. Read more

- Amazon is prepping ChatGPT rival ‘Metis’ AI chatbot. Read more

- OpenAI acquires the team behind Multi, formerly Remotion, a startup focused on screen sharing and collaboration. Read more

- The U.S. clears the way for antitrust inquiries of Nvidia, Microsoft, and OpenAI. Read more