Artificial intelligence is evolving at an unprecedented pace, and large concept models (LCMs) represent the next big step in that journey. While large language models (LLMs) such as GPT-4 have revolutionized how machines generate and interpret text, LCMs go further: they are built to represent, connect, and reason about high-level concepts across multiple forms of data. In this blog, we’ll explore the technical underpinnings of LCMs, including their architecture, components, and capabilities, and examine how they are shaping the future of AI.

Technical Overview of Large Concept Models

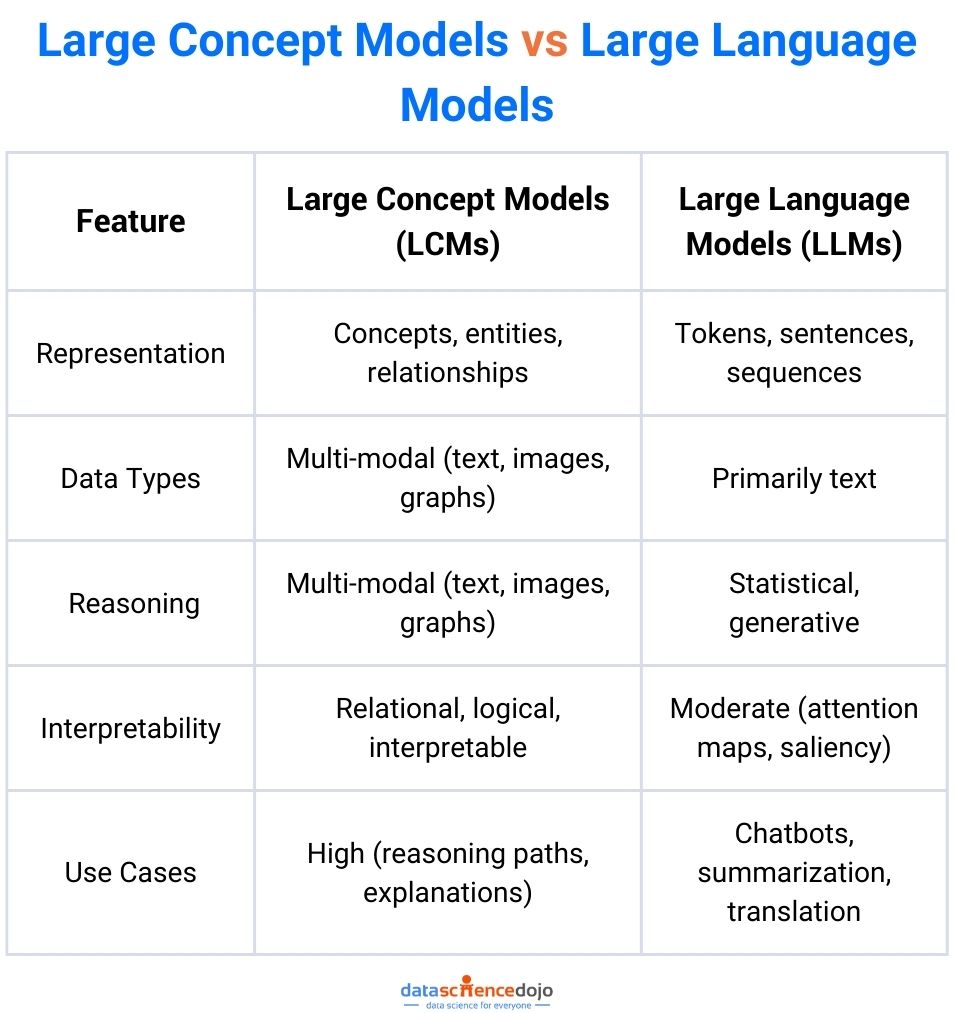

Large concept models (LCMs) are advanced AI systems designed to represent and reason over abstract concepts, relationships, and multi-modal data. Unlike LLMs, which primarily operate in the token or sentence space, LCMs focus on structured representations—often leveraging knowledge graphs, embeddings, and neural-symbolic integration.

Key Technical Features:

1. Concept Representation:

Large Concept Models encode entities, events, and abstract ideas as high-dimensional vectors (embeddings) that capture semantic and relational information.

2. Knowledge Graph Integration:

These models use knowledge graphs, where nodes represent concepts and edges denote relationships (e.g., “insulin resistance” —is-a→ “metabolic disorder”). This enables multi-hop reasoning and relational inference.

3. Multi-Modal Learning:

Large Concept Models process and integrate data from diverse modalities—text, images, structured tables, and even audio—using specialized encoders for each data type.

4. Reasoning Engine:

At their core, Large Concept Models employ neural architectures (such as graph neural networks) and symbolic reasoning modules to infer new relationships, answer complex queries, and provide interpretable outputs.

5. Interpretability:

Large Concept Models are designed to trace their reasoning paths, offering explanations for their outputs—crucial for domains like healthcare, finance, and scientific research.
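To make the knowledge graph feature concrete, here is a minimal sketch of storing concepts as triples and following "is-a" edges across several hops. Only the first triple comes from the example above; the other triples and the helper names `build_graph` and `ancestors` are assumptions made for illustration, not part of any particular LCM implementation.

```python
from collections import defaultdict

# Illustrative triples; only the first one appears in the article.
TRIPLES = [
    ("insulin resistance", "is-a", "metabolic disorder"),
    ("metabolic disorder", "is-a", "disease"),
    ("type 2 diabetes", "associated-with", "insulin resistance"),
]

def build_graph(triples):
    """Index triples as graph[head][relation] -> set of tails."""
    graph = defaultdict(lambda: defaultdict(set))
    for head, relation, tail in triples:
        graph[head][relation].add(tail)
    return graph

def ancestors(graph, concept, relation="is-a"):
    """Follow `relation` edges transitively (multi-hop) starting at `concept`."""
    seen, stack = set(), [concept]
    while stack:
        node = stack.pop()
        for parent in graph[node][relation]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen

graph = build_graph(TRIPLES)
print(sorted(ancestors(graph, "insulin resistance")))
```

The second hop ("metabolic disorder" to "disease") is never stated directly for "insulin resistance"; it is inferred by chaining edges, which is the essence of multi-hop reasoning.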

Architecture and Components

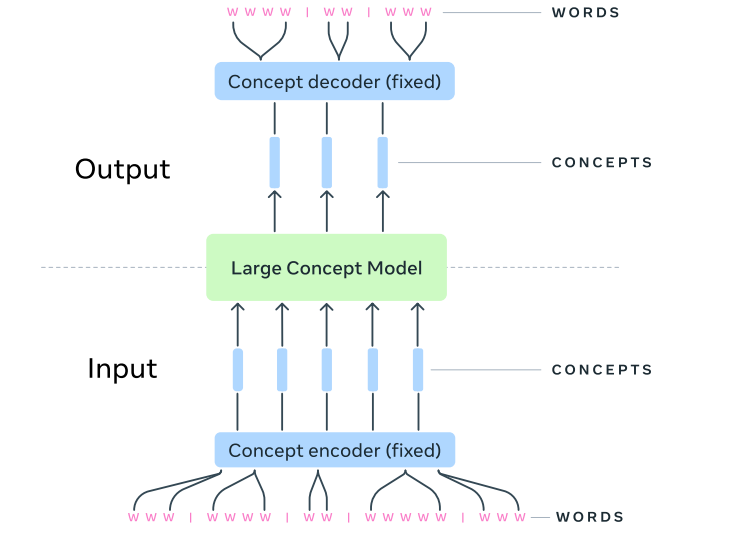

source: https://arxiv.org/pdf/2412.08821

A large concept model (LCM) is not a single monolithic network but a composite system that integrates multiple specialized components into a reasoning pipeline. Its architecture typically blends neural encoders, symbolic structures, and graph-based reasoning engines, working together to build and traverse a dynamic knowledge representation.

Core Components

1. Input Encoders

- Text Encoder: Transformer-based architectures (e.g., BERT, T5, GPT-like) that map words and sentences into semantic embeddings.
- Vision Encoder: CNNs, vision transformers (ViTs), or CLIP-style dual encoders that turn images into concept-level features.
- Structured Data Encoder: Tabular encoders or relational transformers for databases, spreadsheets, and sensor logs.
- Audio/Video Encoders: Sequence models (e.g., conformers) or multimodal transformers to process temporal signals.

These encoders normalize heterogeneous data into a shared embedding space where concepts can be compared and linked.
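The idea of a shared embedding space can be sketched in a few lines. In the snippet below, random matrices stand in for trained projection heads, and the feature vectors are made-up encoder outputs; the dimension `SHARED_DIM` and all function names are assumptions for illustration only.

```python
import math
import random

SHARED_DIM = 4  # assumed size of the shared concept space

def make_projection(in_dim, out_dim, seed):
    """Random linear map standing in for a trained projection head."""
    rng = random.Random(seed)
    return [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(out_dim)]

def project(matrix, vector):
    """Apply the linear map, then L2-normalize so vectors are comparable."""
    out = [sum(w * x for w, x in zip(row, vector)) for row in matrix]
    norm = math.sqrt(sum(v * v for v in out)) or 1.0
    return [v / norm for v in out]

def cosine(a, b):
    """For unit vectors, cosine similarity is just the dot product."""
    return sum(x * y for x, y in zip(a, b))

# Made-up raw encoder outputs with different native sizes.
text_features = [0.2, -0.1, 0.7, 0.4, 0.0, 0.3]  # e.g. from a text encoder
image_features = [0.9, 0.1, -0.3]                 # e.g. from a vision encoder

text_proj = make_projection(6, SHARED_DIM, seed=0)
image_proj = make_projection(3, SHARED_DIM, seed=1)

text_concept = project(text_proj, text_features)
image_concept = project(image_proj, image_features)
print(len(text_concept), len(image_concept), cosine(text_concept, image_concept))
```

Because the projections here are random, the similarity value itself is meaningless. In a trained system the projections would be learned so that related concepts from different modalities land close together.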

2. Concept Graph Builder

- Constructs or updates a knowledge graph where nodes = concepts and edges = relations (hierarchies, causal links, temporal flows).
- May rely on graph embedding techniques (e.g., TransE, RotatE, ComplEx) or schema-guided extraction from raw text.
- Handles dynamic updates, so the graph evolves as new data streams in (important for enterprise or research domains).
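Of the graph embedding techniques mentioned above, TransE is the simplest to illustrate: it treats a relation as a translation, so a triple is plausible when head + relation lands close to tail. The sketch below uses hand-picked 2-d vectors (an assumption for readability; real embeddings are learned and much larger).

```python
import math

def transe_score(head, relation, tail):
    """TransE plausibility: negative L2 distance between head + relation and tail."""
    return -math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))

# Hand-picked toy embeddings, chosen so head + relation lands on the true tail.
emb = {
    "insulin resistance": [1.0, 0.0],
    "metabolic disorder": [1.0, 1.0],
    "stock market": [-2.0, 3.0],
}
is_a = [0.0, 1.0]

true_score = transe_score(emb["insulin resistance"], is_a, emb["metabolic disorder"])
false_score = transe_score(emb["insulin resistance"], is_a, emb["stock market"])
print(true_score, false_score)
```

A higher (less negative) score means a more plausible triple, so a graph builder could use such scores to rank candidate edges before adding them.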

3. Multi-Modal Fusion Layer

- Aligns embeddings across modalities into a unified concept space.
- Often uses cross-attention mechanisms (as in Flamingo) so that, for example, an image of “insulin injection” links naturally with the textual concept of “diabetes treatment.”
- May incorporate contrastive learning (as in CLIP) to enforce consistency across modalities.
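The contrastive objective mentioned in the fusion layer can be sketched with an InfoNCE-style loss. This is a simplified, one-directional version (image to text) written in plain Python; CLIP-style training averages the loss over both directions. The embeddings and the `temperature` value are illustrative assumptions.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def info_nce(anchors, positives, temperature=0.1):
    """Mean cross-entropy where anchors[i] should match positives[i];
    every other positive in the batch acts as a negative."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [dot(anchor, p) / temperature for p in positives]
        max_logit = max(logits)  # subtract the max for numerical stability
        log_denominator = max_logit + math.log(
            sum(math.exp(l - max_logit) for l in logits)
        )
        total += log_denominator - logits[i]
    return total / len(anchors)

# Toy unit-length embeddings for two image/text pairs.
image_embs = [[1.0, 0.0], [0.0, 1.0]]
aligned_text = [[1.0, 0.0], [0.0, 1.0]]     # each text matches its image
misaligned_text = [[0.0, 1.0], [1.0, 0.0]]  # pairs are swapped

aligned_loss = info_nce(image_embs, aligned_text)
misaligned_loss = info_nce(image_embs, misaligned_text)
print(aligned_loss, misaligned_loss)
```

The loss is near zero when matching pairs are already close and large when they are swapped, which is the pressure that pulls, say, an image and its textual concept toward the same region of the space.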

4. Reasoning and Inference Module

- The “brain” of the Large Concept Model, combining graph neural networks (GNNs), differentiable logic solvers, or neural-symbolic hybrids.
- Capabilities:
  - Multi-hop reasoning (chaining concepts together across edges).
  - Constraint satisfaction (ensuring logical consistency).
  - Query answering (traversing the concept graph like a database).
- Advanced Large Concept Models use hybrid architectures: neural nets propose candidate reasoning paths, while symbolic solvers validate logical coherence.
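The propose-then-validate pattern can be sketched as follows. Here an exhaustive path search stands in for the neural proposer, and a simple rule check stands in for the symbolic solver; the graph, the relation names, and the "forbidden relation" rule are all hypothetical placeholders.

```python
# Hypothetical concept graph: head -> list of (relation, tail) edges.
GRAPH = {
    "drug A": [("treats", "condition X"), ("contraindicated-in", "condition Y")],
    "condition X": [("is-a", "chronic disease")],
    "condition Y": [("is-a", "chronic disease")],
}

def propose_paths(graph, start, goal, max_hops=3):
    """Enumerate acyclic paths from start to goal (stand-in for a learned proposer)."""
    paths = []

    def walk(node, path, visited):
        if node == goal and path:
            paths.append(path)
            return
        if len(path) == max_hops:
            return
        for relation, tail in graph.get(node, []):
            if tail not in visited:
                walk(tail, path + [(node, relation, tail)], visited | {tail})

    walk(start, [], {start})
    return paths

def is_valid(path, forbidden_relations=frozenset({"contraindicated-in"})):
    """Symbolic check: reject any path that relies on a forbidden relation."""
    return all(relation not in forbidden_relations for _, relation, _ in path)

candidates = propose_paths(GRAPH, "drug A", "chronic disease")
validated = [path for path in candidates if is_valid(path)]
print(len(candidates), len(validated))
```

Both candidate paths reach the goal, but only the one through "treats" survives validation. In a real system the proposer would rank paths with learned scores rather than enumerate them all.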

5. Memory & Knowledge Store

- A persistent memory module maintains long-term conceptual knowledge.
- May be implemented as a vector database (e.g., FAISS, Milvus) or a symbolic graph store (e.g., an RDF triple store or a property graph database such as Neo4j).
- Crucial for retrieval-augmented reasoning, which combines stored knowledge with new inference.
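To show what retrieval from such a memory looks like, here is a tiny in-memory stand-in for a vector database. It does not use the FAISS or Milvus APIs; the class `TinyVectorStore`, its methods, and the stored vectors are assumptions made for illustration, and a production store would use approximate nearest-neighbor indexes rather than brute force.

```python
import math

class TinyVectorStore:
    """In-memory stand-in for a vector database; brute-force cosine search."""

    def __init__(self):
        self._items = []  # list of (concept name, unit vector)

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(v * v for v in vector)) or 1.0
        return [v / norm for v in vector]

    def add(self, name, vector):
        self._items.append((name, self._normalize(vector)))

    def search(self, query, k=2):
        """Return the k stored concepts most similar to the query vector."""
        q = self._normalize(query)
        scored = [(sum(a * b for a, b in zip(q, vec)), name) for name, vec in self._items]
        scored.sort(reverse=True)
        return [(name, score) for score, name in scored[:k]]

store = TinyVectorStore()
store.add("diabetes treatment", [0.9, 0.1, 0.0])
store.add("insulin injection", [0.8, 0.3, 0.1])
store.add("market volatility", [0.0, 0.1, 0.9])

results = store.search([1.0, 0.2, 0.0], k=2)
print([name for name, _ in results])
```

The retrieved concepts can then be handed to the reasoning module as additional context, which is the retrieval-augmented step described above.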

6. Explanation Generator

- Traces reasoning paths through the concept graph and converts them into natural language or structured outputs.
- Uses attention visualizations, graph traversal maps, or natural language templates to make the inference process transparent.
- This interpretability is a defining feature of Large Concept Models compared to black-box LLMs.
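The natural language template approach is easy to sketch: each relation type gets a sentence pattern, and a reasoning path is rendered step by step. The templates, the fallback wording, and the function name `explain` are illustrative assumptions.

```python
# Illustrative templates: one sentence pattern per relation type.
TEMPLATES = {
    "is-a": "{head} is a kind of {tail}",
    "treats": "{head} treats {tail}",
}
FALLBACK = "{head} is related to {tail} ({relation})"

def explain(path):
    """Render a reasoning path (a list of triples) as a numbered explanation."""
    lines = []
    for step, (head, relation, tail) in enumerate(path, start=1):
        template = TEMPLATES.get(relation, FALLBACK)
        sentence = template.format(head=head, relation=relation, tail=tail)
        lines.append(f"{step}. {sentence}.")
    return "\n".join(lines)

path = [
    ("insulin resistance", "is-a", "metabolic disorder"),
    ("metabolic disorder", "is-a", "disease"),
]
print(explain(path))
```

Because every sentence maps back to exactly one edge in the graph, a reader can audit each step of the conclusion, which is harder to do with free-form generated text.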

Architectural Flow (Simplified Pipeline)

- Raw Input → Encoders → embeddings.
- Embeddings → Graph Builder → concept graph.
- Concept Graph + Fusion Layer → unified multimodal representation.
- Reasoning Module → inference over graph.
- Memory Store → retrieval of prior knowledge.
- Explanation Generator → interpretable outputs.

This layered architecture allows LCMs to scale across domains, adapt to new knowledge, and explain their reasoning—three qualities where LLMs often fall short.

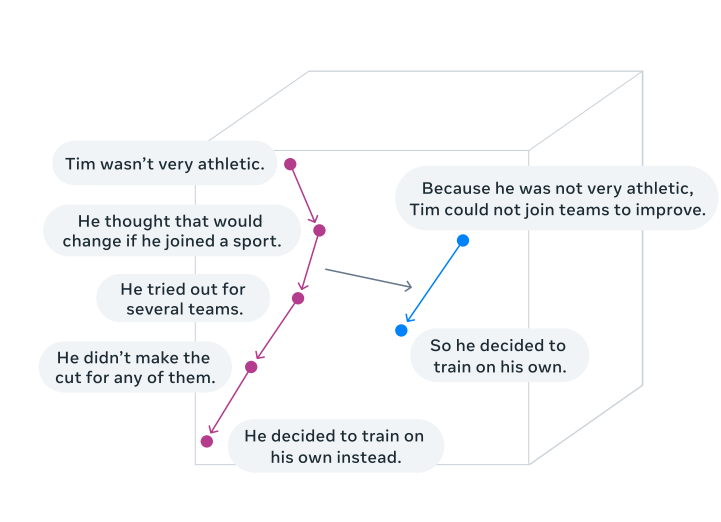

Think of a Large Concept Model as a super-librarian. Instead of just finding books with the right keywords (like a search engine), this librarian understands the content, connects ideas across books, and can explain how different topics relate. If you ask a complex question, the librarian doesn’t just give you a list of books; they walk you through the reasoning, showing how information from different sources fits together.

LCMs vs. LLMs: Key Differences

Real-World Applications

Healthcare:

Integrating patient records, medical images, and research literature to support diagnosis and treatment recommendations with transparent reasoning.

Enterprise Knowledge Management:

Building dynamic knowledge graphs from internal documents, emails, and databases for semantic search and compliance monitoring.

Scientific Research:

Connecting findings across thousands of papers to generate new hypotheses and accelerate discovery.

Finance:

Linking market trends, regulations, and company data for risk analysis and fraud detection.

Education:

Mapping curriculum, student performance, and learning resources to personalize education and automate tutoring.

Challenges and Future Directions

Data Integration:

Combining structured and unstructured data from multiple sources is complex and requires robust data engineering.

Model Complexity:

Building and maintaining large, dynamic concept graphs demands significant computational resources and expertise.

Bias and Fairness:

Ensuring that Large Concept Models provide fair and unbiased reasoning requires careful data curation and ongoing monitoring.

Evaluation:

Traditional benchmarks may not fully capture the reasoning and interpretability strengths of Large Concept Models.

Scalability:

Deploying LCMs at enterprise scale involves challenges in infrastructure, maintenance, and user adoption.

Conclusion & Further Reading

Large concept models represent a significant leap forward in artificial intelligence, enabling machines to reason over complex, multi-modal data and provide transparent, interpretable outputs. By combining technical rigor with accessible analogies, we can appreciate both the power and the promise of Large Concept Models for the future of AI.
