Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”.

For years, we human beings have taken pride in our intellect. Our ability to learn and grow has set us apart from everything else in this universe. But it seems that it will not only be humans who possess intellect.

We used to be amazed by LLMs that could understand and generate text. But now, there’s something even more impressive: multimodal models like GPT-4V and Gemini. These models can understand not just text, but also images, sounds, and other types of information.

Why is this a big deal? It’s simple: these models are now closer to thinking like us. For example, combining words and pictures helps them get better at understanding space and shapes, something that was hard for them before.

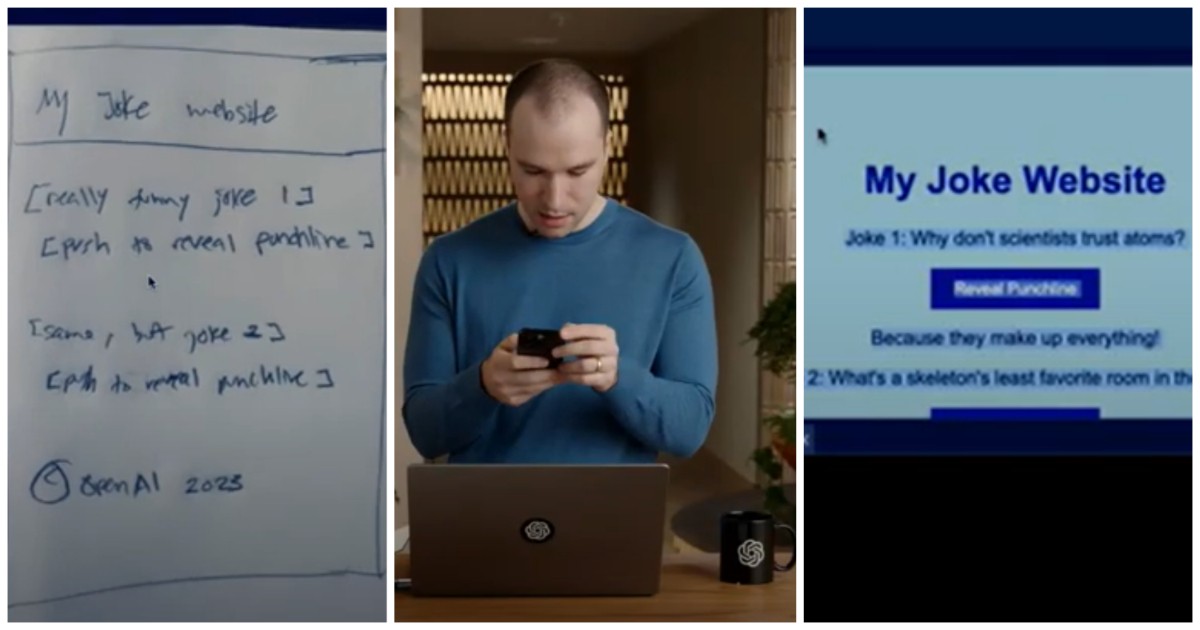

With multimodal tech, a pencil sketch is all it takes – and bam – you’ve got a whole website’s code. That’s the level of power we’re dealing with!

Want to dig deeper into the power of multimodal AI models? Come along!

Imagine this: Your room suddenly starts shaking, and everything trembles. Then, in a flash, your mom bursts in, her words cutting through the chaos: “Earthquake! We need to get out!” In that split second, you’ve processed a whirlwind of sensory inputs – vision, hearing, touch – leading you to one critical conclusion: Evacuation is imperative.

That’s multimodality in action! It’s the art of synthesizing diverse inputs together for razor-sharp reasoning.

Proof in Numbers: The Might of Multimodal AI

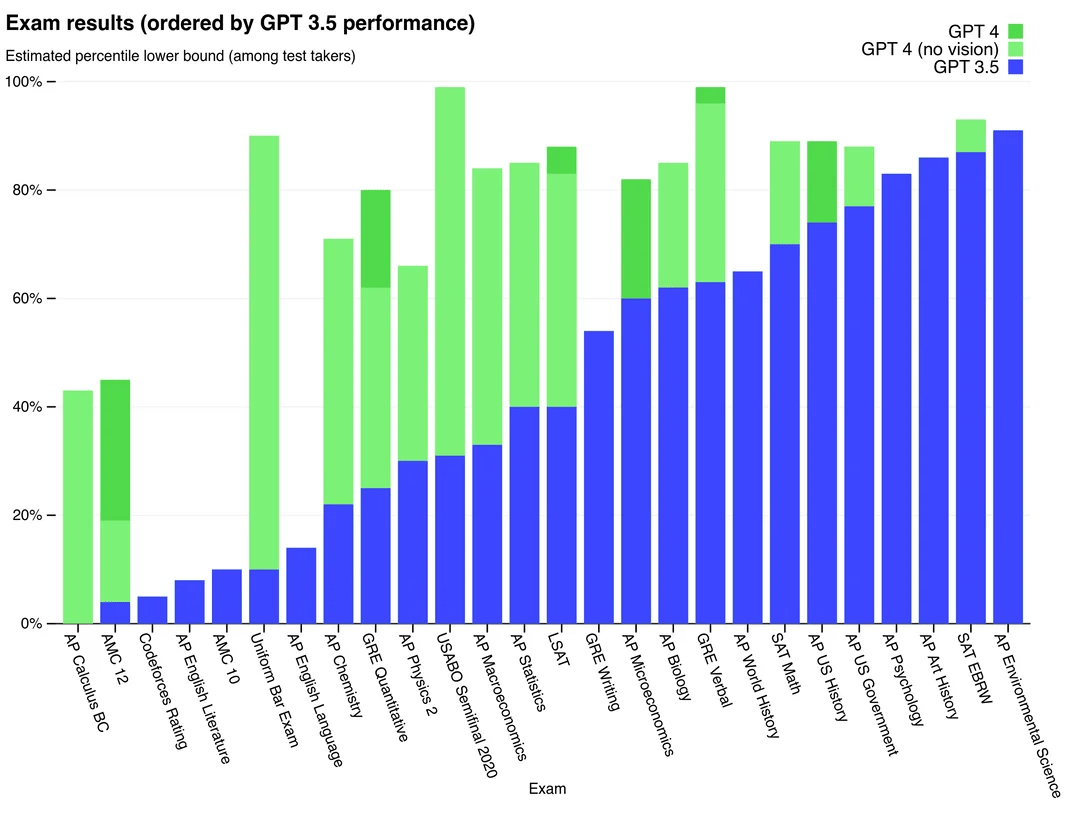

But don’t just take our word for it. Here is how GPT-4 with vision performs significantly better than GPT-3.5 and GPT-4 because of its ability to process information from various sources.

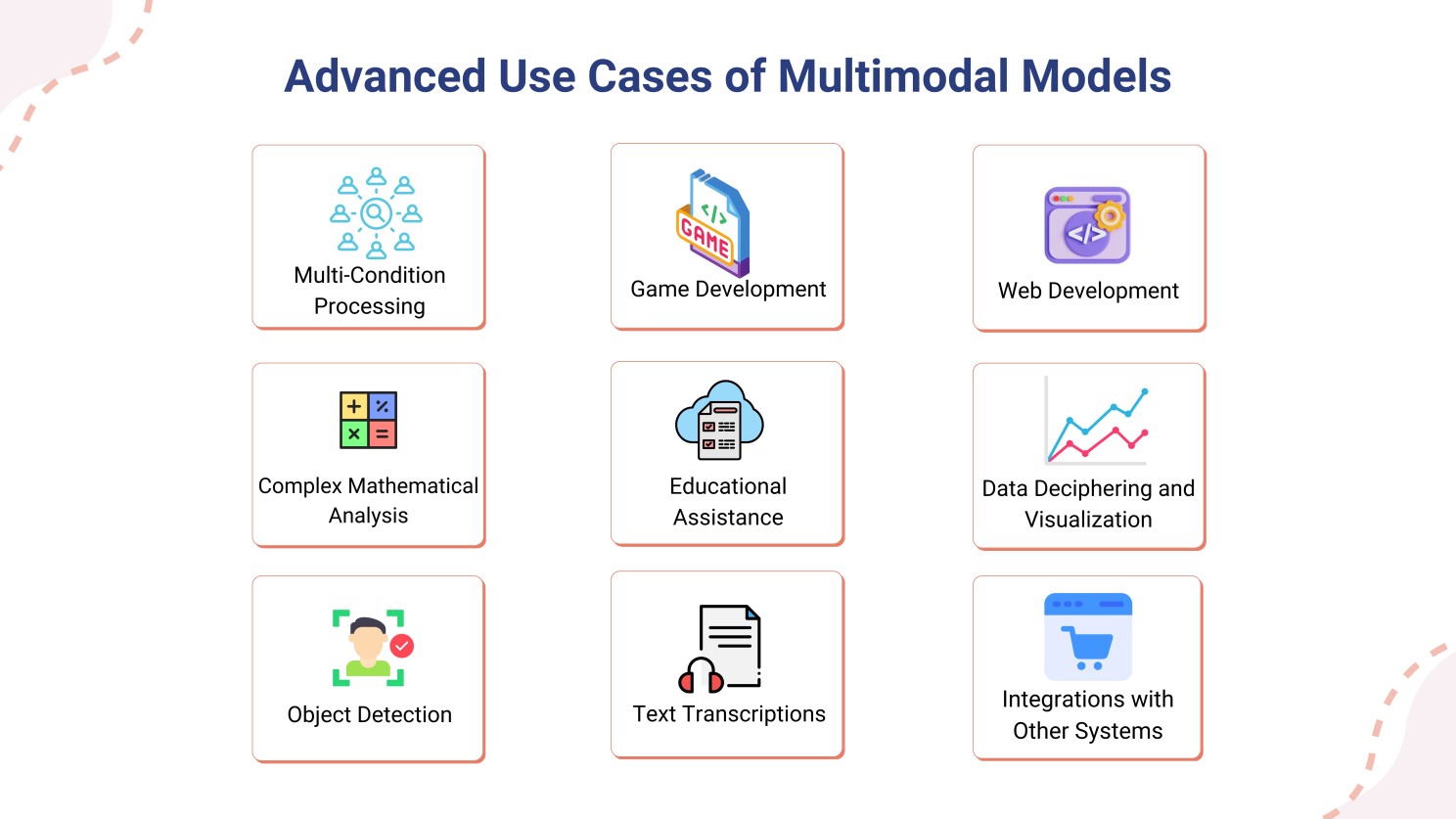

Advanced Use Cases of Multimodal Models

Quite obviously, the use cases that multimodal AI models will bring are vast. Here are some important ones:

Read: Exploring GPT-4 Vision’s Advanced Use-Cases

Latest Rival to GPT-4V – Cue Gemini

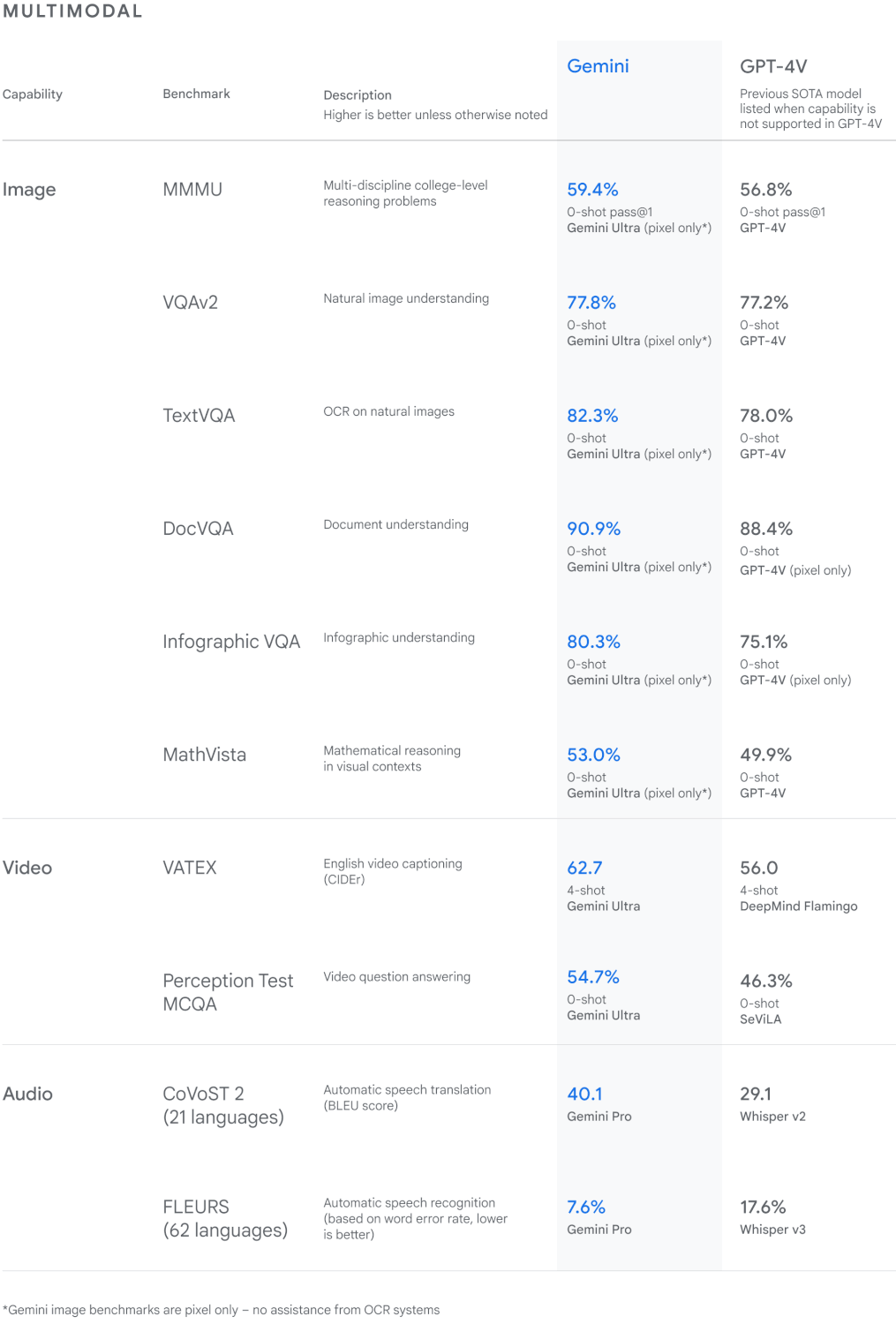

The long wait for Gemini has finally come to an end, and the excitement is understandable. Google’s most capable multimodal model has beaten OpenAI’s seemingly unbeatable GPT-4V in multimodality, giving OpenAI a tough time for sure. Here’s a comparison of Gemini with GPT-4V:

Read: What sets Gemini AI apart from GPT-4V?

Are Multimodal AI Models Taking Us Towards the Promised Neverland of Artificial General Intelligence (AGI)?

Well, yes! In a recent paper, Microsoft Research describes how GPT-4V shows sparks of AGI: it exhibits common-sense grounding, the very thing that sets humans apart, which allows these models not only to reason but also to solve problems in novel situations, plan, and more.

Read: The Sparks of Artificial General Intelligence in GPT-4

Want to learn more about AI? Our blog is the go-to source for the latest tech news.

The Paradox of Open-Sourcing AI

With AI becoming so powerful, we are surrounded by a paradoxical situation:

- LLMs should be open-sourced so that such a huge power is not in the hands of a few big tech companies.

- LLMs should not be open-sourced as such a powerful technology should be protected and regulated extensively.

Watch this important talk, where experts in the field including Yann LeCun, Sebastien Bubeck, and Brian Greene discuss artificial intelligence and the potential risks and benefits it poses to humanity. They also argue that big tech companies controlling AI is a bigger risk than AI itself taking over.

Read: Should Large Language Models be Open-Sourced?

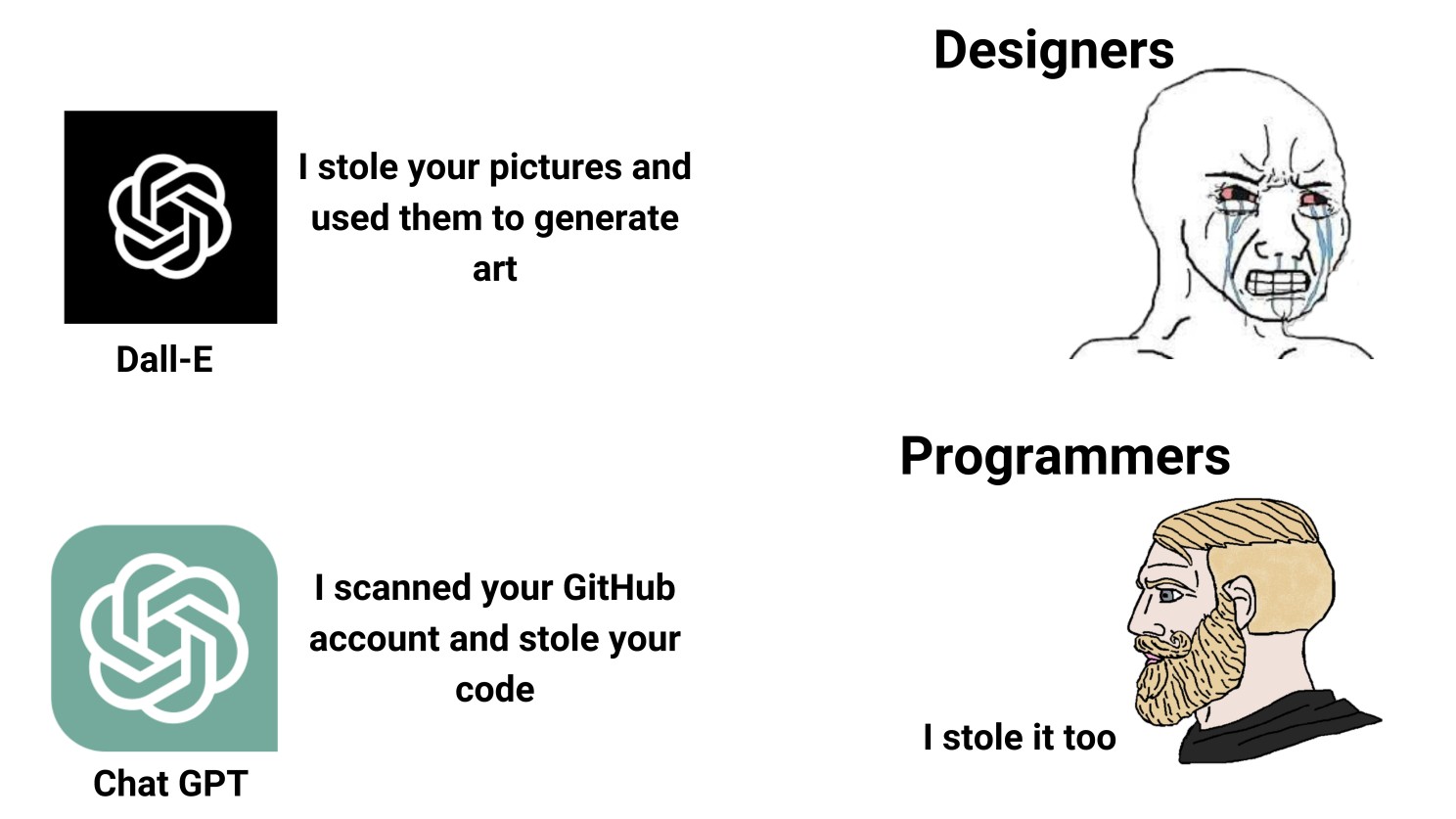

No offense but it is what it is 😂.

It’s time to level up your AI game. Here are some important live sessions and tutorials featuring renowned experts in the field of generative AI.

Explore these live sessions here and book yourself a slot for the one you are anticipating the most.

If you love networking and miss having physical interaction with professionals, explore these conferences and events happening in North America. Join the one that excites you the most.

Explore: Top 8 AI conferences in North America in 2023 and 2024

Finally, let’s end the day with some interesting updates on what’s happening in the AI-verse:

- Google releases Gemini 1.0, its most capable model, which beats GPT-4V. Read more

- Amazon introduced Q, an AI-powered assistant that enables employees to query documents and corporate systems. Read more

- AI tool GNoME finds 2.2 million new crystals, including 380,000 stable materials that could power future technologies. Read more

- Siemens and Microsoft have initiated a pilot program using a GPT-powered model to control manufacturing machinery. Read more

- OpenAI signs a non-binding letter of intent to invest $51 million in AI chips from a startup called Rain AI backed by Sam Altman. Read more