Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”. In this week’s dispatch, we’ll decode the generative AI advancements of 2023.

We remember different years for different events, and some years always stand out given how they changed us as a community.

Take the year 2020, for example. Who doesn’t remember the masks, the excessive use of sanitizers, working from home, virtual meetings, and whatnot? We think of it because it changed how we interacted with society. We remember it because it established a new normal for us: remote or hybrid work.

Similarly, 2023 was a landmark year for AI. Can you imagine working without ChatGPT open in the very next tab? Hard to picture, isn’t it?

Although the generative AI surge started modestly, it enjoyed tremendous success throughout the year.

- Substantial investments, amounting to $27 billion this year, have been directed towards startups building the architecture of this technology

- Improved foundation models with more parameters and better performance were released

- Progress from language models to multimodal models that can read, watch, and listen

- AI-assisted discoveries of new materials, potential treatments for diseases, progress on long-unsolved math problems, and the deciphering of ancient scrolls that had long remained unreadable

Given how quickly this technology is being adopted worldwide, generative AI is estimated to boost corporate profits by as much as $4 trillion annually.

We know this is huge! So let’s take a moment to rewind and revisit some of the milestones that set the path for generative AI in 2023.

Key Generative AI Advancements that Set the Direction

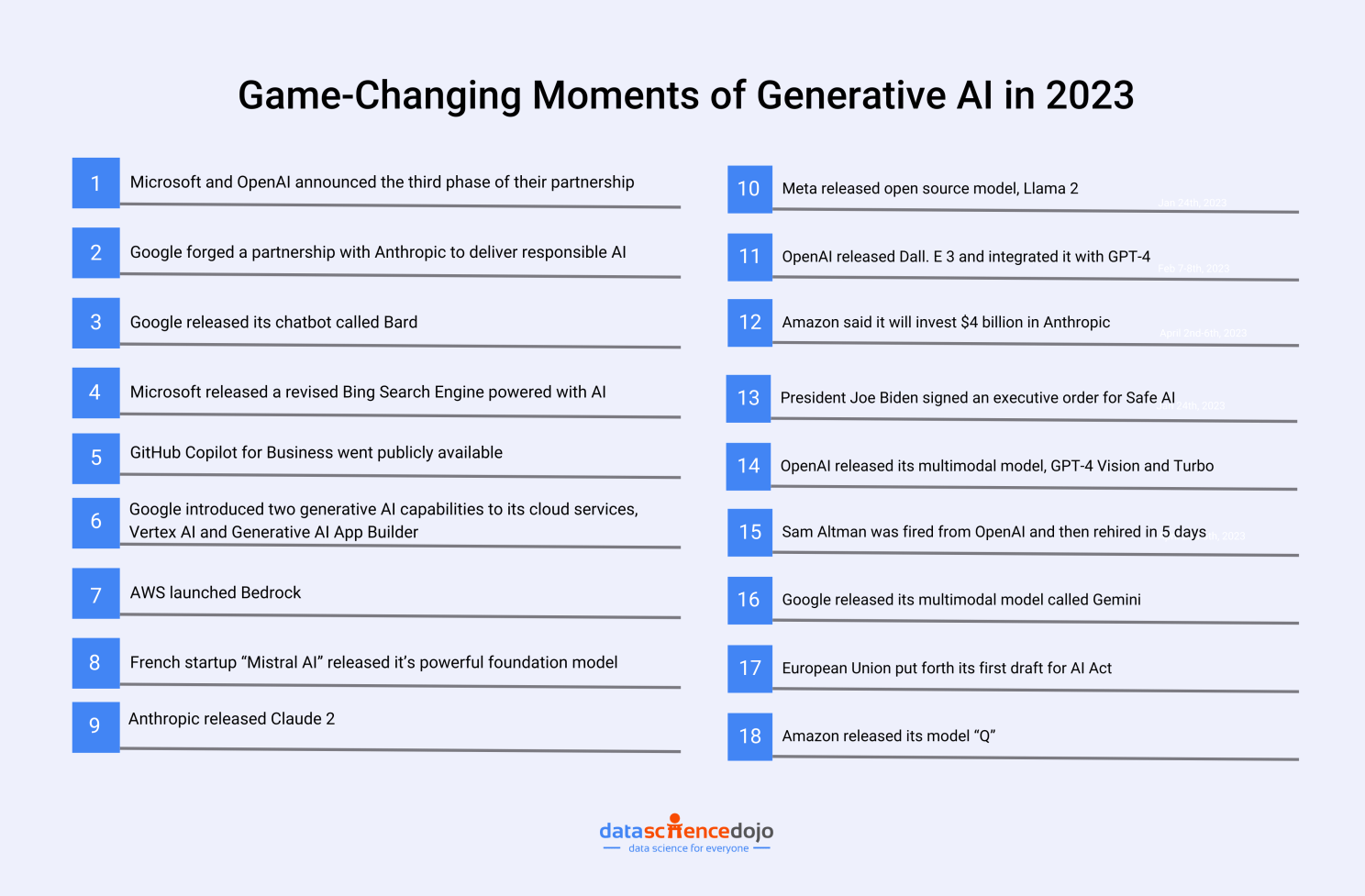

Throughout 2023, generative AI made striking progress globally as key players, including Amazon, Google, Microsoft, OpenAI, and Anthropic, competed fiercely and released newer and better AI models at a rapid pace. These releases, in turn, drove substantial advances in AI applications.

Here is a timeline of some major events that took place in 2023:

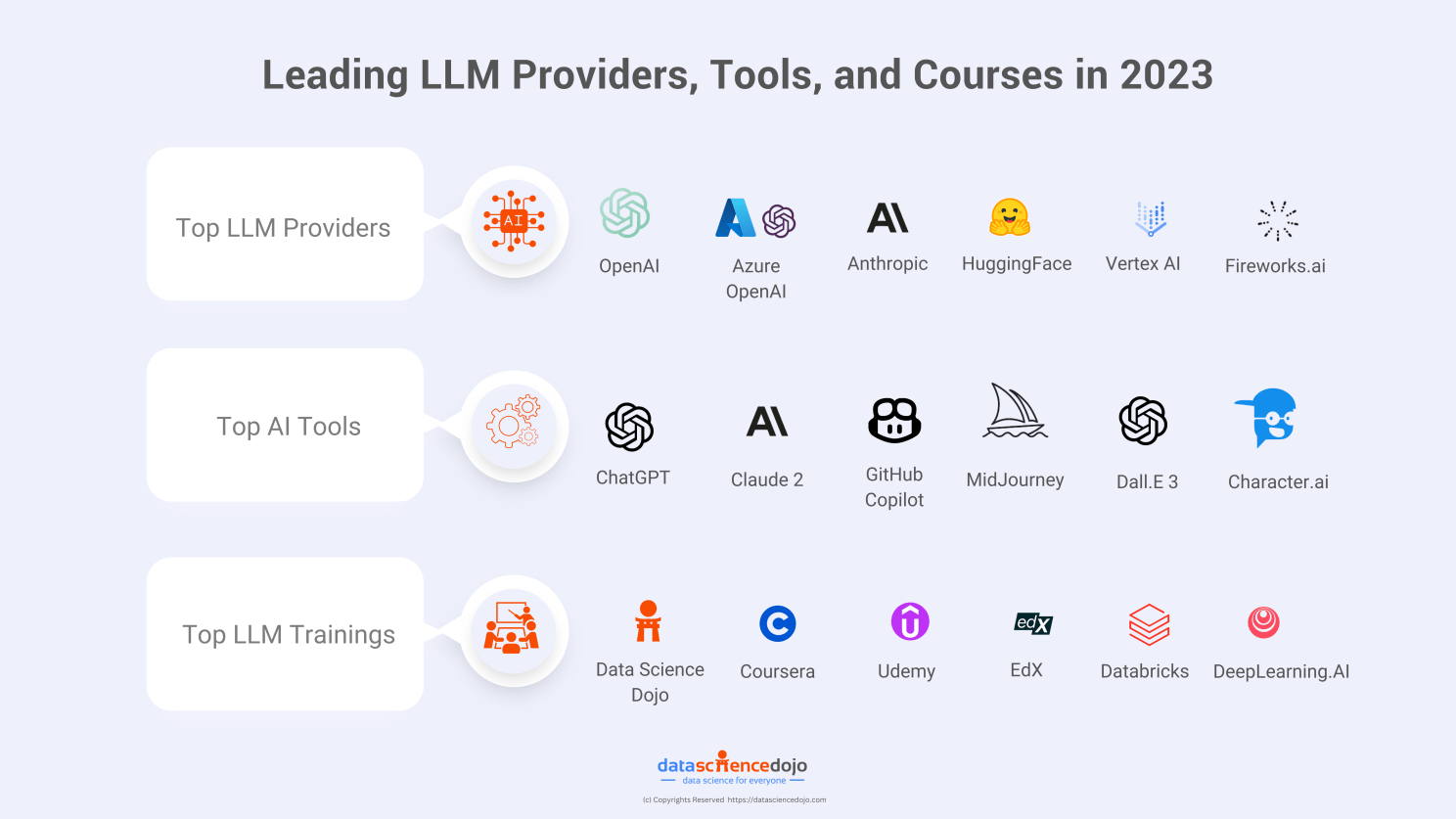

Leading LLM Providers, Tools, and Training Programs in 2023

Several remarkable LLM-powered applications emerged, such as Claude 2 and Character.ai. The market also saw the introduction of top-notch image-generation tools like Midjourney and DALL·E 3. Below are some of the tools, technologies, and training programs that topped the charts.

Pro-Tip: Join Data Science Dojo’s Large Language Models Bootcamp to master building custom LLM-powered applications

Vision for Generative AI in 2024: How Big Are We Aiming?

While 2023 brought huge progress, several areas still call for improvement. Here are the most crucial ones:

1. Inclusivity in AI through Multilingualism and Multimodality:

We’re about to see some big moves in AI multilingualism. The plan? Closing language gaps worldwide. Picture this: upgraded multilingual models, fueled by diverse research and global input, making AI useful to people in every language.

We are also likely to see models that can process multiple sensory inputs simultaneously, much as humans do. This means moving away from isolated subfields dedicated to language, computer vision, and audio, and toward multimodal models. Such models could communicate more effectively and in a more human-like manner, making them more accessible.

2. AI Transparency:

To make AI models more transparent, future efforts will need to focus on robust evaluation standards, transparency indices, and democratic decision-making processes. Robust evaluation standards will be especially important, since they help us understand both the capabilities of AI models and the risks they pose.

Expect more collaboration with industry to develop standard safety evaluations. This won’t be easy, however, given the general, open-ended nature of AI systems and their vast range of use cases and potential harms; it will take a collective effort from the entire research community.

Building on foundational research such as Stanford’s Holistic Evaluation of Language Models (HELM) will be paramount.

3. Smaller and Smarter AI Models:

Given current trends in AI development, the future is likely to bring smaller yet smarter AI models. This would mean creating models that learn more from less data (much like the remarkable learning abilities of a human child) and that make better use of experience and novelty during learning. The implications go beyond efficiency: these smaller, smarter models could broaden AI’s reach, making it accessible to regions grappling with resource limitations such as scarce data, electricity, or computing equipment.

The Transformative Potential of Generative AI

Andrew Ng, a renowned AI pioneer, recently shared his vision for the transformative potential of generative AI in a thought-provoking talk. The discussion, rich in technical depth and practical insight, cuts through the hype surrounding this emerging technology to reveal its true capabilities and implications for the future.

For a deeper dive into generative AI, visit our YouTube channel for tutorials and insights.

New Year’s Resolution: Start 2024 by Learning LLMs

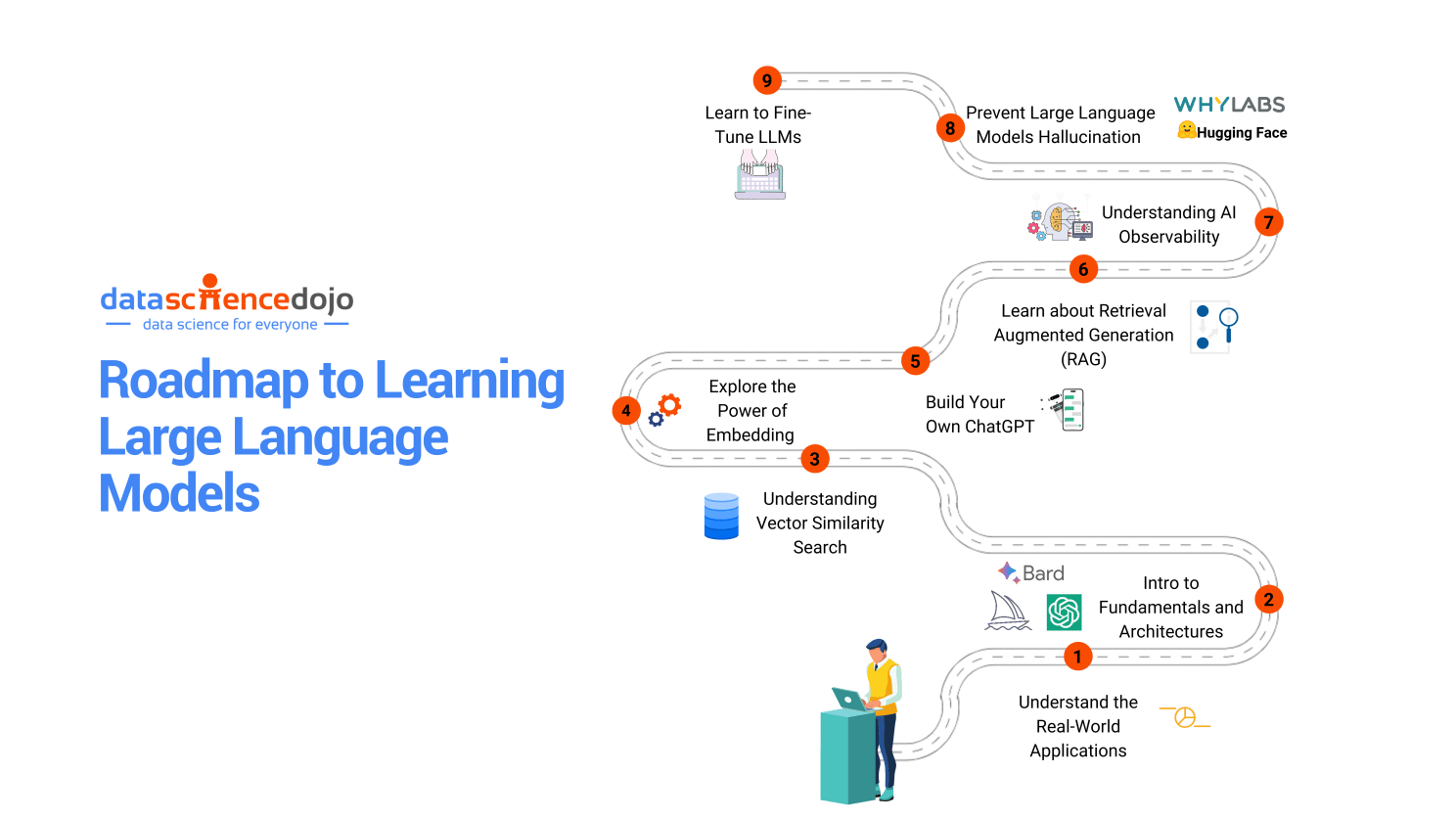

2023 left no doubt about the power of generative AI. It’s high time we learned about LLMs and reaped the productivity benefits.

Here’s a roadmap that will help you get on the right track to learn about LLMs.

Read: Roadmap to Learning Large Language Models

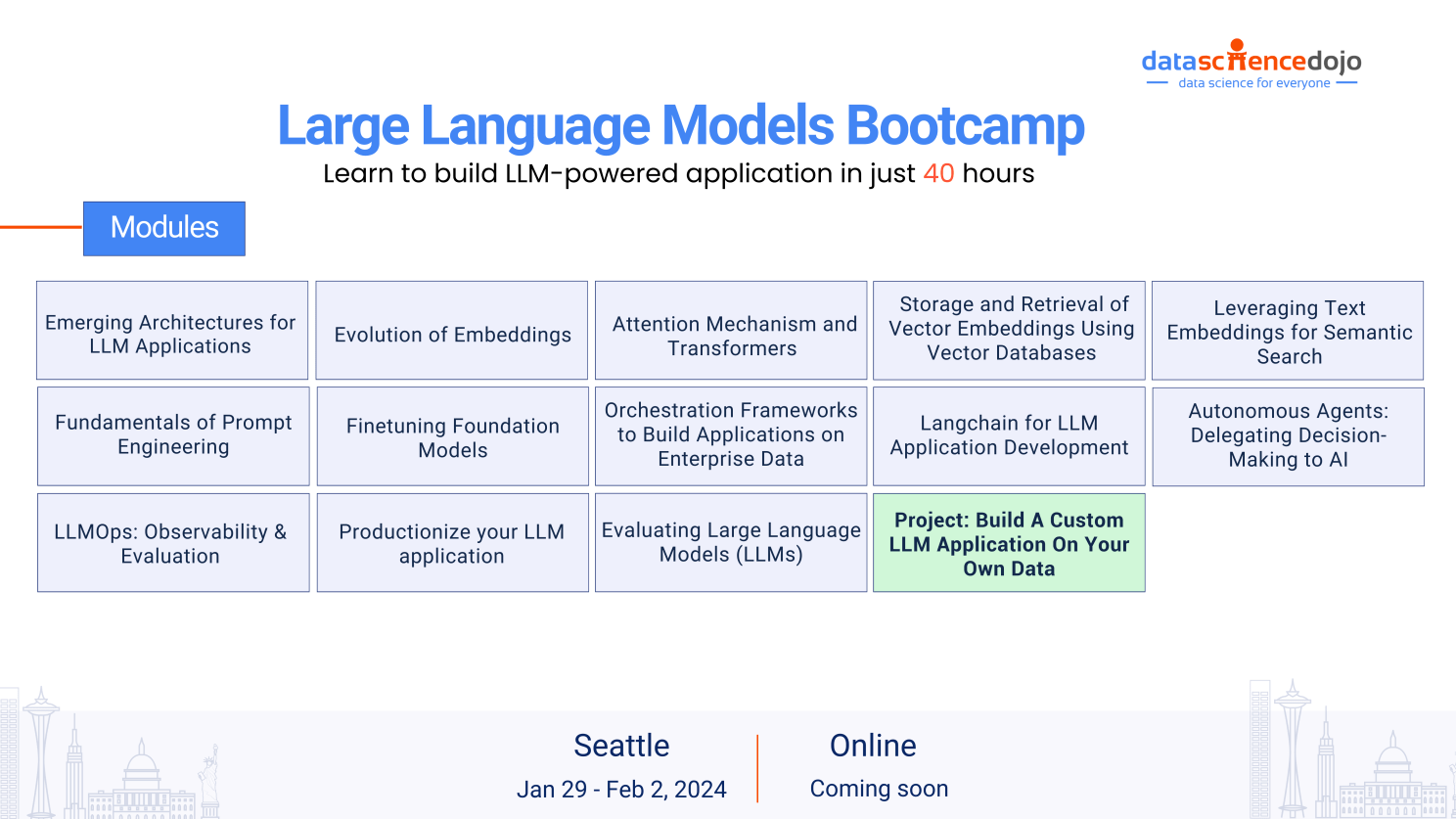

However, if you want expert mentorship to master LLMs, consider enrolling in a comprehensive bootcamp.

Join Data Science Dojo’s Large Language Models Bootcamp – an extensive training that will enable you to build custom LLM-powered applications from scratch.

If you have any questions about the bootcamp, you can book a meeting with our advisors!