Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”.

Ever heard the phrase ‘Jack of all trades, master of none’? Off-the-shelf models like Bard, ChatGPT, and Claude are true generalists. They can handle everything from summarization to data analysis.

But can they ace domain-specific questions? What would they answer if you asked them for a SWOT analysis of opening a new Starbucks outlet on your street? Trustworthy answers need domain-specific fine-tuning.

For finance, medicine, education, and beyond, fine-tuning tailors these models to tackle your specific tasks the way you want. You can even train them on your company’s data for specialized roles.

Hang on tight as we answer important questions like what fine-tuning is and how to fine-tune foundation models.

Let’s start with the hottest news of the week:

1. Universal Music Sues Anthropic for Widespread Infringement: Amazon-backed AI startup Anthropic is facing a heavyweight legal battle after being sued by major music publishers. The publishers accuse Anthropic of systematic and widespread copyright infringement, asserting that its chatbot Claude has been spitting out unauthorized copies of copyrighted song lyrics. Read more

2. China’s Latest AI Model Ernie 4.0 Takes on GPT-4: Chinese tech giant Baidu unveiled Ernie 4.0, its newest AI model, claiming it matches up to OpenAI’s GPT-4. Baidu plans to use Ernie’s powers in more of its products, like Baidu Drive and Baidu Maps, making them easier to use with everyday language. Read more

3. How Microsoft is Trying to Lessen Its Addiction to OpenAI as AI Costs Soar: Microsoft’s AI strategy heavily leaned on OpenAI, but rising costs prompted a plan B. Peter Lee, overseeing 1,500 researchers, tasked them with creating more cost-effective conversational AI. Microsoft aims to incorporate this in products, like Bing’s chatbot, to reduce costs and its reliance on OpenAI. Read more

What Is Fine-Tuning of Large Language Models?

Fine-tuning LLMs is like customizing a tool to suit your needs perfectly. Instead of creating everything from scratch, we take a powerful language model like GPT-3.5 Turbo or Llama 2 and tweak it to do exactly what we want. Read more

For example, in the medical field, we can train it on medical research, patient records, and clinical guidelines, turning it into an expert that provides accurate and up-to-date medical information. And the best part? These tailored LLMs can handle different tasks, like summarizing text or analyzing sentiment.

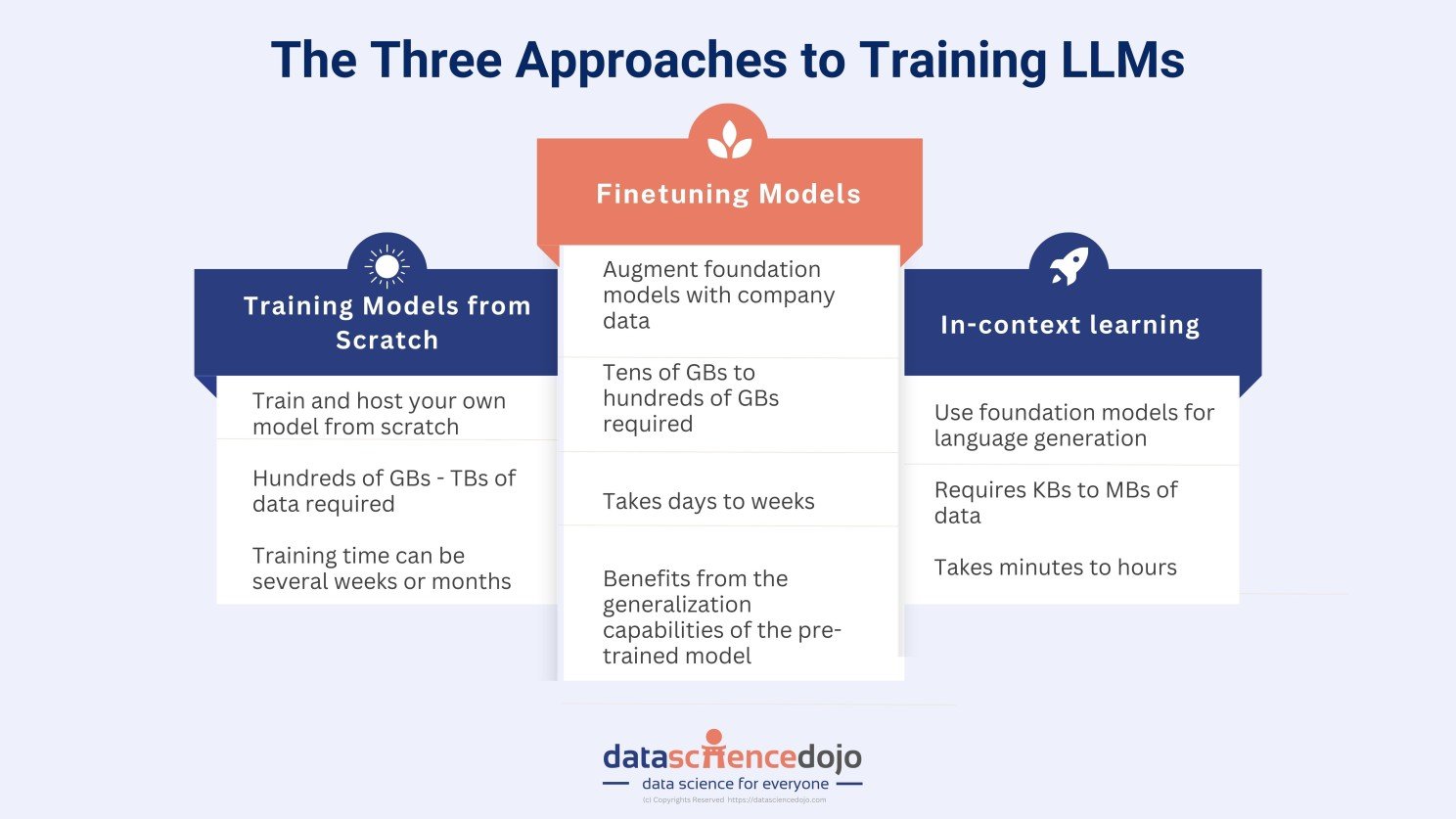

Here’s the key difference between pre-trained models, fine-tuned models, and in-context learning, a topic we’ll discuss in a future issue.

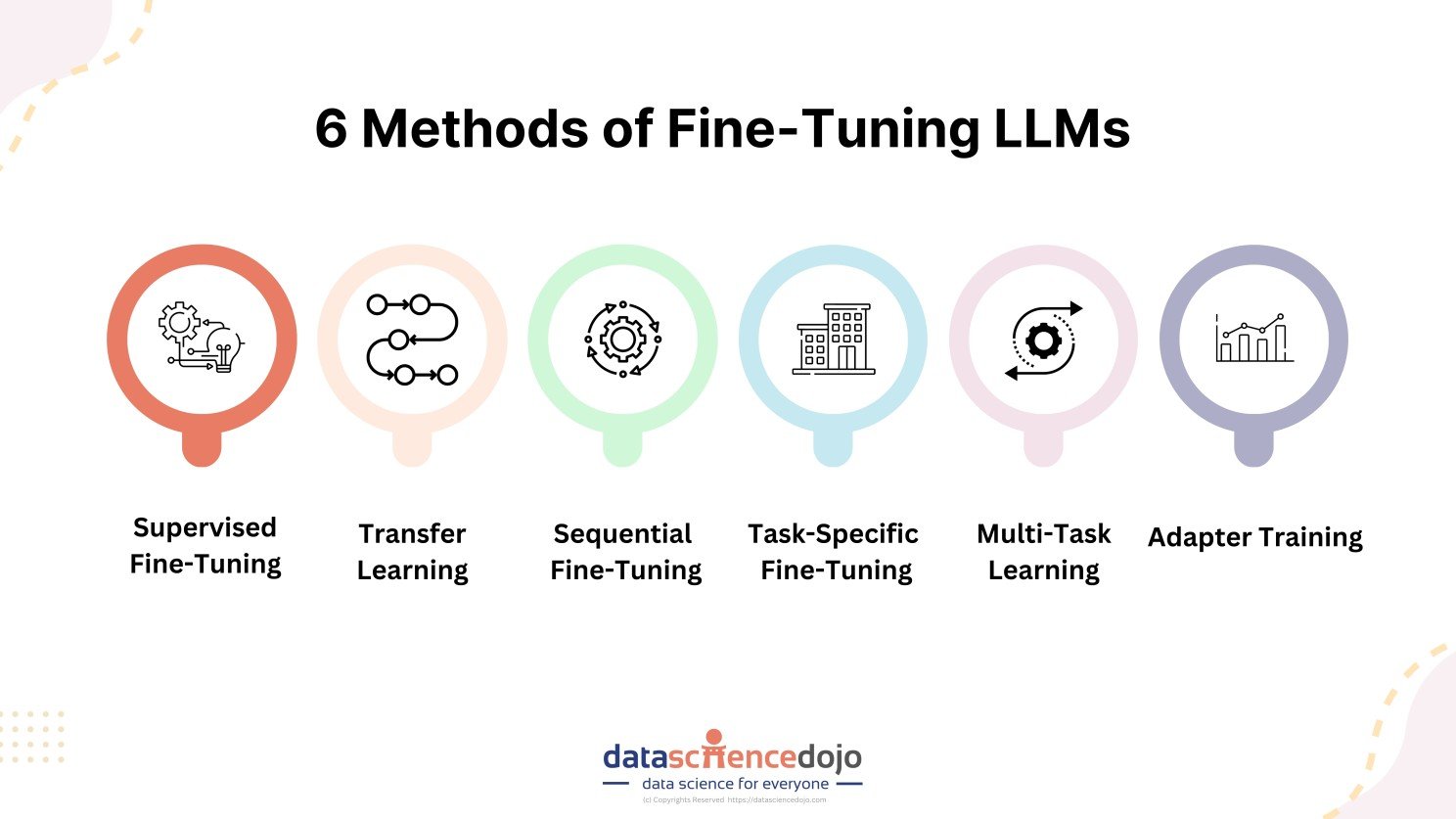

Methods of Fine-Tuning LLMs

There are different ways to enhance the power of LLMs. These techniques let users adjust a model’s weights (its learned parameters) so that it excels at a particular task. Read more on different methods to fine-tune LLMs.

Start Leveraging Fine-Tuning

The good news is that fine-tuning a model is not as difficult as pretraining an LLM. It takes less time, costs less, and requires less data. Here are some step-by-step tutorials you can follow to fine-tune different foundation models.

- Revolutionize LLMs with Llama 2 Fine tuning with LoRA

- Fine-Tuning LLaMA 2: A Step-by-Step Guide

- Fine Tuning OpenAI’s GPT 3.5 Turbo
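
To make the cost argument concrete, here is a minimal sketch of why LoRA, the technique named in the first tutorial, trains far fewer parameters than updating every weight. LoRA freezes a d × k weight matrix and learns two small matrices of shapes d × r and r × k instead. The function names, the 4096 × 4096 layer size, and the rank of 8 are illustrative assumptions, not figures taken from the tutorials.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update.

    LoRA keeps the original matrix frozen and learns B (d x r) and A (r x k),
    so the update B @ A has the same d x k shape as the frozen weights.
    """
    return r * (d + k)


if __name__ == "__main__":
    d, k, r = 4096, 4096, 8  # assumed layer size and rank, for illustration only
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full fine-tuning: {full:,} trainable parameters")
    print(f"LoRA (rank {r}):   {lora:,} trainable parameters")
    print(f"reduction factor: {full / lora:.0f}x")
```

For this assumed layer, the trainable parameter count drops from about 16.8 million to about 65 thousand. Real savings depend on which layers you adapt and the rank you choose.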

Crash Course on Fine-Tuning GPT 3.5 Turbo

Kickstart using GPT-3.5 Turbo to solve real-world problems! We recommend this comprehensive crash course by Syed Hyder Ali Zaidi. It’s a great resource for learning hands-on fine-tuning.

We trust that this tutorial will set the pace for you to fine-tune LLMs for your particular use cases.
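
Before you fine-tune a chat model such as GPT-3.5 Turbo, your training data has to be in the expected layout. The sketch below assumes the chat-style JSON Lines format, where each line is one JSON object holding a `messages` list of system, user, and assistant turns. The helper names and the sample questions and answers are hypothetical, so check the provider’s current documentation before uploading anything.

```python
import json


def make_example(system: str, user: str, assistant: str) -> dict:
    """Build one training example in the chat 'messages' layout."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)


# Hypothetical domain-specific examples; real data would come from your own sources.
SYSTEM_PROMPT = "You are a retail strategy assistant."
examples = [
    make_example(
        SYSTEM_PROMPT,
        "Name one strength of a coffee shop next to a university campus.",
        "Steady foot traffic from students and staff during term time.",
    ),
    make_example(
        SYSTEM_PROMPT,
        "Name one threat to a coffee shop next to a university campus.",
        "Sales may drop sharply during semester breaks.",
    ),
]

jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

Saving `jsonl_text` to a file gives you a dataset you can validate and upload by following the crash course. In practice you would want many more examples than the two shown here.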

While GPT-3.5 Turbo is known for being user-friendly, Llama 2 shines in data security and in having more up-to-date information.

Join Zaid Ahmed’s live session on fine-tuning Llama 2 using RunPod and level up your LLM expertise. Secure your slot now!

To connect with LLM and data science professionals, join our Discord server now!

Time to fall in love with AI again! Here is DALL·E’s recreation of Leonardo da Vinci’s famous painting, “The Last Supper,” in the style of Frida Kahlo.

The world is changing. The AI-led revolution is comparable in scale to the change the internet brought, so we need to embrace it before we get left behind. We understand, however, that the field is new and its resources are scattered.

Attending live conferences and events can help. They let us catch up on emerging tools and technologies and meet the people who are actively driving the change.

Here are some AI conferences and events happening in the US and online.

Explore more: Top 9 AI Conferences and Events in USA – 2023.

What’s the Way Forward to Embracing LLMs?

Enterprises that adopt large language models stand to gain in productivity. Read: The Rise of LLM-Powered Enterprises

Fine-tuning is just one way to make LLMs more efficient and to adapt them to enterprise data.

However, many other components also make LLMs more usable, such as orchestration frameworks, retrieval-augmented generation, and more!

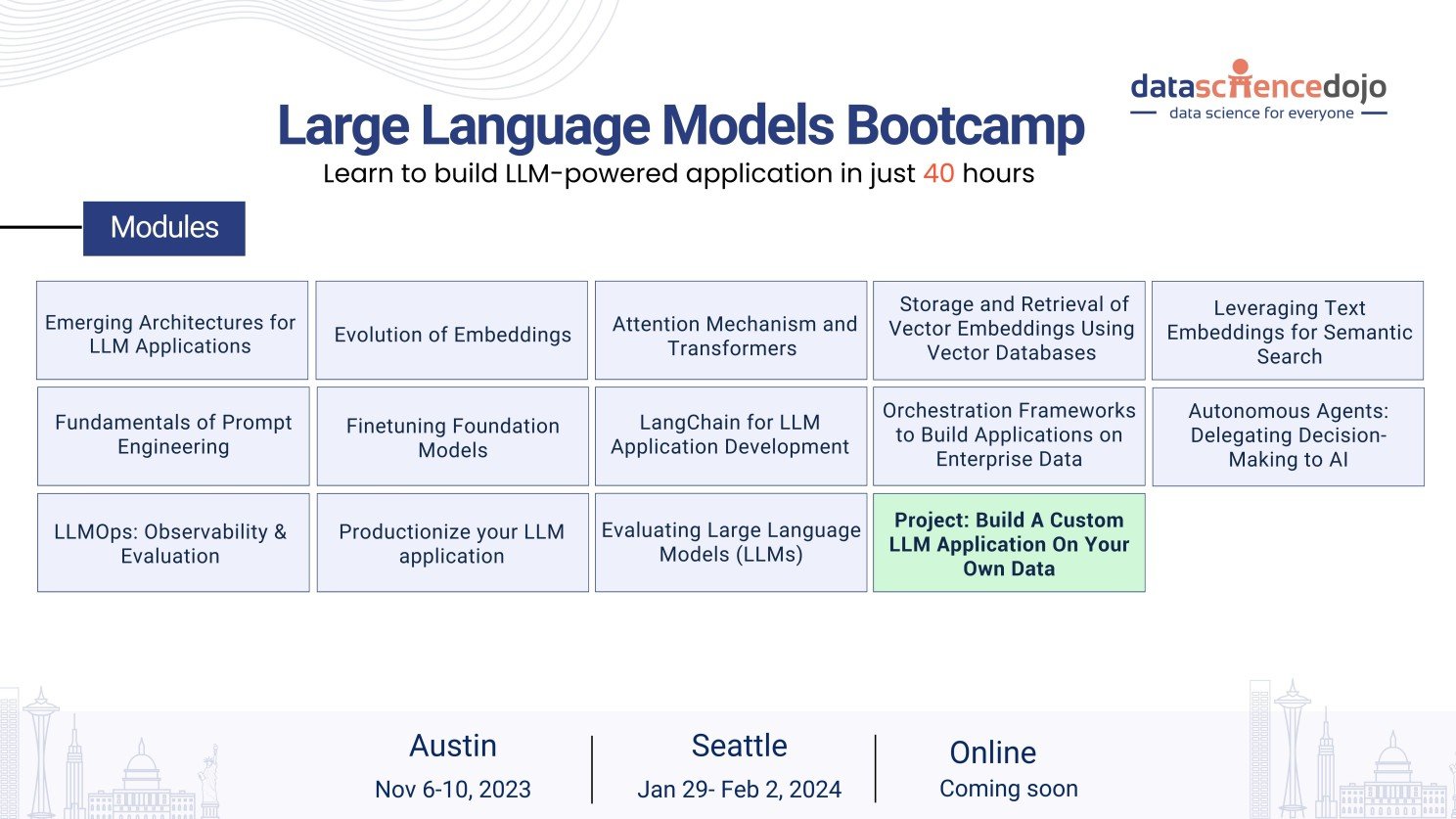

If you want to learn it all in one go, attend Data Science Dojo’s Large Language Model Bootcamp. It’s an intensive 40-hour training that teaches you to build custom LLM applications.

If you have any questions about the bootcamp, you can book a meeting with our advisors!