With the introduction of LLaMA v1, we witnessed a surge in customized models like Alpaca, Vicuna, and WizardLM. This surge motivated various businesses to launch their own foundational models, such as OpenLLaMA, Falcon, and XGen, with licenses suitable for commercial purposes. LLaMA 2, the latest release, now combines the strengths of both approaches, offering an efficient foundational model with a more permissive license.

In the first half of 2023, the software landscape underwent a significant transformation with the widespread adoption of APIs like OpenAI API to build infrastructures based on Large Language Models (LLMs). Libraries like LangChain and LlamaIndex played crucial roles in this evolution.

As we move into the latter part of the year, fine-tuning or instruction tuning of these models is becoming standard practice in the LLMOps workflow. This trend is motivated by several factors, including

- Potential cost savings

- The capacity to handle sensitive data

- The opportunity to develop models that can outperform well-known models like ChatGPT and GPT-4 in specific tasks.

Fine-Tuning:

Fine-tuning methods refer to various techniques used to enhance the performance of a pre-trained model by adapting it to a specific task or domain. These methods are valuable for optimizing a model’s weights and parameters to excel in the target task. Here are different fine-tuning methods:

- Supervised Fine-Tuning: This method involves further training a pre-trained language model (LLM) on a specific downstream task using labeled data. The model’s parameters are updated to excel in this task, such as text classification, named entity recognition, or sentiment analysis.

- Transfer Learning: Transfer learning involves repurposing a pre-trained model’s architecture and weights for a new task or domain. Typically, the model is initially trained on a broad dataset and is then fine-tuned to adapt to specific tasks or domains, making it an efficient approach.

- Sequential Fine-tuning: Sequential fine-tuning entails the gradual adaptation of a pre-trained model on multiple related tasks or domains in succession. This sequential learning helps the model capture intricate language patterns across various tasks, leading to improved generalization and performance.

- Task-specific Fine-tuning: Task-specific fine-tuning is a method where the pre-trained model undergoes further training on a dedicated dataset for a particular task or domain. While it demands more data and time than transfer learning, it can yield higher performance tailored to the specific task.

- Multi-task Learning: Multi-task learning involves fine-tuning the pre-trained model on several tasks simultaneously. This strategy enables the model to learn and leverage common features and representations across different tasks, ultimately enhancing its ability to generalize and perform well.

- Adapter Training: Adapter training entails training lightweight modules that are integrated into the pre-trained model. These adapters allow for fine-tuning on specific tasks without interfering with the original model’s performance on other tasks. This approach maintains efficiency while adapting to task-specific requirements.

Why Fine-Tune LLM?

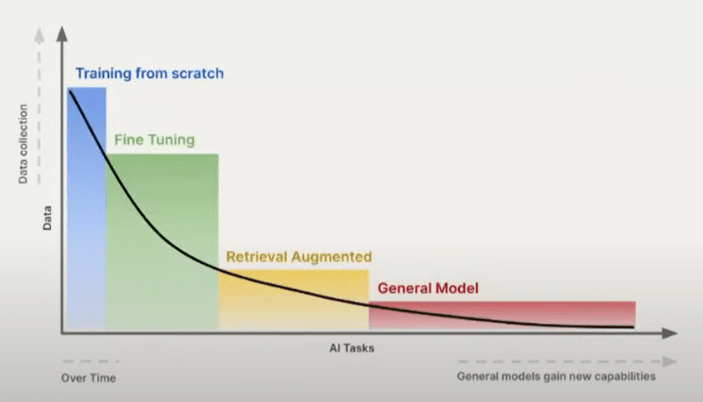

Source: DeepLearningAI

The figure discusses the allocation of AI tasks within organizations, taking into account the amount of available data. On the left side of the spectrum, having a substantial amount of data allows organizations to train their own models from scratch, albeit at a high cost.

Alternatively, if an organization possesses a moderate amount of data, it can fine-tune pre-existing models to achieve excellent performance. For those with limited data, the recommended approach is in-context learning, specifically through techniques like retrieval augmented generation using general models.

However, our focus will be on the fine-tuning aspect, as it offers a favorable balance between accuracy, performance, and speed compared to larger, more general models.

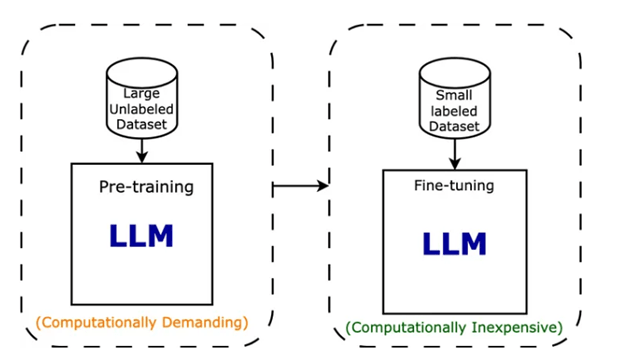

Source: Intuitive Tutorials

Why LLaMA 2 Fine Tuning?

Before we dive into the detailed guide, let’s take a quick look at the benefits of Llama 2.

Read more about Palm 2 vs Llama 2 in this blog

- Diverse range: Llama 2 comes in various sizes, from 7 billion to a massive 70 billion parameters. It shares a similar architecture with Llama 1 but boasts improved capabilities.

- Extensive training ata: This model has been trained on a massive dataset of 2 trillion tokens, demonstrating its vast exposure to a wide range of information.

- Enhanced context: With an extended context length of 4,000 tokens, the model can better understand and generate extensive content.

- Grouped query attention (GQA): GQA has been introduced to enhance inference scalability, making attention calculations faster by storing previous token pair information.

- Performance excellence: Llama 2 models consistently outperform their predecessors, particularly the Llama 2 70B version. They excel in various benchmarks, competing strongly with models like Llama 1 65B and even Falcon models.

- Open source vs. closed source LLMs: When compared to models like GPT-3.5 or PaLM (540B), Llama 2 70B demonstrates impressive performance. While there may be a slight gap in certain benchmarks when compared to GPT-4 and PaLM-2, the model’s potential is evident.

Parameter Efficient Fine-Tuning (PEFT)

Parameter Efficient Fine-Tuning involves adapting pre-trained models to new tasks while making minimal changes to the model’s parameters. This is especially important for large neural network models like BERT, GPT, and similar ones. Let’s delve into why PEFT is so significant:

- Reduced overfitting: Limited datasets can be problematic. Making too many parameter adjustments can lead to model overfitting. PEFT allows us to strike a balance between the model’s flexibility and tailoring it to new tasks.

- Faster training: Making fewer parameter changes results in fewer computations, which in turn leads to faster training sessions.

- Resource efficiency: Training deep neural networks requires substantial computational resources. PEFT minimizes the computational and memory demands, making it more practical to deploy in resource-constrained environments.

- Knowledge preservation: Extensive pretraining on diverse datasets equips models with valuable general knowledge. PEFT ensures that this wealth of knowledge is retained when adapting the model to new tasks.

PEFT Technique

The most popular PEFT technique is LoRA. Let’s see what it offers:

-

LoRA

LoRA, or Low-Rank Adaptation, represents a groundbreaking advancement in the realm of large language models. At the beginning of the year, these models seemed accessible only to wealthy companies. However, LoRA has changed the landscape.

LoRA has made the use of large language models accessible to a wider audience. Its low-rank adaptation approach has significantly reduced the number of trainable parameters by up to 10,000 times. This results in:

- A threefold reduction in GPU requirements, which is typically a major bottleneck.

- Comparable, if not superior, performance even without fine-tuning the entire model.

In traditional fine-tuning, we modify the existing weights of a pre-trained model using new examples. Conventionally, this required a matrix of the same size. However, by employing creative methods and the concept of rank factorization, a matrix can be split into two smaller matrices. When multiplied together, they approximate the original matrix.

To illustrate, imagine a 1000×1000 matrix with 1,000,000 parameters. Through rank factorization, if the rank is, for instance, five, we could have two matrices, each sized 1000×5. When combined, they represent just 10,000 parameters, resulting in a significant reduction.

In recent days, researchers have introduced an extension of LoRA known as QLoRA.

-

QLoRA

QLoRA is an extension of LoRA that further introduces quantization to enhance parameter efficiency during fine-tuning. It builds on the principles of LoRA while introducing 4-bit NormalFloat (NF4) quantization and Double Quantization techniques.

Environment Setup

About Dataset

The dataset has undergone special processing to ensure a seamless match with Llama 2’s prompt format, making it ready for training without the need for additional modifications.

Since the data has already been adapted to Llama 2’s prompt format, it can be directly employed to tune the model for particular applications.

Configuring the Model and Tokenizer

We start by specifying the pre-trained Llama 2 model and prepare for an improved version called “llama-2-7b-enhanced”. We load the tokenizer and make slight adjustments to ensure compatibility with half-precision floating-point numbers (fp16) operations. Working with fp16 can offer various advantages, including reduced memory usage and faster model training. However, it’s important to note that not all operations work seamlessly with this lower precision format, and tokenization, a crucial step in preparing text data for model training, is one of them.

Next, we load the pre-trained Llama 2 model with our quantization configurations. We then deactivate caching and configure a pretraining temperature parameter.

In order to shrink the model’s size and boost inference speed, we employ 4-bit quantization provided by the BitsAndBytesConfig. Quantization involves representing the model’s weights in a way that consumes less memory.

The configuration mentioned here uses the ‘nf4’ type for quantization. You can experiment with different quantization types to explore potential performance variations.

Quantization Configuration

In the context of training a machine learning model using Low-Rank Adaptation (LoRA), several parameters play a significant role. Here’s a simplified explanation of each:

Parameters Specific to LoRA:

- Dropout Rate (lora_dropout): This parameter represents the probability that the output of each neuron is set to zero during training. It is used to prevent overfitting, which occurs when the model becomes too tailored to the training data.

- Rank (r): Rank measures how the original weight matrices are decomposed into simpler, smaller matrices. This decomposition reduces computational demands and memory usage. Lower ranks can make the model faster but may impact its performance. The original LoRA paper suggests starting with a rank of 8, but for QLoRA, a rank of 64 is recommended.

- Lora_alpha: This parameter controls the scaling of the low-rank approximation. It’s like finding the right balance between the original model and the low-rank approximation. Higher values can make the approximation more influential during the fine-tuning process, which can affect both performance and computational cost.

By adjusting these parameters, particularly lora_alpha and r, you can observe how the model’s performance and resource utilization change. This allows you to fine-tune the model for your specific task and find the optimal configuration.

You can find the code of this notebook here.

Conclusion

Fine-tuning LLaMA 2 unlocks its full potential, making it more efficient and adaptable for specific tasks. By leveraging techniques like Full Fine-Tuning, QLoRA, and PEFT, users can optimize model performance while balancing computational costs. Whether you’re enhancing language models for research, business applications, or AI-driven solutions, choosing the right fine-tuning approach is crucial.

As AI continues to evolve, efficient model customization will play a key role in advancing NLP capabilities. By understanding and implementing these fine-tuning techniques, data scientists and AI practitioners can harness the true power of LLaMA 2 for a wide range of real-world applications.

Revolutionize LLM with Llama 2 Fine-Tuning

With the introduction of LLaMA v1, we witnessed a surge in customized models like Alpaca, Vicuna, and WizardLM. This surge motivated various businesses to launch their own foundational models, such as OpenLLaMA, Falcon, and XGen, with licenses suitable for commercial purposes. LLaMA 2, the latest release, now combines the strengths of both approaches, offering an efficient foundational model with a more permissive license.

In the first half of 2023, the software landscape underwent a significant transformation with the widespread adoption of APIs like OpenAI API to build infrastructures based on Large Language Models (LLMs). Libraries like LangChain and LlamaIndex played crucial roles in this evolution.

As we move into the latter part of the year, fine-tuning or instruction tuning of these models is becoming a standard practice in the LLMOps workflow. This trend is motivated by several factors, including

- Potential cost savings

- The capacity to handle sensitive data

- The opportunity to develop models that can outperform well-known models like ChatGPT and GPT-4 in specific tasks.

Fine-Tuning:

Fine-tuning methods refer to various techniques used to enhance the performance of a pre-trained model by adapting it to a specific task or domain. These methods are valuable for optimizing a model’s weights and parameters to excel in the target task. Here are different fine-tuning methods:

- Supervised Fine-Tuning: This method involves further training a pre-trained language model (LLM) on a specific downstream task using labeled data. The model’s parameters are updated to excel in this task, such as text classification, named entity recognition, or sentiment analysis.

- Transfer Learning: Transfer learning involves repurposing a pre-trained model’s architecture and weights for a new task or domain. Typically, the model is initially trained on a broad dataset and is then fine-tuned to adapt to specific tasks or domains, making it an efficient approach.

- Sequential Fine-tuning: Sequential fine-tuning entails the gradual adaptation of a pre-trained model on multiple related tasks or domains in succession. This sequential learning helps the model capture intricate language patterns across various tasks, leading to improved generalization and performance.

- Task-specific Fine-tuning: Task-specific fine-tuning is a method where the pre-trained model undergoes further training on a dedicated dataset for a particular task or domain. While it demands more data and time than transfer learning, it can yield higher performance tailored to the specific task.

- Multi-task Learning: Multi-task learning involves fine-tuning the pre-trained model on several tasks simultaneously. This strategy enables the model to learn and leverage common features and representations across different tasks, ultimately enhancing its ability to generalize and perform well.

- Adapter Training: Adapter training entails training lightweight modules that are integrated into the pre-trained model. These adapters allow for fine-tuning on specific tasks without interfering with the original model’s performance on other tasks. This approach maintains efficiency while adapting to task-specific requirements.

Why fine-tune LLM?

Source: DeepLearningAI

The figure discusses the allocation of AI tasks within organizations, taking into account the amount of available data. On the left side of the spectrum, having a substantial amount of data allows organizations to train their own models from scratch, albeit at a high cost. Alternatively, if an organization possesses a moderate amount of data, it can fine-tune pre-existing models to achieve excellent performance. For those with limited data, the recommended approach is in-context learning, specifically through techniques like retrieval augmented generation using general models. However, our focus will be on the fine-tuning aspect, as it offers a favorable balance between accuracy, performance, and speed compared to larger, more general models.

Source: Intuitive Tutorials

Why LLaMA 2?

Before we dive into the detailed guide, let’s take a quick look at the benefits of Llama 2.

- Diverse Range: Llama 2 comes in various sizes, from 7 billion to a massive 70 billion parameters. It shares a similar architecture with Llama 1 but boasts improved capabilities.

- Extensive Training Data: This model has been trained on a massive dataset of 2 trillion tokens, demonstrating its vast exposure to a wide range of information.

- Enhanced Context: With an extended context length of 4,000 tokens, the model can better understand and generate extensive content.

- Grouped Query Attention (GQA): GQA has been introduced to enhance inference scalability, making attention calculations faster by storing previous token pair information.

- Performance Excellence: Llama 2 models consistently outperform their predecessors, particularly the Llama 2 70B version. They excel in various benchmarks, competing strongly with models like Llama 1 65B and even Falcon models.

- Open Source vs. Closed Source LLMs: When compared to models like GPT-3.5 or PaLM (540B), Llama 2 70B demonstrates impressive performance. While there may be a slight gap in certain benchmarks when compared to GPT-4 and PaLM-2, the model’s potential is evident.

Parameter Efficient Fine-Tuning (PEFT)

Parameter Efficient Fine-Tuning involves adapting pre-trained models to new tasks while making minimal changes to the model’s parameters. This is especially important for large neural network models like BERT, GPT, and similar ones. Let’s delve into why PEFT is so significant:

- Reduced Overfitting: Limited datasets can be problematic. Making too many parameter adjustments can lead to the model overfitting. PEFT allows us to strike a balance between the model’s flexibility and tailoring it to new tasks.

- Faster Training: Making fewer parameter changes results in fewer computations, which in turn leads to faster training sessions.

- Resource Efficiency: Training deep neural networks requires substantial computational resources. PEFT minimizes the computational and memory demands, making it more practical to deploy in resource-constrained environments.

- Knowledge Preservation: Extensive pretraining on diverse datasets equips models with valuable general knowledge. PEFT ensures that this wealth of knowledge is retained when adapting the model to new tasks.

PEFT Technique

The most popular PEFT technique is LoRA. Let’s see what it offers:

LoRA

LoRA, or Low Rank Adaptation, represents a groundbreaking advancement in the realm of large language models. At the beginning of the year, these models seemed accessible only to wealthy companies. However, LoRA has changed the landscape.

LoRA has made the use of large language models accessible to a wider audience. Its low-rank adaptation approach has significantly reduced the number of trainable parameters by up to 10,000 times. This results in:

- A threefold reduction in GPU requirements, which is typically a major bottleneck.

- Comparable, if not superior, performance even without fine-tuning the entire model.

In traditional fine-tuning, we modify the existing weights of a pre-trained model using new examples. Conventionally, this required a matrix of the same size. However, by employing creative methods and the concept of rank factorization, a matrix can be split into two smaller matrices. When multiplied together, they approximate the original matrix.

To illustrate, imagine a 1000×1000 matrix with 1,000,000 parameters. Through rank factorization, if the rank is, for instance, five, we could have two matrices, each sized 1000×5. When combined, they represent just 10,000 parameters, resulting in a significant reduction.

In recent days, researchers have introduced an extension of LoRA known as QLoRA.

QLoRA

QLoRA is an extension of LoRA that further introduces quantization to enhance parameter efficiency during fine-tuning. It builds on the principles of LoRA while introducing 4-bit NormalFloat (NF4) quantization and Double Quantization techniques.

Environment setup

!pip install -q torch peft==0.4.0 bitsandbytes==0.40.2 transformers==4.31.0 trl==0.4.7 accelerate

import torch

from datasets import load_dataset

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TrainingArguments, pipeline

from peft import LoraConfig

from trl import SFTTrainer

import os

About dataset

Source: HuggingFace

The dataset has undergone special processing to ensure a seamless match with Llama 2’s prompt format, making it ready for training without the need for additional modifications.

Since the data has already been adapted to Llama 2’s prompt format, it can be directly employed to tune the model for particular applications.

# Dataset

data_name = “m0hammadjaan/Dummy-NED-Positions” # Your dataset here

training_data = load_dataset(data_name, split=“train”)

Configuring the Model and Tokenizer

We start by specifying the pre-trained Llama 2 model and prepare for an improved version called “llama-2-7b-enhanced“. We load the tokenizer and make slight adjustments to ensure compatibility with half-precision floating-point numbers (fp16) operations. Working with fp16 can offer various advantages, including reduced memory usage and faster model training. However, it’s important to note that not all operations work seamlessly with this lower precision format, and tokenization, a crucial step in preparing text data for model training, is one of them.

Next, we load the pre-trained Llama 2 model with our quantization configurations. We then deactivate caching and configure a pretraining temperature parameter.

In order to shrink the model’s size and boost inference speed, we employ 4-bit quantization provided by the BitsAndBytesConfig. Quantization involves representing the model’s weights in a way that consumes less memory.

The configuration mentioned here uses the ‘nf4‘ type for quantization. You can experiment with different quantization types to explore potential performance variations.

# Model and tokenizer names

base_model_name = “NousResearch/Llama-2-7b-chat-hf”

refined_model = “llama-2-7b-enhanced”

# Tokenizer

llama_tokenizer = AutoTokenizer.from_pretrained(base_model_name, trust_remote_code=True)

llama_tokenizer.pad_token = llama_tokenizer.eos_token

llama_tokenizer.padding_side = “right” # Fix for fp16

# Quantization Config

quant_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type=“nf4”,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=False

)

# Model

base_model = AutoModelForCausalLM.from_pretrained(

base_model_name,

quantization_config=quant_config,

device_map = ‘auto’

)

base_model.config.use_cache = False

base_model.config.pretraining_tp = 1

Quantization Configuration

In the context of training a machine learning model using Low-Rank Adaptation (LoRA), several parameters play a significant role. Here’s a simplified explanation of each:

Parameters Specific to LoRA:

- Dropout Rate (lora_dropout): This parameter represents the probability that the output of each neuron is set to zero during training. It is used to prevent overfitting, which occurs when the model becomes too tailored to the training data.

- Rank (r): Rank measures how the original weight matrices are decomposed into simpler, smaller matrices. This decomposition reduces computational demands and memory usage. Lower ranks can make the model faster but may impact its performance. The original LoRA paper suggests starting with a rank of 8, but for QLoRA, a rank of 64 is recommended.

- Lora_alpha: This parameter controls the scaling of the low-rank approximation. It’s like finding the right balance between the original model and the low-rank approximation. Higher values can make the approximation more influential during the fine-tuning process, which can affect both performance and computational cost.

By adjusting these parameters, particularly lora_alpha and r, you can observe how the model’s performance and resource utilization change. This allows you to fine-tune the model for your specific task and find the optimal configuration.

# Recommended if you are using free google cloab GPU else you’ll get CUDA out of memory

os.environ[“PYTORCH_CUDA_ALLOC_CONF”] = “400”

# LoRA Config

peft_parameters = LoraConfig(

lora_alpha=16,

lora_dropout=0.1,

r=8,

bias=“none”,

task_type=“CAUSAL_LM”

)

# Training Params

train_params = TrainingArguments(

output_dir=“./results_modified”, # Output directory for saving model checkpoints and logs

num_train_epochs=1, # Number of training epochs

per_device_train_batch_size=4, # Batch size per device during training

gradient_accumulation_steps=1, # Number of gradient accumulation steps

optim=“paged_adamw_32bit”, # Optimizer choice (paged_adamw_32bit)

save_steps=25, # Save model checkpoints every 25 steps

logging_steps=25, # Log training information every 25 steps

learning_rate=2e-4, # Learning rate for the optimizer

weight_decay=0.001, # Weight decay for regularization

fp16=False, # Use 16-bit floating-point precision (False)

bf16=False, # Use 16-bit bfloat16 precision (False)

max_grad_norm=0.3, # Maximum gradient norm during training

max_steps=-1, # Maximum number of training steps (-1 means no limit)

warmup_ratio=0.03, # Warm-up ratio for the learning rate scheduler

group_by_length=True, # Group examples by input sequence length during training

lr_scheduler_type=“constant”, # Learning rate scheduler type (constant)

report_to=“tensorboard” # Report training metrics to TensorBoard

)

# Trainer

fine_tuning = SFTTrainer(

model=base_model, # Base model for fine-tuning

train_dataset=training_data, # Training dataset

peft_config=peft_parameters, # Configuration for peft

dataset_text_field=“text”, # Field in the dataset containing text data

tokenizer=llama_tokenizer, # Tokenizer for preprocessing text

args=train_params # Training arguments

)

# Training

fine_tuning.train() # Start the training process

# Save Model

fine_tuning.model.save_pretrained(refined_model) # Save the trained model to the specified directory

Query Fine-Tuned model

# Generate Text

query = “Are there any research center at NED University of Engineering and Technology?”

text_gen = pipeline(task=“text-generation”, model=base_model, tokenizer=llama_tokenizer, max_length=200)

output = text_gen(f“<s>[INST] {query} [/INST]”)

print(output[0][‘generated_text’])

You can find the code of this notebook here.

Conclusion

I asked both the fine-tuned and unfine-tuned models of LLaMA 2 about a university, and the fine-tuned model provided the correct result. The unfine-tuned model does not know about the query therefore it hallucinated the response.

Unfine-tuned

Fine-tuned

Revolutionize LLM with Llama 2 Fine-Tuning

With the introduction of LLaMA v1, we witnessed a surge in customized models like Alpaca, Vicuna, and WizardLM. This surge motivated various businesses to launch their own foundational models, such as OpenLLaMA, Falcon, and XGen, with licenses suitable for commercial purposes. LLaMA 2, the latest release, now combines the strengths of both approaches, offering an efficient foundational model with a more permissive license.

In the first half of 2023, the software landscape underwent a significant transformation with the widespread adoption of APIs like OpenAI API to build infrastructures based on Large Language Models (LLMs). Libraries like LangChain and LlamaIndex played crucial roles in this evolution.

As we move into the latter part of the year, fine-tuning or instruction tuning of these models is becoming a standard practice in the LLMOps workflow. This trend is motivated by several factors, including

- Potential cost savings

- The capacity to handle sensitive data

- The opportunity to develop models that can outperform well-known models like ChatGPT and GPT-4 in specific tasks.

Fine-Tuning:

Fine-tuning methods refer to various techniques used to enhance the performance of a pre-trained model by adapting it to a specific task or domain. These methods are valuable for optimizing a model’s weights and parameters to excel in the target task. Here are different fine-tuning methods:

- Supervised Fine-Tuning: This method involves further training a pre-trained language model (LLM) on a specific downstream task using labeled data. The model’s parameters are updated to excel in this task, such as text classification, named entity recognition, or sentiment analysis.

- Transfer Learning: Transfer learning involves repurposing a pre-trained model’s architecture and weights for a new task or domain. Typically, the model is initially trained on a broad dataset and is then fine-tuned to adapt to specific tasks or domains, making it an efficient approach.

- Sequential Fine-tuning: Sequential fine-tuning entails the gradual adaptation of a pre-trained model on multiple related tasks or domains in succession. This sequential learning helps the model capture intricate language patterns across various tasks, leading to improved generalization and performance.

- Task-specific Fine-tuning: Task-specific fine-tuning is a method where the pre-trained model undergoes further training on a dedicated dataset for a particular task or domain. While it demands more data and time than transfer learning, it can yield higher performance tailored to the specific task.

- Multi-task Learning: Multi-task learning involves fine-tuning the pre-trained model on several tasks simultaneously. This strategy enables the model to learn and leverage common features and representations across different tasks, ultimately enhancing its ability to generalize and perform well.

- Adapter Training: Adapter training entails training lightweight modules that are integrated into the pre-trained model. These adapters allow for fine-tuning on specific tasks without interfering with the original model’s performance on other tasks. This approach maintains efficiency while adapting to task-specific requirements.

Why fine-tune LLM?

Source: DeepLearningAI

The figure discusses the allocation of AI tasks within organizations, taking into account the amount of available data. On the left side of the spectrum, having a substantial amount of data allows organizations to train their own models from scratch, albeit at a high cost. Alternatively, if an organization possesses a moderate amount of data, it can fine-tune pre-existing models to achieve excellent performance. For those with limited data, the recommended approach is in-context learning, specifically through techniques like retrieval augmented generation using general models. However, our focus will be on the fine-tuning aspect, as it offers a favorable balance between accuracy, performance, and speed compared to larger, more general models.

Source: Intuitive Tutorials

Why LLaMA 2?

Before we dive into the detailed guide, let’s take a quick look at the benefits of Llama 2.

- Diverse Range: Llama 2 comes in various sizes, from 7 billion to a massive 70 billion parameters. It shares a similar architecture with Llama 1 but boasts improved capabilities.

- Extensive Training Data: This model has been trained on a massive dataset of 2 trillion tokens, demonstrating its vast exposure to a wide range of information.

- Enhanced Context: With an extended context length of 4,000 tokens, the model can better understand and generate extensive content.

- Grouped Query Attention (GQA): GQA has been introduced to enhance inference scalability, making attention calculations faster by storing previous token pair information.

- Performance Excellence: Llama 2 models consistently outperform their predecessors, particularly the Llama 2 70B version. They excel in various benchmarks, competing strongly with models like Llama 1 65B and even Falcon models.

- Open Source vs. Closed Source LLMs: When compared to models like GPT-3.5 or PaLM (540B), Llama 2 70B demonstrates impressive performance. While there may be a slight gap in certain benchmarks when compared to GPT-4 and PaLM-2, the model’s potential is evident.

Parameter Efficient Fine-Tuning (PEFT)

Parameter Efficient Fine-Tuning involves adapting pre-trained models to new tasks while making minimal changes to the model’s parameters. This is especially important for large neural network models like BERT, GPT, and similar ones. Let’s delve into why PEFT is so significant:

- Reduced Overfitting: Limited datasets can be problematic. Making too many parameter adjustments can lead to the model overfitting. PEFT allows us to strike a balance between the model’s flexibility and tailoring it to new tasks.

- Faster Training: Making fewer parameter changes results in fewer computations, which in turn leads to faster training sessions.

- Resource Efficiency: Training deep neural networks requires substantial computational resources. PEFT minimizes the computational and memory demands, making it more practical to deploy in resource-constrained environments.

- Knowledge Preservation: Extensive pretraining on diverse datasets equips models with valuable general knowledge. PEFT ensures that this wealth of knowledge is retained when adapting the model to new tasks.

PEFT Technique

The most popular PEFT technique is LoRA. Let’s see what it offers:

LoRA

LoRA, or Low Rank Adaptation, represents a groundbreaking advancement in the realm of large language models. At the beginning of the year, these models seemed accessible only to wealthy companies. However, LoRA has changed the landscape.

LoRA has made the use of large language models accessible to a wider audience. Its low-rank adaptation approach has significantly reduced the number of trainable parameters by up to 10,000 times. This results in:

- A threefold reduction in GPU requirements, which is typically a major bottleneck.

- Comparable, if not superior, performance even without fine-tuning the entire model.

In traditional fine-tuning, we modify the existing weights of a pre-trained model using new examples. Conventionally, this required a matrix of the same size. However, by employing creative methods and the concept of rank factorization, a matrix can be split into two smaller matrices. When multiplied together, they approximate the original matrix.

To illustrate, imagine a 1000×1000 matrix with 1,000,000 parameters. Through rank factorization, if the rank is, for instance, five, we could have two matrices, each sized 1000×5. When combined, they represent just 10,000 parameters, resulting in a significant reduction.

In recent days, researchers have introduced an extension of LoRA known as QLoRA.

QLoRA

QLoRA is an extension of LoRA that further introduces quantization to enhance parameter efficiency during fine-tuning. It builds on the principles of LoRA while introducing 4-bit NormalFloat (NF4) quantization and Double Quantization techniques.

Environment setup

!pip install -q torch peft==0.4.0 bitsandbytes==0.40.2 transformers==4.31.0 trl==0.4.7 accelerate

import torch

from datasets import load_dataset

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TrainingArguments, pipeline

from peft import LoraConfig

from trl import SFTTrainer

import os

About dataset

Source: HuggingFace

The dataset has undergone special processing to ensure a seamless match with Llama 2’s prompt format, making it ready for training without the need for additional modifications.

Since the data has already been adapted to Llama 2’s prompt format, it can be directly employed to tune the model for particular applications.

# Dataset

data_name = “m0hammadjaan/Dummy-NED-Positions” # Your dataset here

training_data = load_dataset(data_name, split=“train”)

Configuring the Model and Tokenizer

We start by specifying the pre-trained Llama 2 model and prepare for an improved version called “llama-2-7b-enhanced“. We load the tokenizer and make slight adjustments to ensure compatibility with half-precision floating-point numbers (fp16) operations. Working with fp16 can offer various advantages, including reduced memory usage and faster model training. However, it’s important to note that not all operations work seamlessly with this lower precision format, and tokenization, a crucial step in preparing text data for model training, is one of them.

Next, we load the pre-trained Llama 2 model with our quantization configurations. We then deactivate caching and configure a pretraining temperature parameter.

In order to shrink the model’s size and boost inference speed, we employ 4-bit quantization provided by the BitsAndBytesConfig. Quantization involves representing the model’s weights in a way that consumes less memory.

The configuration mentioned here uses the ‘nf4‘ type for quantization. You can experiment with different quantization types to explore potential performance variations.

# Model and tokenizer names

base_model_name = “NousResearch/Llama-2-7b-chat-hf”

refined_model = “llama-2-7b-enhanced”

# Tokenizer

llama_tokenizer = AutoTokenizer.from_pretrained(base_model_name, trust_remote_code=True)

llama_tokenizer.pad_token = llama_tokenizer.eos_token

llama_tokenizer.padding_side = “right” # Fix for fp16

# Quantization Config

quant_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type=“nf4”,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=False

)

# Model

base_model = AutoModelForCausalLM.from_pretrained(

base_model_name,

quantization_config=quant_config,

device_map = ‘auto’

)

base_model.config.use_cache = False

base_model.config.pretraining_tp = 1

Quantization Configuration

In the context of training a machine learning model using Low-Rank Adaptation (LoRA), several parameters play a significant role. Here’s a simplified explanation of each:

Parameters Specific to LoRA:

- Dropout Rate (lora_dropout): This parameter represents the probability that the output of each neuron is set to zero during training. It is used to prevent overfitting, which occurs when the model becomes too tailored to the training data.

- Rank (r): Rank measures how the original weight matrices are decomposed into simpler, smaller matrices. This decomposition reduces computational demands and memory usage. Lower ranks can make the model faster but may impact its performance. The original LoRA paper suggests starting with a rank of 8, but for QLoRA, a rank of 64 is recommended.

- Lora_alpha: This parameter controls the scaling of the low-rank approximation. It’s like finding the right balance between the original model and the low-rank approximation. Higher values can make the approximation more influential during the fine-tuning process, which can affect both performance and computational cost.

By adjusting these parameters, particularly lora_alpha and r, you can observe how the model’s performance and resource utilization change. This allows you to fine-tune the model for your specific task and find the optimal configuration.

# Recommended if you are using free google cloab GPU else you’ll get CUDA out of memory

os.environ[“PYTORCH_CUDA_ALLOC_CONF”] = “400”

# LoRA Config

peft_parameters = LoraConfig(

lora_alpha=16,

lora_dropout=0.1,

r=8,

bias=“none”,

task_type=“CAUSAL_LM”

)

# Training Params

train_params = TrainingArguments(

output_dir=“./results_modified”, # Output directory for saving model checkpoints and logs

num_train_epochs=1, # Number of training epochs

per_device_train_batch_size=4, # Batch size per device during training

gradient_accumulation_steps=1, # Number of gradient accumulation steps

optim=“paged_adamw_32bit”, # Optimizer choice (paged_adamw_32bit)

save_steps=25, # Save model checkpoints every 25 steps

logging_steps=25, # Log training information every 25 steps

learning_rate=2e-4, # Learning rate for the optimizer

weight_decay=0.001, # Weight decay for regularization

fp16=False, # Use 16-bit floating-point precision (False)

bf16=False, # Use 16-bit bfloat16 precision (False)

max_grad_norm=0.3, # Maximum gradient norm during training

max_steps=-1, # Maximum number of training steps (-1 means no limit)

warmup_ratio=0.03, # Warm-up ratio for the learning rate scheduler

group_by_length=True, # Group examples by input sequence length during training

lr_scheduler_type=“constant”, # Learning rate scheduler type (constant)

report_to=“tensorboard” # Report training metrics to TensorBoard

)

# Trainer

fine_tuning = SFTTrainer(

model=base_model, # Base model for fine-tuning

train_dataset=training_data, # Training dataset

peft_config=peft_parameters, # Configuration for peft

dataset_text_field=“text”, # Field in the dataset containing text data

tokenizer=llama_tokenizer, # Tokenizer for preprocessing text

args=train_params # Training arguments

)

# Training

fine_tuning.train() # Start the training process

# Save Model

fine_tuning.model.save_pretrained(refined_model) # Save the trained model to the specified directory

Query Fine-Tuned model

# Generate Text

query = “Are there any research center at NED University of Engineering and Technology?”

text_gen = pipeline(task=“text-generation”, model=base_model, tokenizer=llama_tokenizer, max_length=200)

output = text_gen(f“<s>[INST] {query} [/INST]”)

print(output[0][‘generated_text’])

You can find the code of this notebook here.

Conclusion

I asked both the fine-tuned and unfine-tuned models of LLaMA 2 about a university, and the fine-tuned model provided the correct result. The unfine-tuned model does not know about the query therefore it hallucinated the response.

Unfine-tuned

Fine-tuned

Revolutionize LLM with Llama 2 Fine-Tuning

With the introduction of LLaMA v1, we witnessed a surge in customized models like Alpaca, Vicuna, and WizardLM. This surge motivated various businesses to launch their own foundational models, such as OpenLLaMA, Falcon, and XGen, with licenses suitable for commercial purposes. LLaMA 2, the latest release, now combines the strengths of both approaches, offering an efficient foundational model with a more permissive license.

In the first half of 2023, the software landscape underwent a significant transformation with the widespread adoption of APIs like OpenAI API to build infrastructures based on Large Language Models (LLMs). Libraries like LangChain and LlamaIndex played crucial roles in this evolution.

As we move into the latter part of the year, fine-tuning or instruction tuning of these models is becoming a standard practice in the LLMOps workflow. This trend is motivated by several factors, including

- Potential cost savings

- The capacity to handle sensitive data

- The opportunity to develop models that can outperform well-known models like ChatGPT and GPT-4 in specific tasks.

Fine-Tuning:

Fine-tuning methods refer to various techniques used to enhance the performance of a pre-trained model by adapting it to a specific task or domain. These methods are valuable for optimizing a model’s weights and parameters to excel in the target task. Here are different fine-tuning methods:

- Supervised Fine-Tuning: This method involves further training a pre-trained language model (LLM) on a specific downstream task using labeled data. The model’s parameters are updated to excel in this task, such as text classification, named entity recognition, or sentiment analysis.

- Transfer Learning: Transfer learning involves repurposing a pre-trained model’s architecture and weights for a new task or domain. Typically, the model is initially trained on a broad dataset and is then fine-tuned to adapt to specific tasks or domains, making it an efficient approach.

- Sequential Fine-tuning: Sequential fine-tuning entails the gradual adaptation of a pre-trained model on multiple related tasks or domains in succession. This sequential learning helps the model capture intricate language patterns across various tasks, leading to improved generalization and performance.

- Task-specific Fine-tuning: Task-specific fine-tuning is a method where the pre-trained model undergoes further training on a dedicated dataset for a particular task or domain. While it demands more data and time than transfer learning, it can yield higher performance tailored to the specific task.

- Multi-task Learning: Multi-task learning involves fine-tuning the pre-trained model on several tasks simultaneously. This strategy enables the model to learn and leverage common features and representations across different tasks, ultimately enhancing its ability to generalize and perform well.

- Adapter Training: Adapter training entails training lightweight modules that are integrated into the pre-trained model. These adapters allow for fine-tuning on specific tasks without interfering with the original model’s performance on other tasks. This approach maintains efficiency while adapting to task-specific requirements.

Why fine-tune LLM?

Source: DeepLearningAI

The figure discusses the allocation of AI tasks within organizations, taking into account the amount of available data. On the left side of the spectrum, having a substantial amount of data allows organizations to train their own models from scratch, albeit at a high cost. Alternatively, if an organization possesses a moderate amount of data, it can fine-tune pre-existing models to achieve excellent performance. For those with limited data, the recommended approach is in-context learning, specifically through techniques like retrieval augmented generation using general models. However, our focus will be on the fine-tuning aspect, as it offers a favorable balance between accuracy, performance, and speed compared to larger, more general models.

Source: Intuitive Tutorials

Why LLaMA 2?

Before we dive into the detailed guide, let’s take a quick look at the benefits of Llama 2.

- Diverse Range: Llama 2 comes in various sizes, from 7 billion to a massive 70 billion parameters. It shares a similar architecture with Llama 1 but boasts improved capabilities.

- Extensive Training Data: This model has been trained on a massive dataset of 2 trillion tokens, demonstrating its vast exposure to a wide range of information.

- Enhanced Context: With an extended context length of 4,000 tokens, the model can better understand and generate extensive content.

- Grouped Query Attention (GQA): GQA has been introduced to enhance inference scalability, making attention calculations faster by storing previous token pair information.

- Performance Excellence: Llama 2 models consistently outperform their predecessors, particularly the Llama 2 70B version. They excel in various benchmarks, competing strongly with models like Llama 1 65B and even Falcon models.

- Open Source vs. Closed Source LLMs: When compared to models like GPT-3.5 or PaLM (540B), Llama 2 70B demonstrates impressive performance. While there may be a slight gap in certain benchmarks when compared to GPT-4 and PaLM-2, the model’s potential is evident.

Parameter Efficient Fine-Tuning (PEFT)

Parameter Efficient Fine-Tuning involves adapting pre-trained models to new tasks while making minimal changes to the model’s parameters. This is especially important for large neural network models like BERT, GPT, and similar ones. Let’s delve into why PEFT is so significant:

- Reduced Overfitting: Limited datasets can be problematic. Making too many parameter adjustments can lead to the model overfitting. PEFT allows us to strike a balance between the model’s flexibility and tailoring it to new tasks.

- Faster Training: Making fewer parameter changes results in fewer computations, which in turn leads to faster training sessions.

- Resource Efficiency: Training deep neural networks requires substantial computational resources. PEFT minimizes the computational and memory demands, making it more practical to deploy in resource-constrained environments.

- Knowledge Preservation: Extensive pretraining on diverse datasets equips models with valuable general knowledge. PEFT ensures that this wealth of knowledge is retained when adapting the model to new tasks.

PEFT Technique

The most popular PEFT technique is LoRA. Let’s see what it offers:

LoRA

LoRA, or Low Rank Adaptation, represents a groundbreaking advancement in the realm of large language models. At the beginning of the year, these models seemed accessible only to wealthy companies. However, LoRA has changed the landscape.

LoRA has made the use of large language models accessible to a wider audience. Its low-rank adaptation approach has significantly reduced the number of trainable parameters by up to 10,000 times. This results in:

- A threefold reduction in GPU requirements, which is typically a major bottleneck.

- Comparable, if not superior, performance even without fine-tuning the entire model.

In traditional fine-tuning, we modify the existing weights of a pre-trained model using new examples. Conventionally, this required a matrix of the same size. However, by employing creative methods and the concept of rank factorization, a matrix can be split into two smaller matrices. When multiplied together, they approximate the original matrix.

To illustrate, imagine a 1000×1000 matrix with 1,000,000 parameters. Through rank factorization, if the rank is, for instance, five, we could have two matrices, each sized 1000×5. When combined, they represent just 10,000 parameters, resulting in a significant reduction.

In recent days, researchers have introduced an extension of LoRA known as QLoRA.

QLoRA

QLoRA is an extension of LoRA that further introduces quantization to enhance parameter efficiency during fine-tuning. It builds on the principles of LoRA while introducing 4-bit NormalFloat (NF4) quantization and Double Quantization techniques.

Environment setup

!pip install -q torch peft==0.4.0 bitsandbytes==0.40.2 transformers==4.31.0 trl==0.4.7 accelerate

import torch

from datasets import load_dataset

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TrainingArguments, pipeline

from peft import LoraConfig

from trl import SFTTrainer

import os

About dataset

Source: HuggingFace

The dataset has undergone special processing to ensure a seamless match with Llama 2’s prompt format, making it ready for training without the need for additional modifications.

Since the data has already been adapted to Llama 2’s prompt format, it can be directly employed to tune the model for particular applications.

# Dataset

data_name = “m0hammadjaan/Dummy-NED-Positions” # Your dataset here

training_data = load_dataset(data_name, split=“train”)

Configuring the Model and Tokenizer

We start by specifying the pre-trained Llama 2 model and prepare for an improved version called “llama-2-7b-enhanced“. We load the tokenizer and make slight adjustments to ensure compatibility with half-precision floating-point numbers (fp16) operations. Working with fp16 can offer various advantages, including reduced memory usage and faster model training. However, it’s important to note that not all operations work seamlessly with this lower precision format, and tokenization, a crucial step in preparing text data for model training, is one of them.

Next, we load the pre-trained Llama 2 model with our quantization configurations. We then deactivate caching and configure a pretraining temperature parameter.

In order to shrink the model’s size and boost inference speed, we employ 4-bit quantization provided by the BitsAndBytesConfig. Quantization involves representing the model’s weights in a way that consumes less memory.

The configuration mentioned here uses the ‘nf4‘ type for quantization. You can experiment with different quantization types to explore potential performance variations.

# Model and tokenizer names

base_model_name = “NousResearch/Llama-2-7b-chat-hf”

refined_model = “llama-2-7b-enhanced”

# Tokenizer

llama_tokenizer = AutoTokenizer.from_pretrained(base_model_name, trust_remote_code=True)

llama_tokenizer.pad_token = llama_tokenizer.eos_token

llama_tokenizer.padding_side = “right” # Fix for fp16

# Quantization Config

quant_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type=“nf4”,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=False

)

# Model

base_model = AutoModelForCausalLM.from_pretrained(

base_model_name,

quantization_config=quant_config,

device_map = ‘auto’

)

base_model.config.use_cache = False

base_model.config.pretraining_tp = 1

Quantization Configuration

In the context of training a machine learning model using Low-Rank Adaptation (LoRA), several parameters play a significant role. Here’s a simplified explanation of each:

Parameters Specific to LoRA:

- Dropout Rate (lora_dropout): This parameter represents the probability that the output of each neuron is set to zero during training. It is used to prevent overfitting, which occurs when the model becomes too tailored to the training data.

- Rank (r): Rank measures how the original weight matrices are decomposed into simpler, smaller matrices. This decomposition reduces computational demands and memory usage. Lower ranks can make the model faster but may impact its performance. The original LoRA paper suggests starting with a rank of 8, but for QLoRA, a rank of 64 is recommended.

- Lora_alpha: This parameter controls the scaling of the low-rank approximation. It’s like finding the right balance between the original model and the low-rank approximation. Higher values can make the approximation more influential during the fine-tuning process, which can affect both performance and computational cost.

By adjusting these parameters, particularly lora_alpha and r, you can observe how the model’s performance and resource utilization change. This allows you to fine-tune the model for your specific task and find the optimal configuration.

# Recommended if you are using free google cloab GPU else you’ll get CUDA out of memory

os.environ[“PYTORCH_CUDA_ALLOC_CONF”] = “400”

# LoRA Config

peft_parameters = LoraConfig(

lora_alpha=16,

lora_dropout=0.1,

r=8,

bias=“none”,

task_type=“CAUSAL_LM”

)

# Training Params

train_params = TrainingArguments(

output_dir=“./results_modified”, # Output directory for saving model checkpoints and logs

num_train_epochs=1, # Number of training epochs

per_device_train_batch_size=4, # Batch size per device during training

gradient_accumulation_steps=1, # Number of gradient accumulation steps

optim=“paged_adamw_32bit”, # Optimizer choice (paged_adamw_32bit)

save_steps=25, # Save model checkpoints every 25 steps

logging_steps=25, # Log training information every 25 steps

learning_rate=2e-4, # Learning rate for the optimizer

weight_decay=0.001, # Weight decay for regularization

fp16=False, # Use 16-bit floating-point precision (False)

bf16=False, # Use 16-bit bfloat16 precision (False)

max_grad_norm=0.3, # Maximum gradient norm during training

max_steps=-1, # Maximum number of training steps (-1 means no limit)

warmup_ratio=0.03, # Warm-up ratio for the learning rate scheduler

group_by_length=True, # Group examples by input sequence length during training

lr_scheduler_type=“constant”, # Learning rate scheduler type (constant)

report_to=“tensorboard” # Report training metrics to TensorBoard

)

# Trainer

fine_tuning = SFTTrainer(

model=base_model, # Base model for fine-tuning

train_dataset=training_data, # Training dataset

peft_config=peft_parameters, # Configuration for peft

dataset_text_field=“text”, # Field in the dataset containing text data

tokenizer=llama_tokenizer, # Tokenizer for preprocessing text

args=train_params # Training arguments

)

# Training

fine_tuning.train() # Start the training process

# Save Model

fine_tuning.model.save_pretrained(refined_model) # Save the trained model to the specified directory

Query Fine-Tuned model

# Generate Text

query = “Are there any research center at NED University of Engineering and Technology?”

text_gen = pipeline(task=“text-generation”, model=base_model, tokenizer=llama_tokenizer, max_length=200)

output = text_gen(f“<s>[INST] {query} [/INST]”)

print(output[0][‘generated_text’])

You can find the code of this notebook here.

Conclusion

I asked both the fine-tuned and unfine-tuned models of LLaMA 2 about a university, and the fine-tuned model provided the correct result. The unfine-tuned model does not know about the query therefore it hallucinated the response.

Unfine-tuned

Fine-tuned

Revolutionize LLM with Llama 2 Fine-Tuning

With the introduction of LLaMA v1, we witnessed a surge in customized models like Alpaca, Vicuna, and WizardLM. This surge motivated various businesses to launch their own foundational models, such as OpenLLaMA, Falcon, and XGen, with licenses suitable for commercial purposes. LLaMA 2, the latest release, now combines the strengths of both approaches, offering an efficient foundational model with a more permissive license.

In the first half of 2023, the software landscape underwent a significant transformation with the widespread adoption of APIs like OpenAI API to build infrastructures based on Large Language Models (LLMs). Libraries like LangChain and LlamaIndex played crucial roles in this evolution.

As we move into the latter part of the year, fine-tuning or instruction tuning of these models is becoming a standard practice in the LLMOps workflow. This trend is motivated by several factors, including

- Potential cost savings

- The capacity to handle sensitive data

- The opportunity to develop models that can outperform well-known models like ChatGPT and GPT-4 in specific tasks.

Fine-Tuning:

Fine-tuning methods refer to various techniques used to enhance the performance of a pre-trained model by adapting it to a specific task or domain. These methods are valuable for optimizing a model’s weights and parameters to excel in the target task. Here are different fine-tuning methods:

- Supervised Fine-Tuning: This method involves further training a pre-trained language model (LLM) on a specific downstream task using labeled data. The model’s parameters are updated to excel in this task, such as text classification, named entity recognition, or sentiment analysis.

- Transfer Learning: Transfer learning involves repurposing a pre-trained model’s architecture and weights for a new task or domain. Typically, the model is initially trained on a broad dataset and is then fine-tuned to adapt to specific tasks or domains, making it an efficient approach.

- Sequential Fine-tuning: Sequential fine-tuning entails the gradual adaptation of a pre-trained model on multiple related tasks or domains in succession. This sequential learning helps the model capture intricate language patterns across various tasks, leading to improved generalization and performance.

- Task-specific Fine-tuning: Task-specific fine-tuning is a method where the pre-trained model undergoes further training on a dedicated dataset for a particular task or domain. While it demands more data and time than transfer learning, it can yield higher performance tailored to the specific task.

- Multi-task Learning: Multi-task learning involves fine-tuning the pre-trained model on several tasks simultaneously. This strategy enables the model to learn and leverage common features and representations across different tasks, ultimately enhancing its ability to generalize and perform well.

- Adapter Training: Adapter training entails training lightweight modules that are integrated into the pre-trained model. These adapters allow for fine-tuning on specific tasks without interfering with the original model’s performance on other tasks. This approach maintains efficiency while adapting to task-specific requirements.

Why fine-tune LLM?

Source: DeepLearningAI

The figure discusses the allocation of AI tasks within organizations, taking into account the amount of available data. On the left side of the spectrum, having a substantial amount of data allows organizations to train their own models from scratch, albeit at a high cost. Alternatively, if an organization possesses a moderate amount of data, it can fine-tune pre-existing models to achieve excellent performance. For those with limited data, the recommended approach is in-context learning, specifically through techniques like retrieval augmented generation using general models. However, our focus will be on the fine-tuning aspect, as it offers a favorable balance between accuracy, performance, and speed compared to larger, more general models.

Source: Intuitive Tutorials

Why LLaMA 2?

Before we dive into the detailed guide, let’s take a quick look at the benefits of Llama 2.

- Diverse Range: Llama 2 comes in various sizes, from 7 billion to a massive 70 billion parameters. It shares a similar architecture with Llama 1 but boasts improved capabilities.

- Extensive Training Data: This model has been trained on a massive dataset of 2 trillion tokens, demonstrating its vast exposure to a wide range of information.

- Enhanced Context: With an extended context length of 4,000 tokens, the model can better understand and generate extensive content.

- Grouped Query Attention (GQA): GQA has been introduced to enhance inference scalability, making attention calculations faster by storing previous token pair information.

- Performance Excellence: Llama 2 models consistently outperform their predecessors, particularly the Llama 2 70B version. They excel in various benchmarks, competing strongly with models like Llama 1 65B and even Falcon models.

- Open Source vs. Closed Source LLMs: When compared to models like GPT-3.5 or PaLM (540B), Llama 2 70B demonstrates impressive performance. While there may be a slight gap in certain benchmarks when compared to GPT-4 and PaLM-2, the model’s potential is evident.

Parameter Efficient Fine-Tuning (PEFT)

Parameter Efficient Fine-Tuning involves adapting pre-trained models to new tasks while making minimal changes to the model’s parameters. This is especially important for large neural network models like BERT, GPT, and similar ones. Let’s delve into why PEFT is so significant:

- Reduced Overfitting: Limited datasets can be problematic. Making too many parameter adjustments can lead to the model overfitting. PEFT allows us to strike a balance between the model’s flexibility and tailoring it to new tasks.

- Faster Training: Making fewer parameter changes results in fewer computations, which in turn leads to faster training sessions.

- Resource Efficiency: Training deep neural networks requires substantial computational resources. PEFT minimizes the computational and memory demands, making it more practical to deploy in resource-constrained environments.

- Knowledge Preservation: Extensive pretraining on diverse datasets equips models with valuable general knowledge. PEFT ensures that this wealth of knowledge is retained when adapting the model to new tasks.

PEFT Technique

The most popular PEFT technique is LoRA. Let’s see what it offers:

LoRA

LoRA, or Low Rank Adaptation, represents a groundbreaking advancement in the realm of large language models. At the beginning of the year, these models seemed accessible only to wealthy companies. However, LoRA has changed the landscape.

LoRA has made the use of large language models accessible to a wider audience. Its low-rank adaptation approach has significantly reduced the number of trainable parameters by up to 10,000 times. This results in:

- A threefold reduction in GPU requirements, which is typically a major bottleneck.

- Comparable, if not superior, performance even without fine-tuning the entire model.

In traditional fine-tuning, we modify the existing weights of a pre-trained model using new examples. Conventionally, this required a matrix of the same size. However, by employing creative methods and the concept of rank factorization, a matrix can be split into two smaller matrices. When multiplied together, they approximate the original matrix.

To illustrate, imagine a 1000×1000 matrix with 1,000,000 parameters. Through rank factorization, if the rank is, for instance, five, we could have two matrices, each sized 1000×5. When combined, they represent just 10,000 parameters, resulting in a significant reduction.

In recent days, researchers have introduced an extension of LoRA known as QLoRA.

QLoRA

QLoRA is an extension of LoRA that further introduces quantization to enhance parameter efficiency during fine-tuning. It builds on the principles of LoRA while introducing 4-bit NormalFloat (NF4) quantization and Double Quantization techniques.

Environment setup

!pip install -q torch peft==0.4.0 bitsandbytes==0.40.2 transformers==4.31.0 trl==0.4.7 accelerate

import torch

from datasets import load_dataset

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TrainingArguments, pipeline

from peft import LoraConfig

from trl import SFTTrainer

import os

About dataset

Source: HuggingFace

The dataset has undergone special processing to ensure a seamless match with Llama 2’s prompt format, making it ready for training without the need for additional modifications.

Since the data has already been adapted to Llama 2’s prompt format, it can be directly employed to tune the model for particular applications.

# Dataset

data_name = “m0hammadjaan/Dummy-NED-Positions” # Your dataset here

training_data = load_dataset(data_name, split=“train”)

Configuring the Model and Tokenizer

We start by specifying the pre-trained Llama 2 model and prepare for an improved version called “llama-2-7b-enhanced“. We load the tokenizer and make slight adjustments to ensure compatibility with half-precision floating-point numbers (fp16) operations. Working with fp16 can offer various advantages, including reduced memory usage and faster model training. However, it’s important to note that not all operations work seamlessly with this lower precision format, and tokenization, a crucial step in preparing text data for model training, is one of them.

Next, we load the pre-trained Llama 2 model with our quantization configurations. We then deactivate caching and configure a pretraining temperature parameter.

In order to shrink the model’s size and boost inference speed, we employ 4-bit quantization provided by the BitsAndBytesConfig. Quantization involves representing the model’s weights in a way that consumes less memory.

The configuration mentioned here uses the ‘nf4‘ type for quantization. You can experiment with different quantization types to explore potential performance variations.

# Model and tokenizer names

base_model_name = “NousResearch/Llama-2-7b-chat-hf”

refined_model = “llama-2-7b-enhanced”

# Tokenizer

llama_tokenizer = AutoTokenizer.from_pretrained(base_model_name, trust_remote_code=True)

llama_tokenizer.pad_token = llama_tokenizer.eos_token

llama_tokenizer.padding_side = “right” # Fix for fp16

# Quantization Config

quant_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type=“nf4”,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=False

)

# Model

base_model = AutoModelForCausalLM.from_pretrained(

base_model_name,

quantization_config=quant_config,

device_map = ‘auto’

)

base_model.config.use_cache = False

base_model.config.pretraining_tp = 1

Quantization Configuration

In the context of training a machine learning model using Low-Rank Adaptation (LoRA), several parameters play a significant role. Here’s a simplified explanation of each:

Parameters Specific to LoRA:

- Dropout Rate (lora_dropout): This parameter represents the probability that the output of each neuron is set to zero during training. It is used to prevent overfitting, which occurs when the model becomes too tailored to the training data.

- Rank (r): Rank measures how the original weight matrices are decomposed into simpler, smaller matrices. This decomposition reduces computational demands and memory usage. Lower ranks can make the model faster but may impact its performance. The original LoRA paper suggests starting with a rank of 8, but for QLoRA, a rank of 64 is recommended.

- Lora_alpha: This parameter controls the scaling of the low-rank approximation. It’s like finding the right balance between the original model and the low-rank approximation. Higher values can make the approximation more influential during the fine-tuning process, which can affect both performance and computational cost.

By adjusting these parameters, particularly lora_alpha and r, you can observe how the model’s performance and resource utilization change. This allows you to fine-tune the model for your specific task and find the optimal configuration.

# Recommended if you are using free google cloab GPU else you’ll get CUDA out of memory

os.environ[“PYTORCH_CUDA_ALLOC_CONF”] = “400”

# LoRA Config

peft_parameters = LoraConfig(

lora_alpha=16,

lora_dropout=0.1,

r=8,

bias=“none”,

task_type=“CAUSAL_LM”

)

# Training Params

train_params = TrainingArguments(

output_dir=“./results_modified”, # Output directory for saving model checkpoints and logs

num_train_epochs=1, # Number of training epochs

per_device_train_batch_size=4, # Batch size per device during training

gradient_accumulation_steps=1, # Number of gradient accumulation steps

optim=“paged_adamw_32bit”, # Optimizer choice (paged_adamw_32bit)

save_steps=25, # Save model checkpoints every 25 steps

logging_steps=25, # Log training information every 25 steps

learning_rate=2e-4, # Learning rate for the optimizer

weight_decay=0.001, # Weight decay for regularization

fp16=False, # Use 16-bit floating-point precision (False)

bf16=False, # Use 16-bit bfloat16 precision (False)

max_grad_norm=0.3, # Maximum gradient norm during training

max_steps=-1, # Maximum number of training steps (-1 means no limit)

warmup_ratio=0.03, # Warm-up ratio for the learning rate scheduler

group_by_length=True, # Group examples by input sequence length during training

lr_scheduler_type=“constant”, # Learning rate scheduler type (constant)

report_to=“tensorboard” # Report training metrics to TensorBoard

)

# Trainer

fine_tuning = SFTTrainer(

model=base_model, # Base model for fine-tuning

train_dataset=training_data, # Training dataset

peft_config=peft_parameters, # Configuration for peft

dataset_text_field=“text”, # Field in the dataset containing text data

tokenizer=llama_tokenizer, # Tokenizer for preprocessing text

args=train_params # Training arguments

)

# Training

fine_tuning.train() # Start the training process

# Save Model

fine_tuning.model.save_pretrained(refined_model) # Save the trained model to the specified directory

Query Fine-Tuned model

# Generate Text

query = “Are there any research center at NED University of Engineering and Technology?”

text_gen = pipeline(task=“text-generation”, model=base_model, tokenizer=llama_tokenizer, max_length=200)

output = text_gen(f“<s>[INST] {query} [/INST]”)

print(output[0][‘generated_text’])

You can find the code of this notebook here.

Conclusion

I asked both the fine-tuned and unfine-tuned models of LLaMA 2 about a university, and the fine-tuned model provided the correct result. The unfine-tuned model does not know about the query therefore it hallucinated the response.

Unfine-tuned

Fine-tuned

Revolutionize LLM with Llama 2 Fine-Tuning

With the introduction of LLaMA v1, we witnessed a surge in customized models like Alpaca, Vicuna, and WizardLM. This surge motivated various businesses to launch their own foundational models, such as OpenLLaMA, Falcon, and XGen, with licenses suitable for commercial purposes. LLaMA 2, the latest release, now combines the strengths of both approaches, offering an efficient foundational model with a more permissive license.

In the first half of 2023, the software landscape underwent a significant transformation with the widespread adoption of APIs like OpenAI API to build infrastructures based on Large Language Models (LLMs). Libraries like LangChain and LlamaIndex played crucial roles in this evolution.

As we move into the latter part of the year, fine-tuning or instruction tuning of these models is becoming a standard practice in the LLMOps workflow. This trend is motivated by several factors, including

- Potential cost savings

- The capacity to handle sensitive data

- The opportunity to develop models that can outperform well-known models like ChatGPT and GPT-4 in specific tasks.

Fine-Tuning:

Fine-tuning methods refer to various techniques used to enhance the performance of a pre-trained model by adapting it to a specific task or domain. These methods are valuable for optimizing a model’s weights and parameters to excel in the target task. Here are different fine-tuning methods:

- Supervised Fine-Tuning: This method involves further training a pre-trained language model (LLM) on a specific downstream task using labeled data. The model’s parameters are updated to excel in this task, such as text classification, named entity recognition, or sentiment analysis.

- Transfer Learning: Transfer learning involves repurposing a pre-trained model’s architecture and weights for a new task or domain. Typically, the model is initially trained on a broad dataset and is then fine-tuned to adapt to specific tasks or domains, making it an efficient approach.

- Sequential Fine-tuning: Sequential fine-tuning entails the gradual adaptation of a pre-trained model on multiple related tasks or domains in succession. This sequential learning helps the model capture intricate language patterns across various tasks, leading to improved generalization and performance.

- Task-specific Fine-tuning: Task-specific fine-tuning is a method where the pre-trained model undergoes further training on a dedicated dataset for a particular task or domain. While it demands more data and time than transfer learning, it can yield higher performance tailored to the specific task.

- Multi-task Learning: Multi-task learning involves fine-tuning the pre-trained model on several tasks simultaneously. This strategy enables the model to learn and leverage common features and representations across different tasks, ultimately enhancing its ability to generalize and perform well.

- Adapter Training: Adapter training entails training lightweight modules that are integrated into the pre-trained model. These adapters allow for fine-tuning on specific tasks without interfering with the original model’s performance on other tasks. This approach maintains efficiency while adapting to task-specific requirements.

Why fine-tune LLM?

Source: DeepLearningAI

The figure discusses the allocation of AI tasks within organizations, taking into account the amount of available data. On the left side of the spectrum, having a substantial amount of data allows organizations to train their own models from scratch, albeit at a high cost. Alternatively, if an organization possesses a moderate amount of data, it can fine-tune pre-existing models to achieve excellent performance. For those with limited data, the recommended approach is in-context learning, specifically through techniques like retrieval augmented generation using general models. However, our focus will be on the fine-tuning aspect, as it offers a favorable balance between accuracy, performance, and speed compared to larger, more general models.

Source: Intuitive Tutorials

Why LLaMA 2?

Before we dive into the detailed guide, let’s take a quick look at the benefits of Llama 2.

- Diverse Range: Llama 2 comes in various sizes, from 7 billion to a massive 70 billion parameters. It shares a similar architecture with Llama 1 but boasts improved capabilities.

- Extensive Training Data: This model has been trained on a massive dataset of 2 trillion tokens, demonstrating its vast exposure to a wide range of information.

- Enhanced Context: With an extended context length of 4,000 tokens, the model can better understand and generate extensive content.

- Grouped Query Attention (GQA): GQA has been introduced to enhance inference scalability, making attention calculations faster by storing previous token pair information.

- Performance Excellence: Llama 2 models consistently outperform their predecessors, particularly the Llama 2 70B version. They excel in various benchmarks, competing strongly with models like Llama 1 65B and even Falcon models.

- Open Source vs. Closed Source LLMs: When compared to models like GPT-3.5 or PaLM (540B), Llama 2 70B demonstrates impressive performance. While there may be a slight gap in certain benchmarks when compared to GPT-4 and PaLM-2, the model’s potential is evident.

Parameter Efficient Fine-Tuning (PEFT)