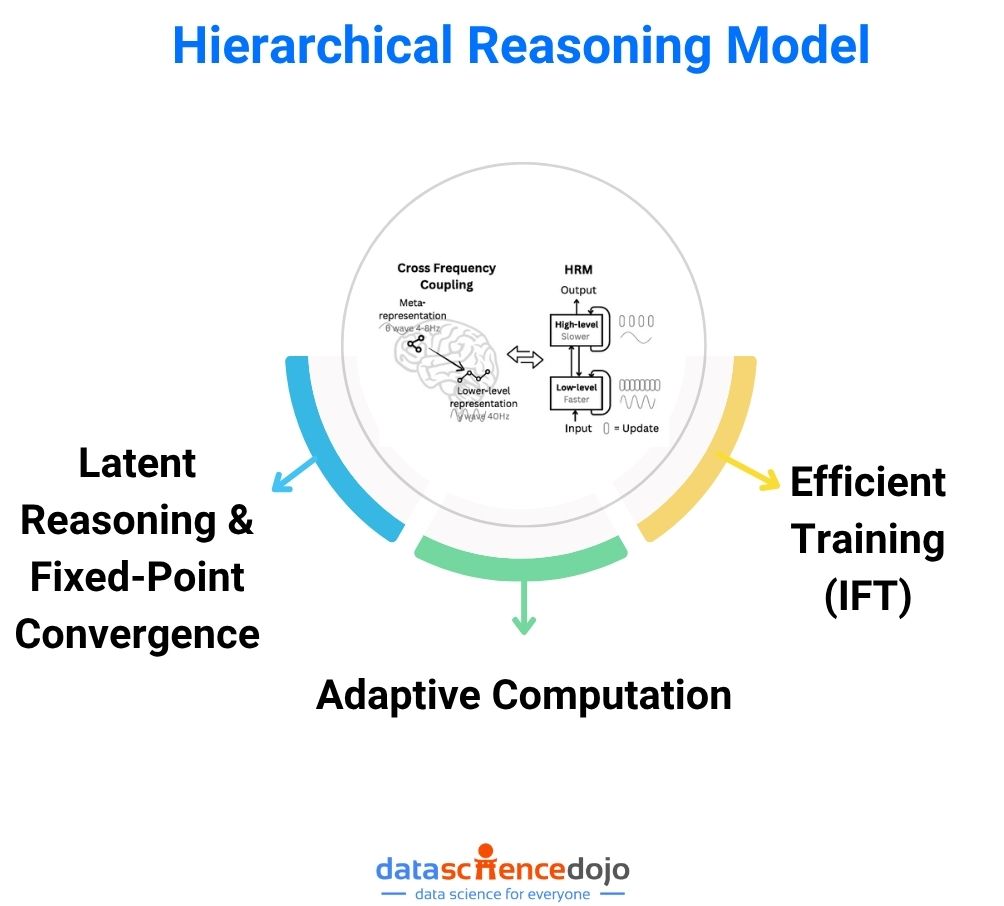

The hierarchical reasoning model (HRM) is changing how artificial intelligence (AI) systems approach complex problem-solving. In short, it is a brain-inspired architecture that lets AI break down and solve intricate tasks through multi-level reasoning, adaptive computation, and deep latent processing. The approach is gaining traction in the data science and machine learning communities as a possible step toward artificial general intelligence.

What is a Hierarchical Reasoning Model?

A hierarchical reasoning model (HRM) is an AI architecture designed to mimic the brain’s ability to process information at multiple levels of abstraction and timescales. Unlike traditional deep learning architectures, which usually pass every input through a fixed stack of layers, HRMs employ a nested, recurrent structure. This allows them to perform multi-level reasoning, from high-level planning to low-level execution, within a single, unified model.

Why Standard AI Models Hit a Ceiling

Most large language models (LLMs) and deep learning systems use a fixed number of layers. Whether the task is a simple math problem or a complex maze, the input passes through the same number of layers. This limitation, known as fixed computational depth, restricts the model’s ability to handle tasks that require extended, step-by-step reasoning.

Chain-of-thought prompting has been a workaround, where models are guided to break down problems into intermediate steps. However, this approach is brittle, data-hungry, and often slow, especially for tasks demanding deep logical inference or symbolic manipulation.

The Brain-Inspired Solution: Hierarchical Reasoning Model Explained

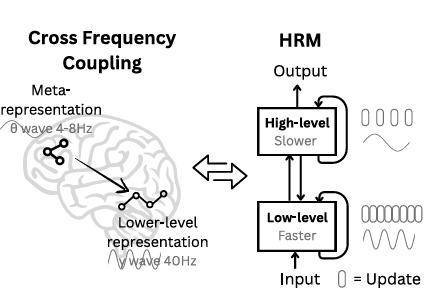

The hierarchical reasoning model draws inspiration from the human brain’s hierarchical and multi-timescale processing. In the brain, higher-order regions handle abstract planning over longer timescales, while lower-level circuits execute rapid, detailed computations. HRM replicates this by integrating two interdependent recurrent modules:

High-Level Module: Responsible for slow, abstract planning and global strategy.

Low-Level Module: Handles fast, detailed computations and local problem-solving.

This nested loop allows the model to achieve significant computational depth and flexibility, overcoming the limitations of fixed-layer architectures.

Technical Architecture: How the Hierarchical Reasoning Model Works

1. Latent Reasoning and Fixed-Point Convergence

Latent reasoning in HRM refers to the model’s ability to perform complex, multi-step computations entirely within its internal neural states—without externalizing intermediate steps as text, as is done in chain-of-thought (CoT) prompting. This is a fundamental shift: while CoT models “think out loud” by generating step-by-step text, HRM “thinks silently,” iterating internally until it converges on a solution.

How HRM Achieves Latent Reasoning

- Hierarchical Modules: HRM consists of two interdependent recurrent modules:

  - A high-level module (H) for slow, abstract planning.

  - A low-level module (L) for rapid, detailed computation.

- Nested Iteration: For each high-level step, the low-level module performs multiple fast iterations, refining its state based on the current high-level context.

- Hierarchical Convergence: The low-level module converges to a local equilibrium (fixed point) within each high-level cycle. After several such cycles, the high-level module itself converges to a global fixed point representing the solution.

- Fixed-Point Solution: The process continues until both modules reach a stable state—this is the “fixed point.” The final output is generated from this converged high-level state.
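
The nested iteration described above can be sketched in a few lines of Python. This is a toy illustration, not the authors’ implementation: the scalar states, the `tanh` update rules, their coefficients, and the names `low_level_step`, `high_level_step`, and `hrm_forward` are all assumptions made for the example. The real model uses learned neural modules rather than hand-picked formulas.

```python
import math


def low_level_step(z_l, z_h, x):
    """Toy fast update: refine the low-level state given the current plan and input."""
    return math.tanh(0.5 * z_l + 0.3 * z_h + x)


def high_level_step(z_h, z_l):
    """Toy slow update: fold the settled low-level state into the high-level plan."""
    return math.tanh(0.6 * z_h + 0.4 * z_l)


def hrm_forward(x, n_cycles=4, t_steps=8):
    """Run n_cycles high-level updates, each preceded by t_steps low-level updates.

    Returns the final high-level state and the high-level state after every cycle.
    """
    z_h, z_l = 0.0, 0.0
    trace = []
    for _ in range(n_cycles):
        # Inner loop: the low-level state settles while the plan z_h is held fixed.
        for _ in range(t_steps):
            z_l = low_level_step(z_l, z_h, x)
        # Outer loop: the plan is updated once per cycle from the settled state.
        z_h = high_level_step(z_h, z_l)
        trace.append(z_h)
    return z_h, trace


if __name__ == "__main__":
    final_state, trace = hrm_forward(x=0.2, n_cycles=10)
    for cycle, state in enumerate(trace, start=1):
        print(f"cycle {cycle}: high-level state = {state:.6f}")
```

In this sketch the total number of sequential updates is `n_cycles * (t_steps + 1)`, which is how a small set of reused modules can reach a large effective depth. The changes in the high-level state shrink from cycle to cycle, which is the toy analogue of converging toward a fixed point.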

Analogy:

Imagine a manager (high-level) assigning a task to an intern (low-level). The intern works intensely, reports back, and the manager updates the plan. This loop continues until both agree the task is complete. All this “reasoning” happens internally, not as a written log.

Why is this powerful?

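- It allows the model to reach an effective reasoning depth far beyond its layer count in a single forward pass (roughly the number of high-level cycles times the low-level steps per cycle), breaking free from the fixed-depth limitation of standard Transformers.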

- It enables the model to “think” as long as needed for each problem, rather than being constrained by a fixed number of layers or steps.

2. Efficient Training with the Implicit Function Theorem

Training deep, recurrent models like HRM is challenging because traditional backpropagation through time (BPTT) requires storing all intermediate states, leading to high memory and computational costs.

HRM’s Solution: The Implicit Function Theorem (IFT)

- Fixed-Point Gradients: If a recurrent network converges to a fixed point, the gradient of the loss with respect to the model parameters can be computed directly at that fixed point, without unrolling all intermediate steps.

- 1-Step Gradient Approximation: In practice, HRM uses a “1-step gradient” approximation. The exact fixed-point gradient contains a matrix inverse of the form (I - J)^(-1), where J is the Jacobian of the update at the fixed point; HRM replaces this inverse with the identity matrix for efficiency.

  - This allows gradients to be computed using only the final states, reducing memory usage from O(T) to O(1), where T is the number of steps.
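
The reasoning behind the points above can be written out as a short derivation. It is a generic sketch for any recurrent update that converges to a fixed point. The symbols F (the update), z* (the fixed-point state), θ (the parameters), and J_F (the Jacobian) are notation chosen here, not taken from the original post.

```latex
\begin{aligned}
% Fixed point of the recurrent update F with parameters \theta
z^\star &= F(z^\star;\, \theta) \\[4pt]
% Differentiate both sides with respect to \theta and solve for the derivative
\frac{\partial z^\star}{\partial \theta}
  &= \left(I - J_F\right)^{-1} \frac{\partial F}{\partial \theta},
  \qquad J_F = \left.\frac{\partial F}{\partial z}\right|_{z^\star} \\[4pt]
% Neumann series, valid when the spectral radius of J_F is below 1
\left(I - J_F\right)^{-1} &= I + J_F + J_F^{2} + \cdots \\[4pt]
% 1-step approximation: keep only the first term of the series
\frac{\partial z^\star}{\partial \theta}
  &\approx \frac{\partial F}{\partial \theta}
\end{aligned}
```

Keeping only the first term means the backward pass touches just the last update, which is why no intermediate states need to be stored.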

Benefits:

- Scalability: Enables training of very deep or recurrent models without running out of memory.

- Biological Plausibility: Mirrors how the brain might perform credit assignment without replaying all past activity.

- Practicality: Works well in practice for equilibrium models like HRM, as shown in recent research.
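
To make the trade-off concrete, here is a small numerical check on a scalar toy problem. Everything in it is an assumption made for illustration: the linear update `z = a * z + theta * x`, the value `a = 0.5`, and the function names. It compares the exact fixed-point gradient, where the “matrix inverse” reduces to `1 / (1 - a)`, with the 1-step version that replaces this factor with 1.

```python
def fixed_point(theta, x, a=0.5, steps=200):
    """Iterate the toy update z = a*z + theta*x until it has effectively converged."""
    z = 0.0
    for _ in range(steps):
        z = a * z + theta * x
    return z


def exact_gradient(x, a=0.5):
    """d z*/d theta from the implicit function theorem: (1 - a)^(-1) * dF/dtheta."""
    return x / (1.0 - a)


def one_step_gradient(x):
    """1-step approximation: the inverse factor is replaced by 1."""
    return x


def finite_difference(theta, x, a=0.5, eps=1e-6):
    """Numerical reference obtained by re-running the iteration at theta +/- eps."""
    upper = fixed_point(theta + eps, x, a)
    lower = fixed_point(theta - eps, x, a)
    return (upper - lower) / (2.0 * eps)


if __name__ == "__main__":
    theta, x = 0.3, 2.0
    print("finite difference:", finite_difference(theta, x))
    print("exact (IFT):      ", exact_gradient(x))
    print("1-step approx:    ", one_step_gradient(x))
```

In this toy case the 1-step gradient has the same sign as the exact one but a smaller magnitude, so it points in the right direction while skipping the inverse. Whether that holds well enough in a real network is an empirical question.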

3. Adaptive Computation with Q-Learning

Not all problems require the same amount of reasoning. HRM incorporates an adaptive computation mechanism to dynamically allocate more computational resources to harder problems and stop early on easier ones.

How Adaptive Computation Works in HRM

- Q-Head: HRM includes a Q-learning “head” that predicts the value of two actions at each reasoning segment: “halt” or “continue.”

- Decision Process:

  - After each segment (a set of reasoning cycles), the Q-head evaluates whether to halt (output the current solution) or continue reasoning.

  - The decision is based on the predicted Q-values and a minimum/maximum segment threshold.

- Reinforcement Learning: The Q-head is trained using Q-learning, where:

  - Halting yields a reward if the prediction is correct.

  - Continuing yields no immediate reward but allows further refinement.

- Stability: HRM achieves stable Q-learning without the usual tricks (like replay buffers) by combining RMSNorm in the architecture with the AdamW optimizer, whose weight decay helps keep weights bounded.
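
The halting logic described in this list can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ code: the function names, the segment limits, and the exact form of the “continue” target (a standard bootstrapped Q-learning target with zero immediate reward and no discounting) are choices made for the example.

```python
def should_halt(q_halt, q_continue, segment, min_segments, max_segments):
    """Decide whether to stop after the given (1-based) reasoning segment."""
    if segment >= max_segments:
        return True  # hard cap on computation
    # Below the minimum, keep reasoning regardless of the Q-values.
    return segment >= min_segments and q_halt > q_continue


def q_targets(prediction_correct, next_q_halt, next_q_continue, at_last_segment):
    """Training targets for the two actions (assumed form, see lead-in).

    Halting is rewarded with 1 if the current prediction is correct, else 0.
    Continuing has no immediate reward, so its target is the best value
    obtainable from the next segment.
    """
    target_halt = 1.0 if prediction_correct else 0.0
    if at_last_segment:
        target_continue = next_q_halt  # the only option left is to halt
    else:
        target_continue = max(next_q_halt, next_q_continue)
    return target_halt, target_continue


def run_segments(q_values, min_segments=1, max_segments=8):
    """Return the 1-based index of the segment at which reasoning stops."""
    for segment, (q_halt, q_continue) in enumerate(q_values, start=1):
        if should_halt(q_halt, q_continue, segment, min_segments, max_segments):
            return segment
    return len(q_values)


if __name__ == "__main__":
    # Hypothetical Q-head outputs (q_halt, q_continue) for three segments.
    hard_problem = [(0.1, 0.9), (0.2, 0.8), (0.9, 0.1)]
    easy_problem = [(0.95, 0.05)]
    print("hard problem stops at segment", run_segments(hard_problem))
    print("easy problem stops at segment", run_segments(easy_problem))
```

The minimum threshold forces some reasoning even when the Q-head is overconfident early, while the maximum bounds the cost of any single problem.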

Benefits:

- Efficiency: The model learns to “think fast” on easy problems and “think slow” (i.e., reason longer) on hard ones, mirroring human cognition.

- Resource Allocation: Computational resources are used where they matter most, improving both speed and accuracy.

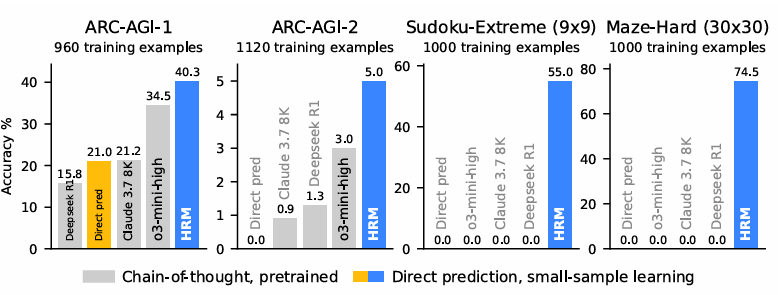

Key Advantages Over Chain-of-Thought and Transformers

- Greater Computational Depth: HRM can reach an effective reasoning depth far beyond its layer count within a single forward pass, unlike fixed-depth Transformers.

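- Data Efficiency: Achieves high performance on complex tasks with few training samples; the HRM paper reports results using about 1,000 training examples per task with a model of roughly 27 million parameters.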

- Biological Plausibility: Mimics the brain’s hierarchical organization, leading to emergent properties like dimensionality hierarchy.

- Scalability: Efficient memory usage and training stability, even for long reasoning chains.

Real-World Applications

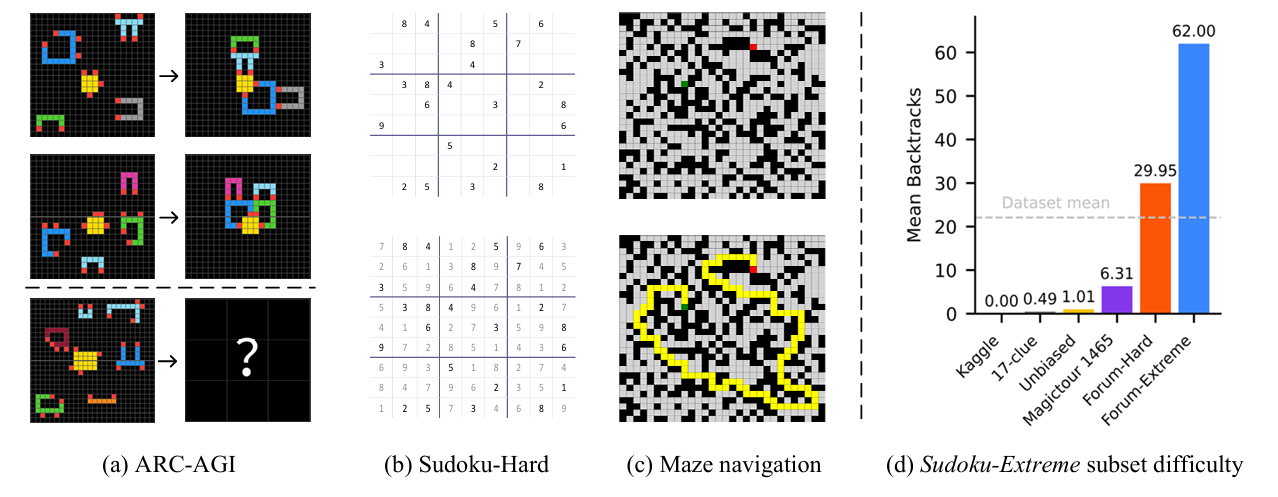

The hierarchical reasoning model has reported strong results on:

- Solving complex Sudoku puzzles and symbolic logic tasks

- Optimal pathfinding in large mazes

- Abstraction and Reasoning Corpus (ARC) benchmarks, a key test for artificial general intelligence

Beyond these benchmarks, a natural candidate application is general-purpose planning and decision-making in agentic AI systems. Together, these point to HRM’s potential to power next-generation AI systems capable of robust, flexible, and generalizable reasoning.

Challenges and Future Directions

While the hierarchical reasoning model is a breakthrough, several challenges remain:

Interpretability:

Understanding the internal reasoning strategies of HRMs is still an open research area.

Integration with memory and attention:

Future models may combine HRM with hierarchical memory systems for even greater capability.

Broader adoption:

As HRM matures, expect to see its principles integrated into mainstream AI frameworks and libraries.

Frequently Asked Questions (FAQ)

Q1: What makes the hierarchical reasoning model different from standard neural networks?

A: HRM uses a nested, recurrent structure that allows for multi-level, adaptive reasoning, unlike standard fixed-depth networks.

Q2: How does the hierarchical reasoning model achieve better performance on complex reasoning tasks?

A: By leveraging hierarchical modules and latent reasoning, HRM can perform deep, iterative computations efficiently.

Q3: Is HRM biologically plausible?

A: Yes, HRM’s architecture is inspired by the brain’s hierarchical processing and has shown emergent properties similar to those observed in neuroscience.

Q4: Where can I learn more about HRM?

A: Check out the arXiv paper on Hierarchical Reasoning Model by Sapient Intelligence and Data Science Dojo’s blog on advanced AI architectures.

Conclusion & Next Steps

The hierarchical reasoning model represents a paradigm shift in AI, moving beyond shallow, fixed-depth architectures to embrace the power of hierarchy, recurrence, and adaptive computation. As research progresses, expect HRM to play a central role in the development of truly intelligent, general-purpose AI systems.

Ready to dive deeper?

Explore more on Data Science Dojo’s blog for tutorials, case studies, and the latest in AI research.