Shape your model’s performance using LLM parameters.

Imagine you have a super-smart computer program. You type something into it, like a question or a sentence, and you want it to guess what words should come next. This program doesn’t just guess randomly; it’s like a detective that looks at all the possibilities and says, “Hmm, these words are more likely to come next.”

It makes an extensive list of words and says, “Here are all the possible words that could come next, and here’s how likely each one is.” But here’s the catch: it only gives you one word, and that word depends on how you tell the program to make its guess. You set the rules, and the program follows them.

So, it’s like asking your computer buddy to finish your sentences, but it’s super smart and calculates the odds of each word being the right fit based on what you’ve typed before.

That’s how this model works, like a word-guessing detective, giving you one word based on how you want it to guess.

A Brief Introduction to Large Language Model Parameters

Large Language Model (LLM) parameters are the fundamental components that define how an AI model processes, understands, and generates human-like text. Strictly speaking, the parameters are the numerical weights and biases the neural network learns during training, spread across components such as its attention layers and feed-forward layers. Together with the model’s architecture and layer configuration, they determine its ability to learn patterns, interpret context, and produce meaningful responses.

In general, LLMs with more parameters show more sophisticated understanding and text generation, although the quality of the training data and the training methods matter as well. These models power various natural language processing (NLP) tasks, such as machine translation, chatbots, content creation, and sentiment analysis, making them essential tools in modern AI applications.

How Do LLM Parameters Work?

Several factors shape how an LLM behaves: its architecture, model size, training data, and hyperparameters. The core component is the transformer architecture, which enables LLMs to process and generate text efficiently. LLMs are trained on vast datasets, learning patterns and relationships between words and phrases.

They represent words (more precisely, tokens) as numerical vectors called embeddings, which allows them to process and generate text. During training, these models adjust their parameters (weights and biases) to minimize a loss function that measures the difference between their predictions and the actual next tokens in the data. Let’s have a look at the key parameters in detail.
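As a rough illustration of minimizing the difference between predictions and actual data, the sketch below computes the cross-entropy loss for a single next-token prediction, the quantity language models are commonly trained to minimize. The function name `next_token_loss` and the toy probabilities are hypothetical; a real model computes this over its whole vocabulary and averages it across many positions.

```python
import math


def next_token_loss(predicted_probs: dict[str, float], actual_next: str) -> float:
    """Cross-entropy for one prediction: the negative log of the
    probability the model assigned to the token that actually came next."""
    p = predicted_probs.get(actual_next, 0.0)
    if p <= 0.0:
        return math.inf  # the model ruled out the correct token entirely
    return -math.log(p)


# Hypothetical predictions for the word after "The cat sat on the"
before_training = {"mat": 0.2, "roof": 0.3, "moon": 0.5}
after_training = {"mat": 0.7, "roof": 0.2, "moon": 0.1}

print(round(next_token_loss(before_training, "mat"), 3))  # 1.609
print(round(next_token_loss(after_training, "mat"), 3))   # 0.357
```

The loss falls as the probability of the correct word rises from 0.2 to 0.7; training nudges the weights in whatever direction produces that kind of drop across the whole dataset.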

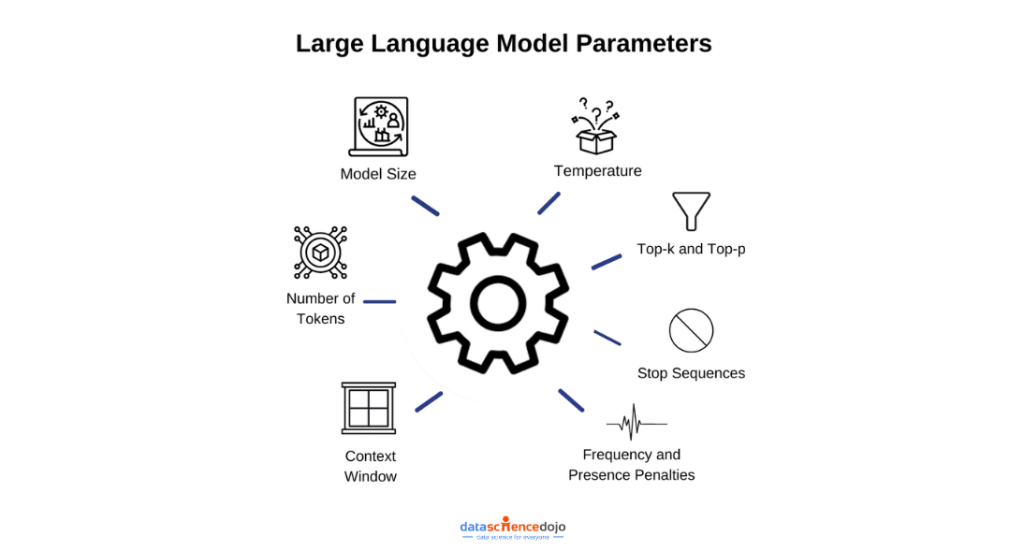

1. Model:

The model size refers to the number of parameters in the LLM. A parameter is a variable that is learned by the LLM during training. The model size is typically measured in billions or trillions of parameters. A larger model size will typically result in better performance, but it will also require more computing resources to train and run.

The term model also refers to a specific instance of an LLM trained on a corpus of text. Different models have varying sizes and are suited to different tasks. For example, GPT-3 is a large model with 175 billion parameters, making it highly capable in various natural language understanding and generation tasks.
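A common back-of-the-envelope rule for GPT-style decoder-only transformers is that each layer holds roughly 12 × d_model² weights (about 4 × d_model² in attention and 8 × d_model² in a feed-forward block with a hidden size of 4 × d_model), plus a vocab_size × d_model embedding matrix. The sketch below applies that approximation, which ignores biases, layer norms, and position embeddings, to GPT-3’s published shape (96 layers, d_model = 12,288, a 50,257-token vocabulary) and estimates the memory the weights alone would need at 2 bytes per parameter. The function names are hypothetical.

```python
def estimate_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a GPT-style decoder-only transformer.

    Per layer: ~4*d^2 for attention (Q, K, V and output projections)
    plus ~8*d^2 for a feed-forward block with a 4*d hidden size.
    Biases, layer norms and position embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


def weight_memory_gb(n_params: int, bytes_per_param: int = 2) -> float:
    """Memory needed just to store the weights (16-bit floats by default)."""
    return n_params * bytes_per_param / 1e9


gpt3 = estimate_params(n_layers=96, d_model=12_288, vocab_size=50_257)
print(f"{gpt3 / 1e9:.1f}B parameters")               # 174.6B parameters
print(f"{weight_memory_gb(gpt3):.0f} GB of weights")  # 349 GB of weights
```

The estimate lands close to the 175 billion parameters reported for GPT-3, and the weights alone come to roughly 349 GB at 16-bit precision, before counting the extra memory needed during training or inference.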

2. Number of Tokens:

Here, the number of tokens refers to the size of the LLM’s vocabulary, that is, how many distinct tokens it can recognize and produce. A token is a unit of text, such as a whole word, a piece of a word, a punctuation mark, or a number.

Vocabulary sizes typically range from a few thousand to a few hundred thousand tokens. A larger vocabulary lets the model represent more words and phrases as single tokens, but it also enlarges the model’s embedding and output layers, which adds to the cost of training and running it.

The size of an LLM’s vocabulary affects how efficiently it represents language. For instance, GPT-2 uses a vocabulary of 50,257 tokens. Words that are not in the vocabulary are split into smaller pieces, so a larger vocabulary lets the model handle a wider range of words and phrases without breaking them apart.
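To show how vocabulary size changes the way text is split, here is a toy greedy longest-match tokenizer over a hypothetical vocabulary. It is only an illustration: real tokenizers, such as GPT-2’s byte-level BPE, build and apply their vocabularies differently, and the function name `greedy_tokenize` is an assumption.

```python
def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text by repeatedly taking the longest vocabulary entry that
    matches at the current position; unknown characters become single tokens."""
    tokens = []
    i = 0
    while i < len(text):
        for j in range(len(text), i, -1):  # try the longest piece first
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            tokens.append(text[i])  # nothing matched: emit one character
            i += 1
    return tokens


small_vocab = {"un", "believ", "able"}
large_vocab = small_vocab | {"unbelievable"}

print(greedy_tokenize("unbelievable", small_vocab))  # ['un', 'believ', 'able']
print(greedy_tokenize("unbelievable", large_vocab))  # ['unbelievable']
```

A larger vocabulary covers the same text with fewer tokens, which leaves more room in the context window, but every extra vocabulary entry adds a row to the model’s embedding and output matrices.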

3. Temperature:

The temperature is a parameter that controls the randomness of the LLM’s output. A higher temperature gives less likely words a better chance of being chosen, producing more varied and creative text, while a lower temperature makes the output more focused and predictable. A low temperature does not by itself make the output more factual.

For example, a temperature close to 0 makes the LLM almost always pick the most likely next word, while a temperature of 1.0 samples from the model’s unmodified probabilities. If you set the temperature to 2.0, the distribution flattens and less likely words are chosen more often, which can make the text more creative but also less coherent.
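Temperature is commonly applied by dividing the model’s raw scores (logits) by T before turning them into probabilities with a softmax. The minimal sketch below shows that effect on three made-up scores; the function name and the numbers are hypothetical.

```python
import math


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities after dividing by the temperature.
    T < 1 sharpens the distribution; T > 1 flattens it."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy decoding for T = 0")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    max_s = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - max_s) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


logits = {"mat": 2.0, "rug": 1.0, "moon": 0.0}  # hypothetical scores
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 2) for tok, p in probs.items()})
```

With these scores, the probability of mat is about 0.99 at temperature 0.2, about 0.67 at 1.0, and about 0.51 at 2.0. A temperature of exactly 0 is usually implemented as greedy decoding (always picking the top token) rather than by dividing by zero.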

4. Context Window:

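The context window is the maximum number of tokens the LLM can consider at once, including both the input and the text it has generated so far. A larger context window allows the LLM to generate more contextually relevant text, but it also makes training and inference more computationally expensive; in a standard transformer, the cost of attention grows quadratically with the sequence length.

For example, if the context window were only 2 tokens, the LLM would see just the two tokens immediately before the position it is predicting. It cannot look ahead at words that have not been generated yet.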

The context window determines how far back in the text the model looks when generating responses. A longer context window improves coherence over long conversations, which is crucial for chatbots.

For example, when generating a story, a context window of 1,024 tokens (the size used by GPT-2) helps keep names and plot details consistent, as long as they appeared within the most recent 1,024 tokens.
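Because the model cannot see anything outside its context window, applications that hold long conversations usually drop or summarize the oldest text. The sketch below shows the simplest strategy, keeping only the most recent tokens. It splits on whitespace as a stand-in for a real tokenizer, and the function name `fit_to_context` is hypothetical.

```python
def fit_to_context(history: list[str], context_window: int) -> list[str]:
    """Keep only the most recent tokens that fit in the context window.
    Anything older is simply invisible to the model."""
    if context_window <= 0:
        return []  # guard: history[-0:] would return the whole list
    return history[-context_window:]


# Whitespace splitting stands in for a real tokenizer here.
conversation = "my name is Ada . what is my name ?".split()
print(fit_to_context(conversation, 4))   # ['is', 'my', 'name', '?']
print(fit_to_context(conversation, 10))  # the whole exchange fits
```

With a window of 4 tokens, the name Ada has already fallen out of view, so the model would have no way to answer the question; with a window of 10, the whole exchange fits.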

5. Top-k and Top-p:

These techniques restrict which tokens can be sampled. Top-k keeps only the k most likely tokens. Top-p (also called nucleus sampling) keeps the smallest set of most likely tokens whose cumulative probability reaches the threshold p, so the number of candidates grows or shrinks with the model’s confidence. Top-k helps cut off unlikely, nonsensical continuations, while Top-p adapts at each step and can preserve diversity.

For example, if you set Top-k to 10, the LLM will only consider the 10 most probable next words. A small k tends to make the text more predictable but less diverse. If you set Top-p to 0.9, the LLM samples only from the most likely words whose probabilities add up to at least 0.9, discarding the long tail of unlikely words. Raising p toward 1.0 admits more candidates and produces more diverse text, at some risk to fluency.
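The two filters can be sketched in a few lines of Python, assuming the model’s next-word probabilities are already available as a dictionary. The function names and the probabilities are hypothetical; sampling uses the standard library’s `random.choices`.

```python
import random


def top_k_filter(probs: dict[str, float], k: int) -> dict[str, float]:
    """Keep the k most probable tokens and renormalize."""
    kept = dict(sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k])
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the smallest set of top tokens whose probabilities add up
    to at least p (nucleus sampling), then renormalize."""
    kept = {}
    cumulative = 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: q / total for tok, q in kept.items()}


probs = {"mat": 0.5, "rug": 0.3, "sofa": 0.15, "moon": 0.05}  # hypothetical
print(sorted(top_k_filter(probs, 2)))    # ['mat', 'rug']
print(sorted(top_p_filter(probs, 0.9)))  # ['mat', 'rug', 'sofa']

# Sample one word from the Top-p candidates.
rng = random.Random(0)
filtered = top_p_filter(probs, 0.9)
choice = rng.choices(list(filtered), weights=list(filtered.values()))[0]
print(choice)
```

With Top-p = 0.9, sofa survives because mat and rug together reach only 0.8, while moon is discarded. If the model were more confident (say, mat at 0.95), Top-p would keep just one word, whereas Top-k = 2 would always keep two.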

6. Stop Sequences:

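Stop sequences tell the LLM where to stop generating. When the model produces one of the specified strings, generation halts, and the stop sequence itself is usually left out of the returned text. This is useful for limiting output to a single answer, a single list item, or a single turn in a dialogue. Stop sequences are not a content filter: they end the output when a string appears rather than preventing the model from producing harmful content.

For example, in a chat transcript you could add a stop sequence consisting of a newline followed by “User:”, so that the model stops after writing its own reply instead of continuing with an invented next message from the user.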
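LLM APIs typically check for stop sequences while tokens are being generated, so the model does no work past the stop point. The sketch below imitates only the visible effect, after the fact, by cutting a finished string at the earliest stop sequence. The function name `apply_stop_sequences` and the sample transcript are hypothetical.

```python
def apply_stop_sequences(generated: str, stop_sequences: list[str]) -> str:
    """Cut the output at the earliest occurrence of any stop sequence.
    The stop sequence itself is not included in the result."""
    cut = len(generated)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop string would match at position 0
        idx = generated.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return generated[:cut]


raw = "A: Paris is the capital of France.\nUser: And Spain?"
print(apply_stop_sequences(raw, ["\nUser:"]))  # A: Paris is the capital of France.
```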

7. Frequency and Presence Penalties:

Frequency Penalty lowers the probability of a token in proportion to how many times it has already appeared in the text so far. This is useful for preventing the LLM from generating repetitive text. Presence Penalty applies a one-time penalty to any token that has already appeared at least once, regardless of how often. This nudges the LLM toward introducing new words and topics.

Both penalties discourage repetition when set to positive values; they differ in whether the penalty grows with each repetition (frequency) or stays fixed once a token has appeared (presence). For instance, a frequency penalty can stop a model from repeating the same phrase over and over in a long answer, while a presence penalty can encourage it to cover a wider range of topics in a brainstorming list.
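The sketch below follows the adjustment OpenAI has documented for its API: each token’s score (logit) is reduced by its count times the frequency penalty, plus the presence penalty once if the count is above zero. Other providers may implement penalties differently, and the function name, scores, and history here are hypothetical.

```python
from collections import Counter


def apply_penalties(logits: dict[str, float], generated_so_far: list[str],
                    frequency_penalty: float, presence_penalty: float) -> dict[str, float]:
    """Lower the score of tokens that have already been generated.

    frequency_penalty is multiplied by how many times the token appeared;
    presence_penalty is subtracted once if the token appeared at all.
    """
    counts = Counter(generated_so_far)
    adjusted = {}
    for tok, score in logits.items():
        c = counts[tok]  # Counter returns 0 for unseen tokens
        adjusted[tok] = score - c * frequency_penalty - (1.0 if c > 0 else 0.0) * presence_penalty
    return adjusted


logits = {"very": 2.0, "quite": 1.5, "good": 1.0}  # hypothetical scores
history = ["very", "very", "very", "good"]
print(apply_penalties(logits, history, frequency_penalty=0.5, presence_penalty=0.0))
# {'very': 0.5, 'quite': 1.5, 'good': 0.5}
print(apply_penalties(logits, history, frequency_penalty=0.0, presence_penalty=0.5))
# {'very': 1.5, 'quite': 1.5, 'good': 0.5}
```

With only a frequency penalty, very (used three times) drops from 2.0 to 0.5 and falls behind quite; with only a presence penalty, very and good each lose the same flat 0.5, no matter how often they appeared.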

LLM Parameters Example

Consider a chatbot built on GPT-3 (model). To maintain coherent conversations, it benefits from a long context window that holds as much of the conversation as possible (context window). To keep each reply to a single turn, it uses stop sequences that end generation before the model starts writing the user’s next message (stop sequences). Temperature is set low to keep answers precise and on-topic, and Top-k limits sampling to the few most likely tokens at each step (temperature, Top-k).
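The generation settings in this example can be tied together in one decoding step. This is a minimal sketch under stated assumptions: the order (penalties, then temperature, then Top-k, then Top-p) mirrors common open-source samplers but is not universal, and the function name, defaults, and scores are hypothetical. Model size, vocabulary, and context window are fixed by the chosen model and do not appear here.

```python
import math
import random
from collections import Counter


def sample_next_token(logits, history, *, temperature=0.3, top_k=3, top_p=0.9,
                      frequency_penalty=0.0, presence_penalty=0.0, rng=None):
    """One hypothetical decoding step:
    penalties -> temperature -> top-k -> top-p -> random sample."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng or random.Random()
    counts = Counter(history)

    # 1. Penalize tokens that already appear in the output so far.
    scores = {t: s - counts[t] * frequency_penalty
                 - (presence_penalty if counts[t] > 0 else 0.0)
              for t, s in logits.items()}

    # 2. Temperature-scaled softmax (subtracting the max avoids overflow).
    m = max(scores.values())
    exps = {t: math.exp((s - m) / temperature) for t, s in scores.items()}
    total = sum(exps.values())
    ranked = sorted(((t, e / total) for t, e in exps.items()),
                    key=lambda kv: kv[1], reverse=True)

    # 3. Top-k: keep the k most probable tokens, then renormalize.
    ranked = ranked[:max(top_k, 1)]
    k_total = sum(p for _, p in ranked)
    ranked = [(t, p / k_total) for t, p in ranked]

    # 4. Top-p: keep the smallest prefix whose probability mass reaches top_p.
    kept, mass = [], 0.0
    for t, p in ranked:
        kept.append((t, p))
        mass += p
        if mass >= top_p:
            break

    # 5. Sample; random.choices accepts weights that do not sum to 1.
    tokens, weights = zip(*kept)
    return rng.choices(tokens, weights=weights)[0]


# Hypothetical scores for the first word of a chatbot reply.
logits = {"Hello": 2.5, "Hi": 2.0, "Greetings": 0.5, "Yo": -1.0}
rng = random.Random(42)
print(sample_next_token(logits, history=[], rng=rng))
```

With these defaults, the low temperature puts most of the probability on Hello, and Top-p then keeps only Hello and Hi as candidates. Stop sequences are not part of a single step; a generation loop would append each sampled token to the text and check the accumulated string against its stop sequences.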

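Choosing a model fixes its size, vocabulary, and maximum context window, while settings such as temperature, Top-k, Top-p, stop sequences, and penalties can be adjusted per request without retraining. Together, these parameters make LLMs adaptable to diverse applications, from chatbots to content generation and translation.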

Shape the Capabilities of LLMs

LLMs have diverse applications, such as chatbots (e.g., ChatGPT), language translation, text generation, sentiment analysis, and more. They can generate human-like text, answer questions, and perform various language-related tasks. LLMs have found use in automating customer support, content creation, language translation, and data analysis, among other fields.

For example, in customer support, LLMs can provide instant responses to user queries, improving efficiency. In content creation, they can generate articles, reports, and even code snippets based on provided prompts. In language translation, LLMs can translate text between languages, often with high accuracy for widely used languages.

In summary, large language model parameters are essential for shaping the capabilities and behavior of LLMs, making them powerful tools for a wide range of natural language processing tasks.