Language models have become the go-to technology for chatbots and virtual assistants, handling basic tasks like generating text and answering straightforward questions. However, when it comes to solving complex problems in areas such as drug discovery, materials science, or advanced coding, these models fall short.

This is where OpenAI’s new o1 model series comes in. By shifting focus from simple language tasks to complex reasoning, o1 aims to bridge the gap and make AI a valuable tool for tackling sophisticated, real-world challenges.

In this week’s dispatch, we’ll explore what makes the OpenAI o1 series different, its unique training approach, and how it could transform knowledge work in fields like scientific research and mathematics.

The Must-Read Section

Why OpenAI o1 Series: What Makes It Different?

The o1 series, which includes both o1-preview and o1-mini, is a product of OpenAI’s continuous effort to develop more intelligent and versatile models. It is built specifically to spend more time thinking and reasoning before it answers.

The o1 models are trained with a different method than the previous GPT models, and they also process your queries differently.

Let’s first understand how it has been trained differently.

1. Chain-of-Thought Reasoning:

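The OpenAI o1 models are trained to use “chain-of-thought” reasoning, which lets them break complex problems into smaller, more manageable steps. The technique itself predates o1; what is new is that the models are trained to apply it on their own rather than waiting to be prompted for it.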

This method allows the models to handle intricate tasks that require multi-step reasoning, such as solving advanced mathematical problems, debugging complex code, or conducting in-depth scientific analysis.

As the model breaks down a task, reflects on it, and plans how to carry it out, it can catch its own mistakes and try a different approach, which tends to produce better reasoning, much like it does for humans.

Learn more about various agentic frameworks that can improve the performance of AI apps

2. Smarter Training with Human Guidance:

The OpenAI o1 models are trained with reinforcement learning from human feedback (RLHF). This means they’re not just learning to generate text; they’re learning to think in ways that align with human reasoning and values. This training helps them navigate complex queries more effectively while adhering to nuanced safety and ethical guidelines, which makes them more reliable and trustworthy in sensitive applications.

3. Hidden Thought Process:

A unique aspect of the OpenAI o1 series is its use of “reasoning tokens.” These tokens let the model internally explore multiple approaches before finalizing a response, kind of like brainstorming different solutions before settling on the best one. While you don’t see this internal process, it results in a more thoughtful and refined answer without overwhelming users with too much information.
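Although the reasoning itself stays hidden, the token count does not have to. Here is a minimal sketch of how you might split a response's output tokens into hidden and visible parts. It assumes a usage dictionary shaped like the one OpenAI's Chat Completions API reports, with reasoning tokens counted under `completion_tokens_details.reasoning_tokens`; the function name and the numbers are made up for illustration.

```python
def split_completion_tokens(usage: dict) -> dict:
    """Separate hidden reasoning tokens from visible answer tokens.

    Assumes `usage` follows the shape described in the lead-in; a missing
    details field is treated as zero reasoning tokens.
    """
    completion = usage["completion_tokens"]
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    return {
        "reasoning": reasoning,
        "visible": completion - reasoning,
        "hidden_share": reasoning / completion if completion else 0.0,
    }


# Made-up numbers, for illustration only.
example_usage = {
    "prompt_tokens": 50,
    "completion_tokens": 1200,
    "completion_tokens_details": {"reasoning_tokens": 900},
}

breakdown = split_completion_tokens(example_usage)
print(breakdown)
```

In this made-up example, three quarters of the output tokens are ones the user never sees.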

4. Strategic Use of Compute Power:

o1’s strength also lies in how it uses computational resources. Traditional models put a lot of emphasis on the pretraining phase, but o1 allocates more compute to the reasoning process during both training and inference.

This means it can take the time to think through a problem more thoroughly, resulting in higher accuracy and more sophisticated reasoning. It’s like giving the model the ability to “pause and think” before answering.
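To get a feel for why extra compute at answer time can help, here is a toy sketch of one well-known idea: sample several answers and take a majority vote. This is not how o1 works internally (OpenAI has not published that); `noisy_solver` is a hypothetical stand-in for a model that is right 60% of the time, and all numbers are assumptions chosen for illustration.

```python
import random
from collections import Counter

CORRECT_ANSWER = 42  # hypothetical ground truth for the toy problem


def noisy_solver(rng: random.Random, p_correct: float = 0.6) -> int:
    """Stand-in for one sampled answer: right with probability p_correct."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    # Wrong answers are scattered over several nearby values.
    return CORRECT_ANSWER + rng.choice([-2, -1, 1, 2])


def majority_vote(answers: list) -> int:
    """Return the most frequent answer among the samples."""
    return Counter(answers).most_common(1)[0][0]


def accuracy(samples_per_question: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        answers = [noisy_solver(rng) for _ in range(samples_per_question)]
        hits += majority_vote(answers) == CORRECT_ANSWER
    return hits / trials


for k in (1, 5, 25):
    print(f"{k:>2} samples per question -> accuracy {accuracy(k):.3f}")
```

More samples cost more compute per question, but because the wrong answers disagree with each other, the correct one wins the vote more often.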

Why It Matters: A New Frontier, But at a Cost

The OpenAI o1 model’s enhanced reasoning capabilities set a new standard for AI, making it a powerful tool for complex problem-solving and not just for smaller tasks like language generation.

Its ability to think through problems step-by-step allows it to excel in areas like advanced coding, scientific research, and strategic planning. This depth of reasoning could transform how AI is used in critical fields, providing more reliable and nuanced insights.

However, these advancements come at a high price, both literally and in terms of speed. The o1 models require significantly more computational resources, making them slower and up to six times more expensive than their predecessors. This makes them less suitable for applications where cost-efficiency and response time are crucial. Despite these limitations, the o1 models’ potential to handle complex, high-stakes scenarios with greater accuracy could justify the investment for those who need advanced reasoning capabilities.
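A quick back-of-the-envelope sketch shows how the bill can grow. It assumes hidden reasoning tokens are billed as output tokens, and it uses placeholder prices (a reasoning model priced at six times a baseline per token); none of these figures are real price-list values, so plug in current ones before drawing conclusions.

```python
def request_cost(prompt_tokens: int, visible_tokens: int, reasoning_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one request in dollars, given prices per million tokens.

    Assumption: reasoning tokens are billed at the output-token rate.
    """
    billed_output = visible_tokens + reasoning_tokens
    total = prompt_tokens * input_price_per_m + billed_output * output_price_per_m
    return total / 1_000_000


# Placeholder prices: baseline at $1 / $2 per million input / output tokens,
# reasoning model at six times that. Token counts are also made up.
baseline = request_cost(1000, 500, 0, input_price_per_m=1.0, output_price_per_m=2.0)
reasoning = request_cost(1000, 500, 2000, input_price_per_m=6.0, output_price_per_m=12.0)

print(f"baseline:  ${baseline:.4f}")
print(f"reasoning: ${reasoning:.4f}")
print(f"ratio:     {reasoning / baseline:.1f}x")
```

Under these assumptions the per-request gap is wider than the per-token gap, because the hidden reasoning tokens are added to the billed output.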

Incorporating Agentic Frameworks in Language Models for Better Performance

The o1 model’s focus on structured reasoning and decision-making highlights the potential of agentic frameworks for more complex tasks. These frameworks enable language models to act autonomously, making decisions and adapting to changing conditions in real time. By combining capabilities such as planning, memory, and goal-driven behavior, agentic designs allow AI to tackle intricate, multi-step problems more effectively.
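To make the three ingredients concrete, here is a deliberately tiny sketch of an agent loop. It is not taken from any real framework; `ToyAgent` and its number-reaching task are hypothetical, and a real agent would call a language model inside `plan` instead of applying a fixed rule.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    goal: int                                   # goal-driven behavior: the target state
    memory: list = field(default_factory=list)  # memory: record of past steps

    def plan(self, state: int) -> str:
        """Planning: pick the next action by comparing the state to the goal."""
        if state > 0 and state * 2 <= self.goal:
            return "double"
        return "increment"

    def act(self, state: int, action: str) -> int:
        """Apply the chosen action to the current state."""
        return state * 2 if action == "double" else state + 1

    def run(self, state: int, max_steps: int = 50) -> int:
        """Loop until the goal is reached or the step budget runs out."""
        for _ in range(max_steps):
            if state == self.goal:
                break
            action = self.plan(state)
            state = self.act(state, action)
            self.memory.append((action, state))
        return state


agent = ToyAgent(goal=10)
final_state = agent.run(1)
print(final_state, agent.memory)
```

The loop re-plans after every step using the current state, which is the part that lets an agent adapt when conditions change.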

Here’s a great talk by Coursera co-founder Andrew Ng, where he discusses the potential of agentic patterns in AI.

To connect with LLM and data science professionals, join our Discord server now!

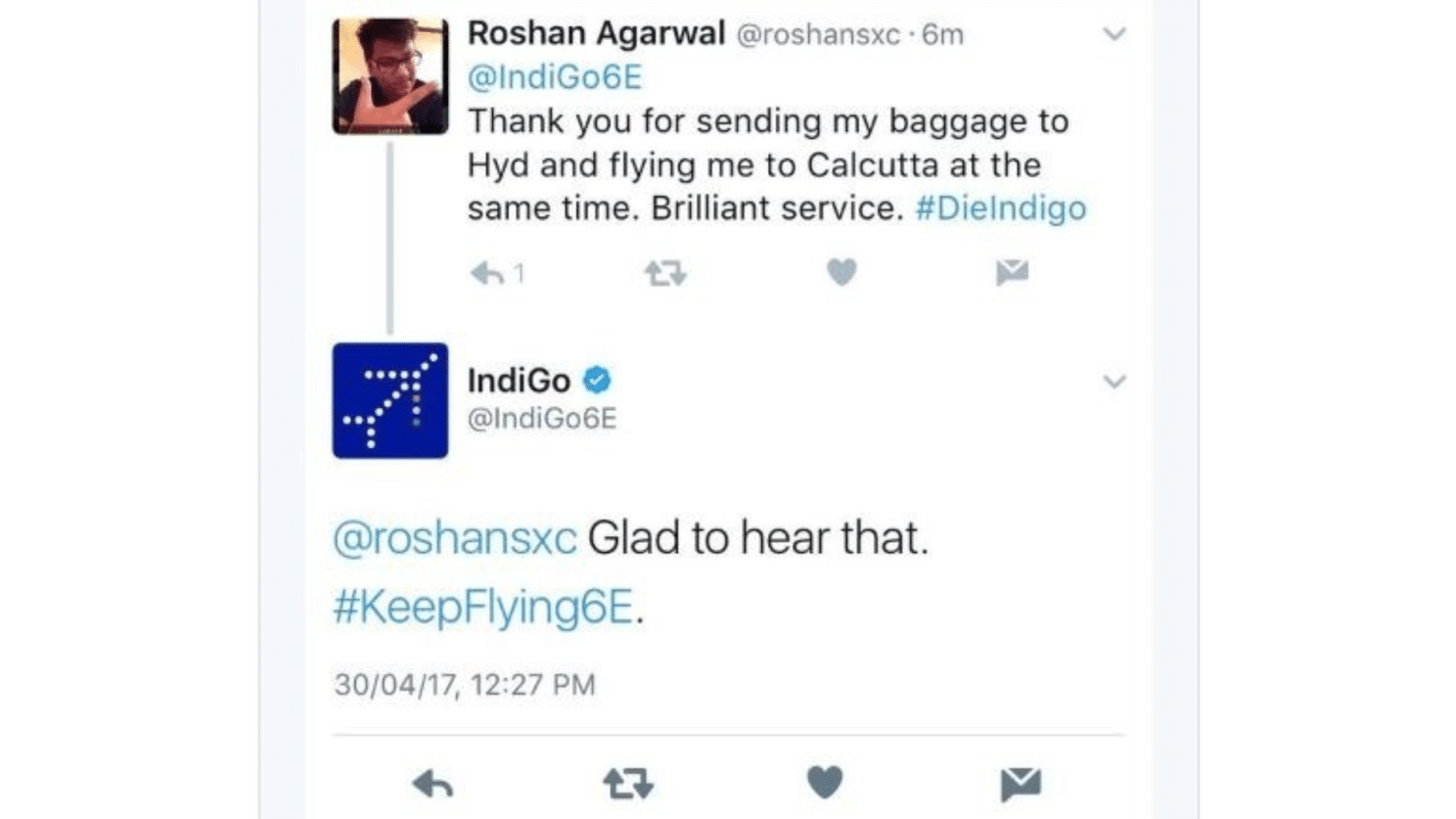

This one never gets old!

Gain Practical Experience in Building LLM Applications

Want to learn how to build secure and scalable enterprise AI applications with the latest language models?

Data Science Dojo offers the most comprehensive bootcamp in the industry, teaching you how to build end-to-end LLM applications. Our program offers the right mix of theory and hands-on exercises so that you’re equipped to lead AI projects in your organization.

The best part about the bootcamp is that it is an immersive experience. You can get your questions answered live.

It’s the only training you’ll need! Talk to an advisor now.

To close out this week, let’s look at some intriguing developments unfolding in the AI story.

- SambaNova has made the largest language models in the Llama 3.1 family accessible at a faster speed, and it’s free to use.

- Amazon has acquired Covariant’s core team and technology, including its AI models.

- California is considering 38 AI-related bills, including SB 1047, addressing various AI issues from existential risks to deepfakes and AI clones of deceased performers.

- Jony Ive confirms he’s working on a new device with OpenAI.

- Google CEO Sundar Pichai announces $120M fund for global AI education.