Organizations increasingly rely on LinkedIn to build brand presence, run campaigns, and engage with their professional community. While LinkedIn provides native dashboards, many companies want to bring LinkedIn data into a data warehouse, such as Azure Synapse, for unified analytics alongside CRM, financial, and other marketing data.

This guide shows you how to:

- Get LinkedIn Community Management API access

- Authenticate and fetch post statistics

- Build an Azure Synapse pipeline to land data into ADLS as Parquet

Why Extract LinkedIn Data?

LinkedIn is one of the most valuable platforms for B2B marketing, employer branding, and thought leadership. However, relying solely on LinkedIn’s native dashboards limits how deeply organizations can analyze their campaign and engagement data. Exporting this data into Azure Synapse unlocks richer insights and advanced analytics by enabling cross-platform comparisons and custom reporting.

Here’s why organizations extract LinkedIn data into Azure Synapse:

- Understand What Works: Identify which posts, campaigns, or content types generate the highest impressions, clicks, and engagement rates over time.

- Know Your Audience: Analyze audience demographics such as job titles, industries, and company sizes to better tailor your messaging.

- Measure ROI: Combine campaign data with ad spend and lead metrics to calculate true marketing ROI.

- Create Custom Dashboards: Go beyond LinkedIn’s standard analytics with Power BI visualizations that blend multiple data sources.

- Connect the Dots: Integrate LinkedIn analytics with data from Google Ads, Facebook, HubSpot, or Salesforce for a unified marketing performance view.

- Optimize Performance: Use machine learning and automation within Azure Synapse to predict engagement trends and optimize posting strategies.

By centralizing LinkedIn analytics in Azure Synapse, businesses move from reactive monitoring to proactive decision-making, with data-driven campaign planning, deeper audience insights, and unified performance tracking.

Let’s Get Started with the Tutorial

In this section, we’ll walk through the complete setup process, from creating a LinkedIn app and generating API access tokens to configuring a REST connection and building a data pipeline in Azure Synapse. By the end, you’ll have an automated workflow that pulls your LinkedIn Page analytics directly into your Azure Synapse workspace for unified reporting and analysis.

Step 1: Create a LinkedIn App

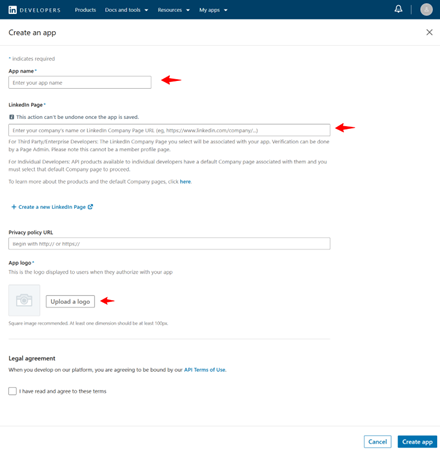

- Visit the LinkedIn Developer portal.

- Click the Create App button.

- Fill in the required information. Make sure you have a LinkedIn Page before you start.

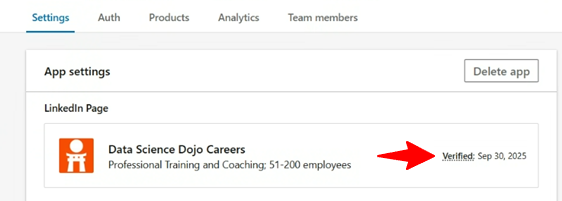

- Go to the Settings tab and click the Verify button. Share the generated link with the administrator of your LinkedIn company page. The administrator must verify the app to grant it access to company data. Confirm that the app shows as verified before you continue.

Step 2: Request Access to the API & Generate Access Token

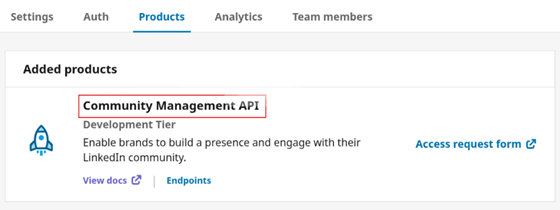

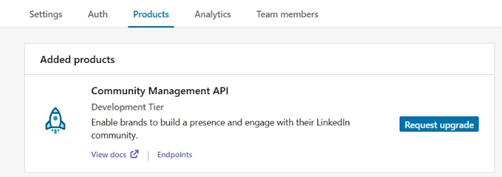

- Once your app is verified, navigate to the Products tab and request access to the necessary APIs.

- If you need LinkedIn Page data for organic content and page analytics, such as posts, followers, reactions, comments, shares, and engagement metrics, request access to the Community Management API. This API lets developers manage LinkedIn company pages on behalf of clients and access related account details (admins, roles, follower details) and analytics, including comments, reactions, and other page update activity.

- Fill in the access request form.

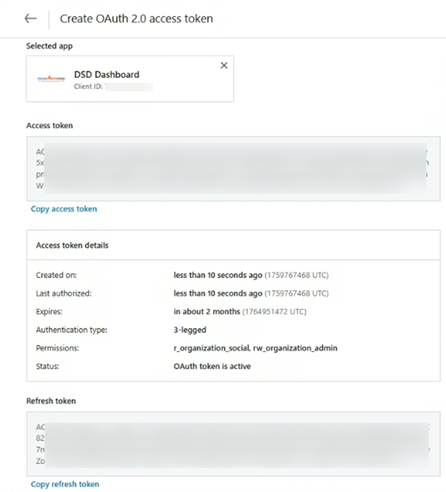

- Once the application has been approved for the development tier, generate an access token.

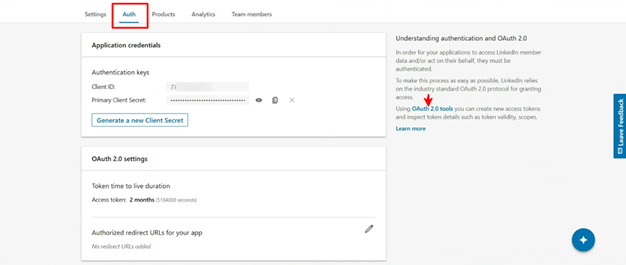

- Click OAuth 2.0 tools on the right-hand side of the page under Auth.

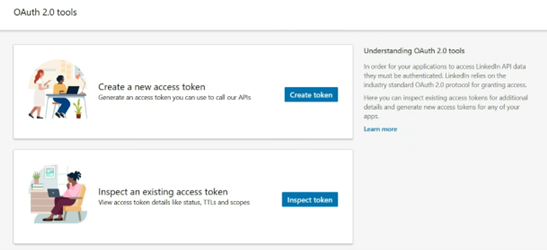

- Click the Create token button to begin the authorization process.

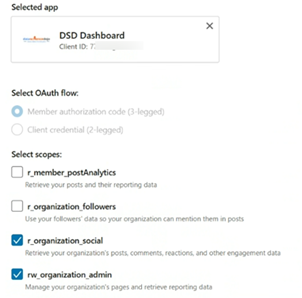

- Select the required scopes for Page data access:

  - r_organization_social

  - rw_organization_admin

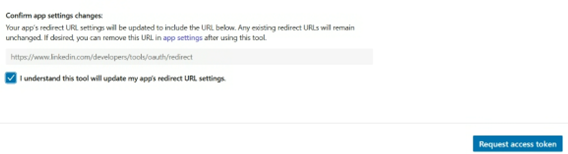

- After selecting the appropriate scopes, click Request access token.

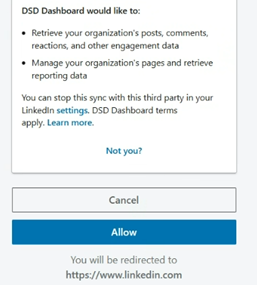

- On the next screen, click Allow to authorize the app.

- After the tokens are generated, copy and securely store both the access token and the refresh token.
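Because the access token eventually expires, it can help to see how the stored refresh token would be exchanged for a new one. The following is a minimal sketch, assuming your app is eligible for programmatic refresh tokens and that LinkedIn's standard OAuth 2.0 token endpoint applies. The function name and the placeholder credentials are illustrative and not part of the original walkthrough. The request is only built here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

# LinkedIn's OAuth 2.0 token endpoint (assumed to apply to your app).
TOKEN_URL = "https://www.linkedin.com/oauth/v2/accessToken"


def build_refresh_request(refresh_token: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) the POST that trades a refresh token for a new access token."""
    form = urlencode({
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    return Request(
        TOKEN_URL,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Placeholder credentials (hypothetical); load real values from a secret store.
request = build_refresh_request("<refresh_token>", "<client_id>", "<client_secret>")
print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` should return a JSON body containing a new `access_token`, which would then replace the value used in the Authorization header.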

Step 3: Create a REST Linked Service in Azure Synapse or Data Factory Using the UI

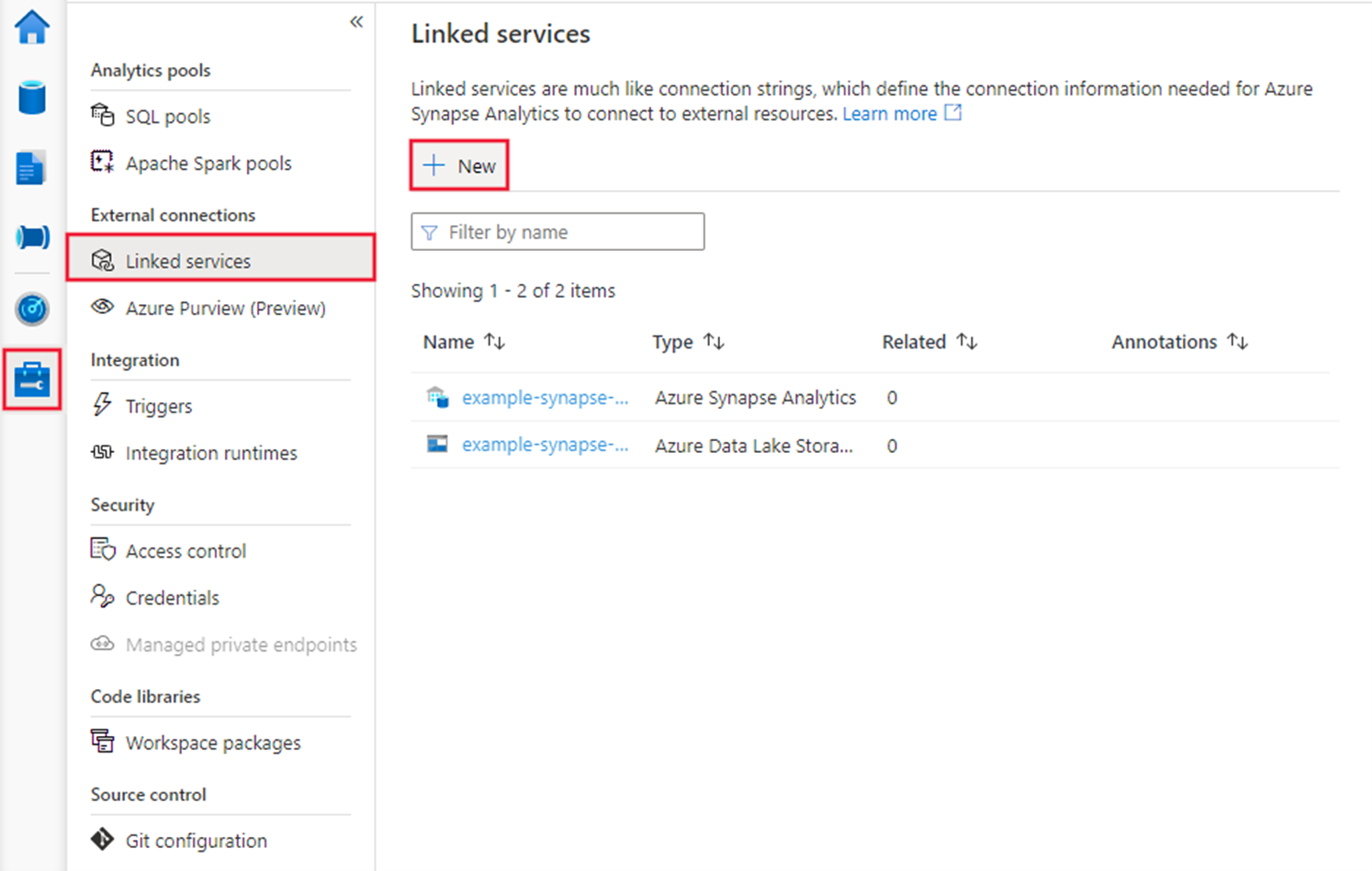

- Browse to the Manage tab in your Azure Synapse or Data Factory workspace and select Linked Services, then select New:

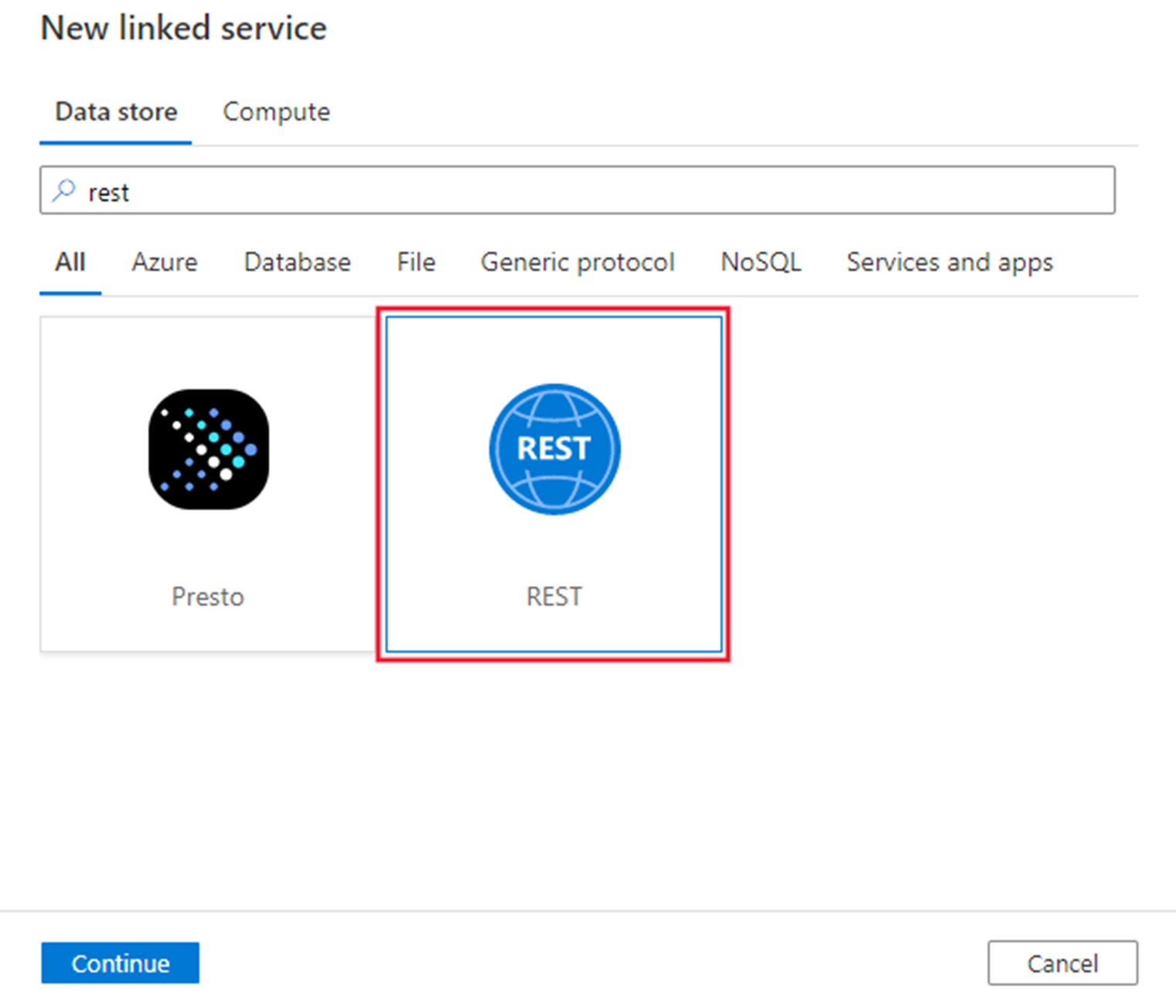

- Search for REST and select the REST connector.

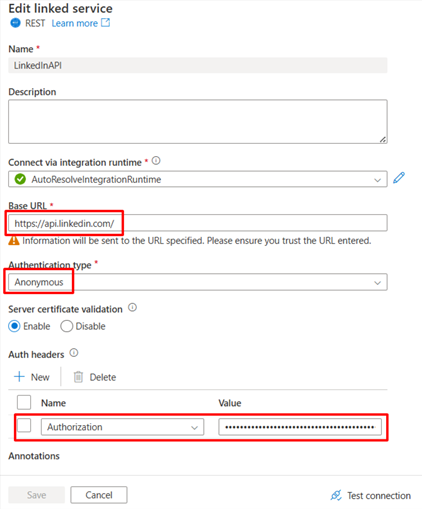

- Configure the service details, test the connection, and create the new linked service.

  - Base URL: https://api.linkedin.com/

  - Authentication type: Anonymous

  - Under Auth headers, click + New and add:

    - Name: Authorization

    - Value: Bearer <your_access_token> (replace with the valid LinkedIn access token, not the refresh token).
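For readers who prefer to template the linked service rather than click through the UI, the settings above can be sketched as a JSON definition. This is an approximation based on the REST connector's documented JSON shape (`RestService`, `authenticationType`, `authHeaders`). The linked service name `LinkedInRest` is made up, and the output should be compared with the JSON view in your own workspace before use.

```python
import json


def rest_linked_service(name: str, access_token: str) -> dict:
    """Return a REST linked service definition mirroring the UI settings above."""
    return {
        "name": name,
        "properties": {
            "type": "RestService",
            "typeProperties": {
                "url": "https://api.linkedin.com/",
                "enableServerCertificateValidation": True,
                # No built-in auth; the bearer token travels in an auth header.
                "authenticationType": "Anonymous",
                "authHeaders": {
                    "Authorization": {
                        "type": "SecureString",
                        "value": f"Bearer {access_token}",
                    }
                },
            },
        },
    }


# "LinkedInRest" is a hypothetical name; the token is a placeholder.
definition = rest_linked_service("LinkedInRest", "<your_access_token>")
print(json.dumps(definition, indent=2))
```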

Step 4: Add and Configure the Data Pipeline

Once your linked service is ready, it’s time to create the data pipeline that connects everything from LinkedIn API to your data lake.

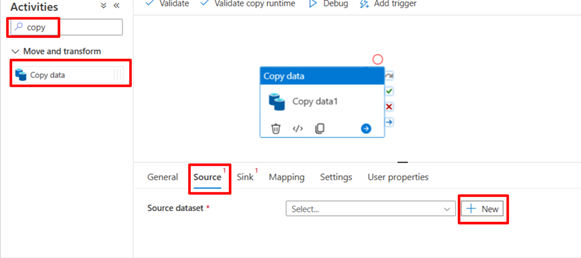

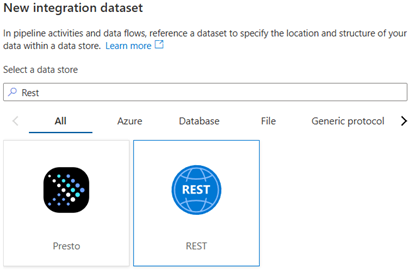

- Add a Copy activity and an integration dataset in Azure Synapse or Data Factory.

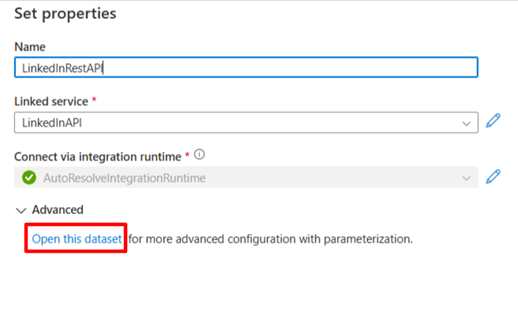

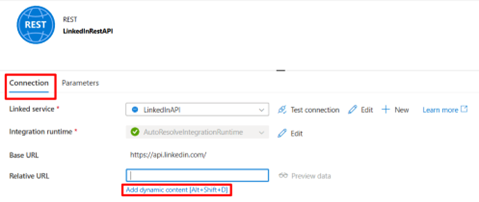

- Name the integration dataset, select the linked service, and open the dataset.

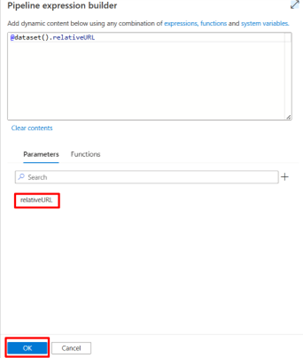

- Under Parameters, add a new parameter to make the relative URL dynamic

- Under Connection, add dynamic content for the Relative URL and select the newly added parameter

- Commit/Save the integration dataset

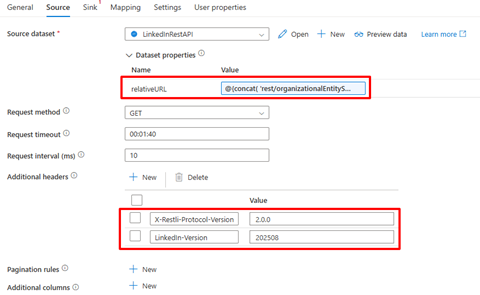

- Fetch Monthly Aggregated Post Statistics

We’ll use the organizationalEntityShareStatistics endpoint to extract post-level analytics (impressions, clicks, reactions, and so on). For time-bound queries, the endpoint returns share (post) data only for a rolling 12-month window.

Output Schema Example:

| Field | Type | Description |
| --- | --- | --- |
| clickCount | long | Number of clicks |
| commentCount | long | Number of comments |
| engagement | double | Engagement rate (clicks, likes, comments, shares per impression) |
| impressionCount | long | Number of impressions |
| likeCount | long | Number of likes |
| shareCount | long | Number of shares |
| uniqueImpressionsCount | long | Number of unique impressions |

Sample Request
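As an illustration of what such a request can look like, here is a small sketch that assembles the relative URL for a time-bound query. It assumes the versioned `rest/` path, the `q=organizationalEntity` finder, and monthly granularity. The organization ID `123456` is made up, and the helper name is hypothetical.

```python
from urllib.parse import quote

ENDPOINT = "rest/organizationalEntityShareStatistics"


def build_relative_url(org_id: int, start_ms: int, end_ms: int, granularity: str = "MONTH") -> str:
    """Build the relative URL for time-bound share statistics of one organization."""
    # With Rest.li protocol 2.0.0, URNs in query parameters are URL-encoded.
    org_urn = quote(f"urn:li:organization:{org_id}", safe="")
    time_intervals = (
        f"(timeRange:(start:{start_ms},end:{end_ms}),"
        f"timeGranularityType:{granularity})"
    )
    return (
        f"{ENDPOINT}?q=organizationalEntity"
        f"&organizationalEntity={org_urn}"
        f"&timeIntervals={time_intervals}"
    )


# 123456 is a made-up organization ID.
# The timestamps are 2024-09-01 and 2025-09-01 (00:00 UTC) in milliseconds.
print(build_relative_url(123456, 1725148800000, 1756684800000))
```

The resulting string is what would go into the dataset's Relative URL parameter, appended to the linked service's base URL.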

Dynamic URL for the Last 12 Months

To automate the date range, use the following dynamic expression in Relative URL within your Copy Activity:

Explanation:

- Base Path: Fetches LinkedIn page post statistics for a specific organization.

- Time Range: Defines a 12-month rolling window, from the start of the month one year ago to the current date.

- Dynamic Generation: Converts Azure date functions into LinkedIn’s required Unix timestamp format (milliseconds since 1970).
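The date arithmetic described above can be checked outside the pipeline. The sketch below is a Python equivalent of the window calculation, not the Azure expression itself: it takes the first day of the month one year before "now" as the start, uses "now" as the end, and converts both to Unix milliseconds. The function names are illustrative.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_ms(moment: datetime) -> int:
    """Convert a timezone-aware datetime to milliseconds since 1970-01-01 UTC."""
    return (moment - EPOCH) // timedelta(milliseconds=1)


def rolling_window_ms(now: datetime) -> tuple[int, int]:
    """Return (start_ms, end_ms): start of the month one year before `now`, up to `now`."""
    # `now` is expected to be timezone-aware; it is normalized to UTC first.
    now = now.astimezone(timezone.utc)
    start = datetime(now.year - 1, now.month, 1, tzinfo=timezone.utc)
    return to_epoch_ms(start), to_epoch_ms(now)


# Fixed example date so the result is reproducible; use datetime.now(timezone.utc) in practice.
start_ms, end_ms = rolling_window_ms(datetime(2025, 9, 15, 12, 0, tzinfo=timezone.utc))
print(start_ms, end_ms)
```

Comparing these values with the timestamps in the URL your pipeline actually sends is a quick way to catch unit mistakes, such as seconds instead of milliseconds.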

Additional Headers

- X-Restli-Protocol-Version: 2.0.0

- LinkedIn-Version: 202508
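Before wiring these headers into the Copy activity, it can be useful to reproduce the call locally. The following sketch uses only the Python standard library. The token and organization ID are placeholders, the function names are illustrative, and `fetch` is defined but not called because it performs a real network request.

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "https://api.linkedin.com/"


def build_request(access_token: str, relative_url: str, version: str = "202508") -> Request:
    """Attach the bearer token and the two LinkedIn headers to a GET request."""
    return Request(
        BASE_URL + relative_url,
        headers={
            "Authorization": f"Bearer {access_token}",
            "X-Restli-Protocol-Version": "2.0.0",
            "LinkedIn-Version": version,
        },
    )


def fetch(request: Request) -> dict:
    """Send the request and decode the JSON body (performs a real network call)."""
    with urlopen(request) as response:
        return json.load(response)


# Placeholder token and made-up organization ID.
request = build_request(
    "<your_access_token>",
    "rest/organizationalEntityShareStatistics"
    "?q=organizationalEntity&organizationalEntity=urn%3Ali%3Aorganization%3A123456",
)
print(request.full_url)
```

If a local call with a real token succeeds but the pipeline fails, the difference is usually in the headers or in the encoding of the Relative URL.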

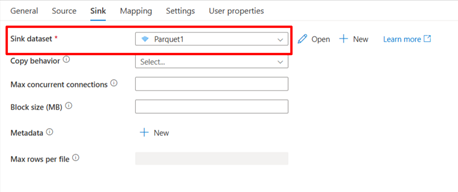

- Under the Sink tab, select a Parquet integration dataset to store your LinkedIn data as Parquet files in ADLS.

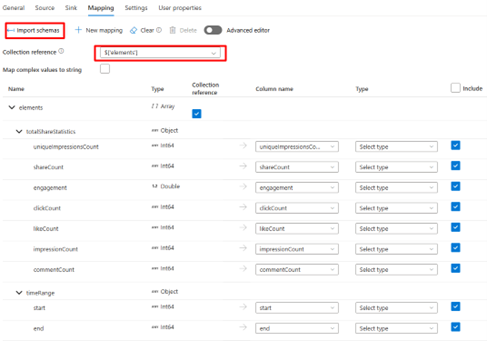

- Go to the Mapping tab:

  - Click Import Schemas

  - Change the Collection reference to elements[]

  - Click Import Schemas again

  - Remove any paging objects and fix malformed column names
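To make the effect of the mapping concrete, the sketch below flattens a response the same way: it iterates over `elements[]`, ignores the `paging` object, and lifts the nested statistics into flat column names. The sample payload is made up, and its shape (`timeRange`, `totalShareStatistics`, `organizationalEntity`) is an assumption about the time-bound response, so verify it against a real response.

```python
def flatten_elements(payload: dict) -> list[dict]:
    """Turn each item of `elements[]` into one flat row; the paging object is ignored."""
    rows = []
    for element in payload.get("elements", []):
        time_range = element.get("timeRange", {})
        row = {
            "organizationalEntity": element.get("organizationalEntity"),
            "timeRangeStart": time_range.get("start"),
            "timeRangeEnd": time_range.get("end"),
        }
        # Lift the nested statistics (clickCount, likeCount, ...) to top-level columns.
        row.update(element.get("totalShareStatistics", {}))
        rows.append(row)
    return rows


# Made-up payload in the assumed shape of a time-bound statistics response.
sample = {
    "paging": {"start": 0, "count": 10, "links": []},
    "elements": [
        {
            "organizationalEntity": "urn:li:organization:123456",
            "timeRange": {"start": 1725148800000, "end": 1727740800000},
            "totalShareStatistics": {
                "clickCount": 12,
                "commentCount": 3,
                "engagement": 0.05,
                "impressionCount": 400,
                "likeCount": 4,
                "shareCount": 1,
                "uniqueImpressionsCount": 250,
            },
        }
    ],
}

rows = flatten_elements(sample)
print(rows[0])
```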

Step 5: Run and Validate

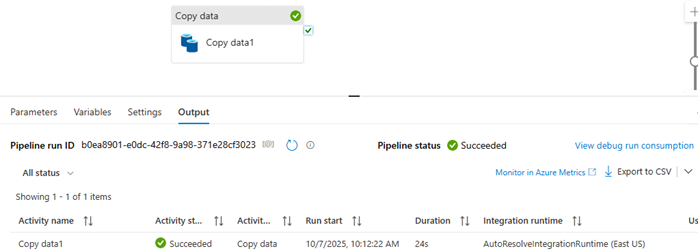

- Debug the pipeline to execute your Copy Activity.

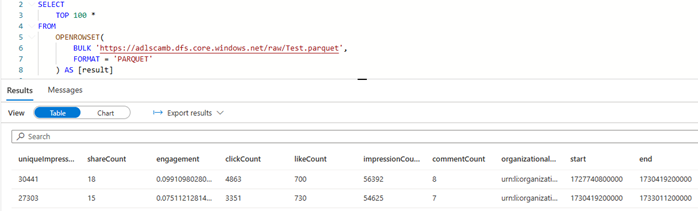

- Verify the data output in your ADLS container.

- Optionally, create a view in Azure Synapse serverless SQL pool for Power BI to consume.

Summary

By connecting the LinkedIn Community Management API with Azure Synapse or Azure Data Factory, you can automate the ingestion and transformation of critical marketing metrics, including:

- Post and share analytics

- Follower growth and engagement trends

- Page performance metrics

- Social metadata and reactions

- Video engagement and view-through analytics

This automated integration ensures that your LinkedIn data flows into Azure Data Lake Storage (ADLS) in Parquet format, ready for querying, transformation, or visualization in Power BI.

Ultimately, this setup empowers your marketing and analytics teams to:

- Access up-to-date campaign insights in a single, scalable data warehouse, refreshed as often as the pipeline runs

- Combine LinkedIn data with CRM, financial, and web analytics datasets

- Track performance and ROI across all digital touchpoints

- Build richer, more actionable marketing intelligence dashboards

In short, integrating LinkedIn data into Azure Synapse transforms fragmented platform metrics into a cohesive, analytics-ready data foundation, enabling smarter, faster, and more informed business decisions.
